Sensors are vital to smartphones today

We use the sensors embedded in our smartphones every day, often without even realizing it. The screen flips as you hold your phone in a landscape position because an accelerometer has detected the change. When there’s an arrow pointing the way you are facing on a map, that’s because a magnetometer is acting as a digital compass. The ambient light sensor tells your screen to brighten when you go outside, and the proximity sensor tells your device to deactivate the touch screen when you hold the phone to your ear.

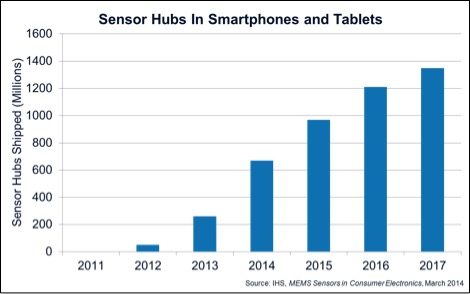

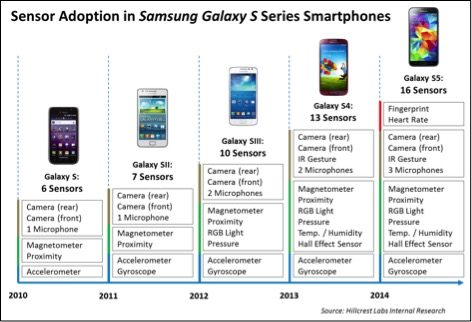

These examples barely scratch the surface of how sensors are used in smartphones today. The latest phones contain as many as 16 sensors that make the phone aware of the environment and the user in order to power new applications, enable longer battery life and deliver better user experiences. This trend has given rise to a new category of embedded processors called “sensor hubs” that have specialized software to efficiently manage the sensors and provide accurate, context-aware information to the smartphone’s applications.

Sensors used in Samsung Galaxy S smartphones

The benefits produced by sensors can broadly be described in three categories:

Contextually aware devices

By sensing motion, biological information, the environment and more, sensors can power a more natural and intuitive user experience. For example, if the phone detects that you are driving in a car, it can reduce distractions by letting through only essential communications and enabling voice rather than touch controls. Or, if you are walking and ask for directions, it can default to walking directions rather than driving. Even a feature as simple as summarizing what you “missed” when you pick up a phone that has been lying face down on a table for a while can make for a better user experience.

Sensor-enabled user experiences

Sensors enable a range of new applications that can improve the functionality of mobile devices. Examples today include activity trackers, which monitor your daily activity to encourage a healthier lifestyle, and gestures, which act as interface shortcuts that reduce the need for dozens of touch-screen taps. With sensors becoming more advanced, we’ll soon see phones that are able to guide you to your platform at the train station, even without sight of a GPS satellite, and power virtual reality headsets through accessories such as Google Cardboard.

Lower power consumption

Sensors can be used to conserve power, based on device context. For example, if the phone is sitting on a desk in your office and has not moved in several hours, the phone does not have to sample the GPS or otherwise update its location. Similar techniques can be used to intelligently control whether the screen turns on – there’s no point if it is in your pocket – or the rate at which sensors sample data to enable a much more power-efficient system. While individually these are incremental benefits, the total power savings add up to enable longer battery life for smartphones and wearable devices.
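The context rules described above can be sketched in a few lines of Python. This is only an illustration, not how any particular sensor hub is implemented: the `DeviceContext` fields, the function names and every threshold are hypothetical values chosen for the example.

```python
from dataclasses import dataclass

# All thresholds below are assumptions made for this sketch, not vendor values.
STATIONARY_THRESHOLD_S = 600.0   # treat the phone as "parked" after 10 minutes without motion
DARK_LUX = 5.0                   # light level below which the phone is probably covered
IDLE_RATE_HZ = 1.0               # slow sampling while nothing is happening
ACTIVE_RATE_HZ = 50.0            # faster sampling while the phone is moving


@dataclass
class DeviceContext:
    """Hypothetical snapshot of what the sensors currently report."""
    seconds_stationary: float    # time since the accelerometer last saw motion
    proximity_covered: bool      # True when the proximity sensor is blocked
    ambient_lux: float           # ambient light sensor reading


def should_sample_gps(ctx: DeviceContext) -> bool:
    """Skip location updates while the phone has not moved for a long time."""
    return ctx.seconds_stationary < STATIONARY_THRESHOLD_S


def should_wake_screen(ctx: DeviceContext) -> bool:
    """Do not light the screen when the phone appears to be in a pocket or bag."""
    in_pocket = ctx.proximity_covered and ctx.ambient_lux < DARK_LUX
    return not in_pocket


def sensor_sample_rate_hz(ctx: DeviceContext) -> float:
    """Lower the sensor sampling rate while the phone is stationary."""
    if ctx.seconds_stationary >= STATIONARY_THRESHOLD_S:
        return IDLE_RATE_HZ
    return ACTIVE_RATE_HZ


# A phone that has sat on an office desk for three hours.
on_desk = DeviceContext(seconds_stationary=3 * 3600, proximity_covered=False, ambient_lux=300.0)
# A phone that was just dropped into a pocket.
in_pocket = DeviceContext(seconds_stationary=2.0, proximity_covered=True, ambient_lux=0.5)
```

Each rule saves only a little on its own, which is the point made above: the savings come from applying many such small decisions continuously.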

Sensor hubs maximize sensor benefits

To enable these user-experience benefits we need the sensors to be “always-on” and gathering sensor data regardless of whether the device is actively being used. That means we need to have a way of gathering, filtering and analyzing sensor data without consuming huge amounts of the phone’s battery or processing resources. That has led to the rise of a specialized processor known as a sensor hub.

A sensor hub is a separate processing element that is tasked solely with managing sensor data. This processor is selected and tuned so that it is able to constantly manage the sensors and analyze their data while consuming a very small amount of the typical phone’s battery life. This makes the goal of “always-on” sensor processing a reality – if we tried to process the sensor data using the application processor then the battery would last just a few hours, thus delivering a very poor user experience.

The most notable example of a sensor hub is probably Apple’s M8 “motion co-processor,” as featured in the iPhone 6. The M8 sensor hub is connected to only four sensors: an accelerometer, gyroscope, magnetometer and pressure sensor. It monitors all-day energy expenditure, detects whether you are walking or driving, and saves power by detecting the context of the phone. Other sensor hubs can manage up to nine sensors and are consequently able to detect more contexts, track more activities and enable more applications. Sensor hubs are key components in hundreds of millions of phones shipped each year, including market leaders like the iPhone 6 and Samsung Galaxy S5, and are quickly becoming standard in even midrange smartphones.

The possibilities are limitless

Now that we have this foundation in place – a dozen or more sensors in the latest mobile devices and multiple streams of data being processed in real time using very little power – there are new and exciting opportunities to use the data to make an even greater impact on the lives of users. Some examples include:

Health and wellness tracking

We all know sleep is important, but it’s difficult to actually analyze how well you sleep and why. The phone of the future could use the sensor hub to track ambient light conditions, noise and your sleeping patterns during the night, and combine that data with your previous daily activity to identify specific ways to improve your sleep. Additionally, by combining data from your calendar with heart rate, activity, breathing, ambient noise and even your tone of voice, your phone could gain insight into your mood and stress level, and make suggestions to help you manage your emotions in a positive manner.

Indoor and pedestrian navigation

Navigation is one of the primary apps used today, but it uses a lot of power and is only effective when there is a clear view of the sky. That means we can’t use it indoors to find a platform at the train station or a store at the mall, or in downtown “urban canyon” environments. By using a sensor hub with dead-reckoning algorithms, we can support traditional navigation techniques with inertial sensors on the device to track movement with no external reference points. This dramatically lowers power consumption and makes navigation and associated location-based services a “use anywhere” technology.
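To make the dead-reckoning idea concrete, here is a minimal pedestrian sketch in Python. It assumes that step detection and heading estimation have already been done (for example from the accelerometer and magnetometer) and that every step has the same fixed length; the function name `dead_reckon` and the 0.7 m default are illustrative assumptions, not part of any real sensor hub API.

```python
import math


def dead_reckon(start, step_headings_deg, step_length_m=0.7):
    """Estimate a walking path from detected steps, with no external reference.

    start             -- (east, north) starting position in metres
    step_headings_deg -- one compass heading per detected step,
                         in degrees clockwise from north
    step_length_m     -- assumed fixed stride length (hypothetical default)
    """
    east, north = start
    path = [(east, north)]
    for heading_deg in step_headings_deg:
        theta = math.radians(heading_deg)
        # A compass heading is measured from north, so sine gives the
        # eastward component and cosine the northward component.
        east += step_length_m * math.sin(theta)
        north += step_length_m * math.cos(theta)
        path.append((east, north))
    return path


# Two steps north, then two steps east, with a 1 m stride for easy arithmetic.
path = dead_reckon((0.0, 0.0), [0, 0, 90, 90], step_length_m=1.0)
final_east, final_north = path[-1]
```

Because each step is added to the previous estimate, small errors in heading or stride length accumulate over time, which is why dead reckoning supports rather than replaces traditional positioning.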

Virtual reality

Virtual reality is poised to enable new ways to play, learn and experience the world. However, it relies on tricking the brain into believing the virtual world is real, which means using sensors to translate real-world actions into the virtual world with the greatest precision possible. That requires a finely tuned sensor hub that tracks your movements accurately enough to make the experience as realistic and fun as possible.

These applications only begin to scratch the surface. What if our phones had air quality gauges to tell us when it’s not safe for children to exercise outside due to harmful pollutants? What if our phones could act as breathalyzers to reduce drunk-driving accidents? What if a phone could function as a portable EKG and identify early warning signs of a heart attack? These are just a few of the powerful applications that could be unleashed by the technology contained in sensor hubs today.

Sensor hubs are enabling an intelligent, context-aware personalized platform for billions of users around the world. How we use them will not be limited by cost, power or processing ability, but by our imaginations.

Editor’s Note: In an attempt to broaden our interaction with our readers we have created this Reader Forum for those with something meaningful to say to the wireless industry. We want to keep this as open as possible, but we maintain some editorial control to keep it free of commercials or attacks. Please send along submissions for this section to our editors at: dmeyer@rcrwireless.com.

Photo copyright: 123RF Stock Photo