Artificial neural networks use deep learning to interpret images; underlying tech for self-driving vehicles

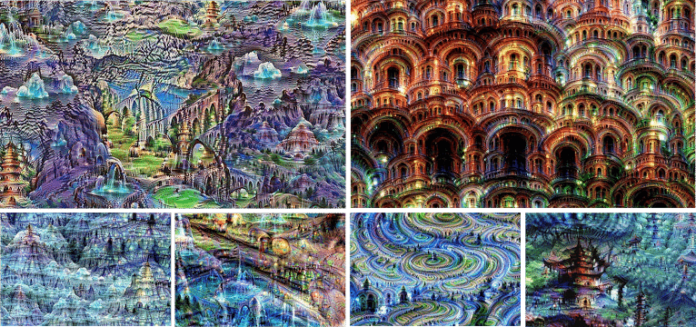

In a particularly meta experiment involving artificial neural networks, Google software engineers have visualized how an artificial intelligence makes sense of visual noise.

Software engineer Alexander Mordvintsev, software engineering intern Christopher Olah and software engineer Mike Tyka, all of Google, discussed their work in a June 17 post to the Google Research Blog.

Artificial neural networks are loosely modeled on the human brain. A network consists of stacked layers of artificial neurons. An input enters the network, and each layer refines the interpretation of that input until the final layer outputs an answer.

Take a self-driving vehicle as an example: the car's AI receives an image of a road sign as input. The computer first picks out lines and shapes, then progressively more complex features, until it finally recognizes the image as a road sign.

“The first layer maybe looks for edges or corners. Intermediate layers interpret the basic features to look for overall shapes or components, like a door or a leaf. The final few layers assemble those into complete interpretations—these neurons activate in response to very complex things such as entire buildings or trees,” the blog notes.
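The layer-by-layer process can be sketched in a few lines of Python. This is a toy illustration, not Google's network: the four-pixel input, the hand-picked weights and the two labels are all hypothetical, whereas a real network learns millions of weights from training images.

```python
import math

def relu(values):
    # A neuron "activates" only when its weighted input is positive.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One layer: each neuron computes a weighted sum of its inputs plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    # Turn raw scores into probabilities that sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical, hand-picked weights (a trained network would learn these).
HIDDEN_LAYERS = [
    # Layer 1: each neuron fires when a pixel is brighter than its
    # right-hand neighbor, a crude edge detector.
    ([[1.0, -1.0, 0.0, 0.0],
      [0.0, 1.0, -1.0, 0.0],
      [0.0, 0.0, 1.0, -1.0]], [0.0, 0.0, 0.0]),
    # Layer 2: combines neighboring edge responses into larger features.
    ([[1.0, 1.0, 0.0],
      [0.0, 1.0, 1.0]], [0.0, 0.0]),
]
OUTPUT_LAYER = ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0])
LABELS = ["road sign", "not a road sign"]

def forward(pixels):
    # Pass the input through each layer in turn, keeping every
    # intermediate result so the progression can be inspected.
    activations = [pixels]
    current = pixels
    for weights, biases in HIDDEN_LAYERS:
        current = relu(dense(current, weights, biases))
        activations.append(current)
    probabilities = softmax(dense(current, *OUTPUT_LAYER))
    return activations, probabilities

activations, probabilities = forward([0.9, 0.1, 0.8, 0.2])
label = LABELS[probabilities.index(max(probabilities))]
print(label)
```

Printing `activations` shows the raw pixels, then the edge responses, then the combined features, which is the same progression the engineers describe on a far larger scale.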

The trio of Googlers wanted to visualize what that interpretation process looks like from the AI’s perspective. To that end, they decided to “turn the network upside down and ask it to enhance an input image in such a way as to elicit a particular interpretation. Say you want to know what sort of image would result in ‘banana.’ Start with an image full of random noise, then gradually tweak the image towards what the neural net considers a banana. By itself, that doesn’t work very well, but it does if we impose a prior constraint that the image should have similar statistics to natural images, such as neighboring pixels needing to be correlated.”
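The "start from noise and gradually tweak" idea is a form of gradient ascent on the image itself. The sketch below is a minimal stand-in, not the engineers' code: a hypothetical linear scorer plays the role of the network's "banana" neuron, the "image" is just eight pixels in a row, and the natural-image prior is assumed to be a simple penalty on differences between neighboring pixels.

```python
import random

# Hypothetical stand-in for a trained "banana" neuron: it rewards bright
# pixels in the middle of an 8-pixel row and penalizes bright pixels at the ends.
WEIGHTS = [-0.5, -0.5, 0.5, 0.5, 0.5, 0.5, -0.5, -0.5]

def class_score(image):
    # How strongly the stand-in neuron responds to the image.
    return sum(w * p for w, p in zip(WEIGHTS, image))

def roughness(image):
    # Sum of squared differences between neighbors; low when
    # neighboring pixels are correlated.
    return sum((b - a) ** 2 for a, b in zip(image, image[1:]))

def gradient(image, prior_strength):
    # Gradient of class_score(image) - prior_strength * roughness(image).
    grads = []
    last = len(image) - 1
    for i, pixel in enumerate(image):
        g = WEIGHTS[i]
        if i > 0:
            g -= 2 * prior_strength * (pixel - image[i - 1])
        if i < last:
            g -= 2 * prior_strength * (pixel - image[i + 1])
        grads.append(g)
    return grads

def tweak_toward_class(image, prior_strength, steps=500, step_size=0.1):
    # Repeatedly nudge every pixel uphill, keeping values in [0, 1].
    for _ in range(steps):
        grads = gradient(image, prior_strength)
        image = [min(1.0, max(0.0, p + step_size * g))
                 for p, g in zip(image, grads)]
    return image

rng = random.Random(0)
noise = [rng.random() for _ in WEIGHTS]  # an "image full of random noise"

without_prior = tweak_toward_class(noise, prior_strength=0.0)
with_prior = tweak_toward_class(noise, prior_strength=0.5)

print("score, noise:        ", round(class_score(noise), 3))
print("score, with prior:   ", round(class_score(with_prior), 3))
print("roughness, no prior: ", round(roughness(without_prior), 3))
print("roughness, prior:    ", round(roughness(with_prior), 3))
```

In this toy setting both runs raise the score well above that of the starting noise, and the run with the prior ends up with smoother transitions between neighboring pixels. The prior strength and step size are assumed values chosen for illustration only.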

They then took it one step further. After steering the AI toward a particular interpretation to see how it makes order out of chaos, the group continued to apply the same algorithms to the outputs the computer had generated.
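Feeding the output back in creates a feedback loop, which can be sketched as follows. This is a toy illustration under stated assumptions, not the engineers' method: the two "feature detectors", their patterns and the enhancement rate are hypothetical, and the image is a row of six pixels.

```python
# Hypothetical feature detectors, each defined by the pattern it responds to.
PATTERNS = {
    "stripes": [1.0, 0.0, 1.0, 0.0, 1.0, 0.0],
    "blob":    [0.0, 0.0, 1.0, 1.0, 0.0, 0.0],
}

def response(image, pattern):
    # Correlate the image with the mean-centered pattern.
    mean = sum(pattern) / len(pattern)
    return sum((t - mean) * p for t, p in zip(pattern, image))

def enhance(image, rate=0.2):
    # Find whichever feature the image resembles most, then nudge the
    # image a little further toward that feature.
    name = max(PATTERNS, key=lambda n: response(image, PATTERNS[n]))
    target = PATTERNS[name]
    return name, [p + rate * (t - p) for p, t in zip(image, target)]

def dream(image, passes):
    # Apply the enhancement to its own output, pass after pass.
    seen = []
    for _ in range(passes):
        name, image = enhance(image)
        seen.append(name)
    return image, seen

# A nearly flat image with only a faint hint of a blob in the middle.
start = [0.5, 0.5, 0.6, 0.6, 0.5, 0.5]
final, seen = dream(start, passes=10)

print(seen[0], [round(p, 2) for p in final])
```

The faint hint in the starting image is what the loop latches onto, and each pass makes it more pronounced, so a small initial resemblance grows into the dominant feature of the picture.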

The result is part Timothy Leary, part Vincent van Gogh and decidedly amazing. The resulting images have been billed as a glimpse of how an AI would dream, making new information out of data already inside the machine.

“The techniques presented here help us understand and visualize how neural networks are able to carry out difficult classification tasks, improve network architecture, and check what the network has learned during training,” the engineers posted. “It also makes us wonder whether neural networks could become a tool for artists – a new way to remix visual concepts – or perhaps even shed a little light on the roots of the creative process in general.”