All of the major mobile operators have begun using network functions virtualization (NFV), aiming for fully virtualized network infrastructures by 2020, but traditional server-based networking models limit performance and increase costs. NFV applications are I/O-intensive, and because network processing on a server can consume half of the available CPU cores, mobile operators can end up overspending on servers to deliver the required I/O performance. By offloading server-based network processing to SmartNICs, however, mobile operators can slash the number of servers needed for NFV infrastructure (NFVI) and accelerate NFVI processing.

Why mobile operators want NFV

Virtual network functions (VNFs)

Virtual network functions (VNFs) are available to handle all of the key functions of mobile core and edge operations. Examples include virtualized evolved packet core (vEPC) components such as the packet data network gateway (P-GW), the serving gateway (S-GW), and the mobility management entity (MME). Together these components make up the evolved packet core, and each can be instantiated as a VNF. When implemented on a server, VNFs can leverage virtual switching in the hypervisor to get rich networking services such as overlay network tunneling, security policy, and fine-grained statistics and metering.
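To make "overlay network tunneling" concrete, here is a minimal Python sketch of the 8-byte VXLAN header defined in RFC 7348, one common overlay encapsulation (the text does not say which protocol a given deployment uses). The function and constant names are illustrative; only the header layout, the I flag value 0x08, and UDP port 4789 come from the RFC. A software virtual switch performs this kind of encapsulation and decapsulation on every tunneled packet, using server CPU cycles to do it.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN (RFC 7348)
VXLAN_FLAG_I = 0x08    # "I" flag: the VNI field is valid
# Outer headers added per packet for VXLAN over IPv4 (no VLAN tag, no IP options):
# outer Ethernet (14) + outer IPv4 (20) + outer UDP (8) + VXLAN header (8)
VXLAN_IPV4_OVERHEAD = 14 + 20 + 8 + 8


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags byte followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header to an inner Ethernet frame.

    The outer UDP/IP/Ethernet headers are omitted in this sketch.
    """
    return vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    hdr = vxlan_header(5001)
    print(hdr.hex())            # 0800000000138900
    print(parse_vni(hdr))       # 5001
    print(VXLAN_IPV4_OVERHEAD)  # 50
```

Beyond the header manipulation itself, each tunneled IPv4 packet carries 50 extra bytes of outer headers that the switching layer must build or strip.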

NFV challenges

VNFs are typically very I/O-intensive: they must process a large volume of packets going in and out. NFV infrastructure relies on a virtual switching layer, which is typically a bottleneck because it is implemented on the server as part of the hypervisor. The hypervisor sits directly in the data path of the packets that must be delivered from the network to the server's memory.

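With a virtual machine running a VNF, packets travel from the network to the virtual switching layer (OVS, Contrail vRouter, VMware, etc.) and only then to the virtual machine. This creates three issues: the extra hop through the hypervisor adds latency, the switching work consumes server CPU cores, and the software switching layer can become a processing bottleneck.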

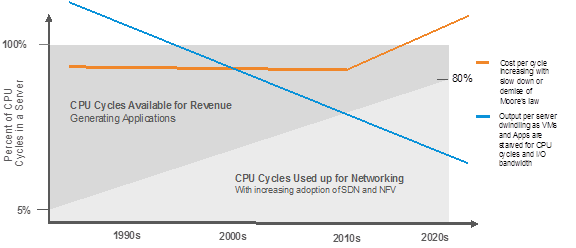

To make matters worse, the amount of CPU resources consumed by networking functions has been steadily increasing over time. This is due to three main factors:

1) The complexity of server-based networking functions has been increasing. A prime example is the more complex tunneling protocols, such as VXLAN and GRE, needed to support overlay networks.

2) Network port speeds continue to increase: from 1G to 10G, and now 25G, 40G, 50G, and even 100G server ports are not uncommon. Packet-per-second rates rise accordingly, dramatically increasing the server workload.

3) Server chip technologies are hitting the limits of Moore's Law, so available server CPU cycles are not growing fast enough to compensate for factors 1 and 2.
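Factors 2 and 3 can be made concrete with standard Ethernet arithmetic. A minimum-size 64-byte frame occupies 84 bytes on the wire once the 8-byte preamble/start-of-frame delimiter and the 12-byte minimum inter-frame gap are counted. The short Python sketch below (function names are illustrative) computes the resulting worst-case packet rate and the time available to process each packet at the port speeds listed above.

```python
WIRE_OVERHEAD_BYTES = 8 + 12  # preamble + SFD, plus minimum inter-frame gap


def line_rate_pps(link_gbps: float, frame_bytes: int = 64) -> float:
    """Worst-case packets per second at line rate for a given frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


def ns_per_packet(link_gbps: float, frame_bytes: int = 64) -> float:
    """Time budget per packet, in nanoseconds, at line rate."""
    return 1e9 / line_rate_pps(link_gbps, frame_bytes)


if __name__ == "__main__":
    for speed in (1, 10, 25, 40, 50, 100):
        mpps = line_rate_pps(speed) / 1e6
        print(f"{speed:>3}G: {mpps:7.2f} Mpps, {ns_per_packet(speed):6.2f} ns/packet")
```

At 10G this gives about 14.88 Mpps (67.2 ns per packet); at 100G, about 148.8 Mpps (6.72 ns per packet). On a hypothetical 3 GHz core, 6.72 ns is roughly 20 clock cycles per packet, which is why faster ports outrun per-core CPU gains. Real traffic mixes larger frames, so these figures are a worst case.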

Addressing virtual switching challenges

The best way to address these problems is to offload virtual switching from the server CPUs, and SmartNICs make this possible. A SmartNIC is a network interface card that includes a programmable network processor, which can handle virtual switching and related applications.

In a SmartNIC architecture, virtual switching moves out of the hypervisor on the server and down onto the SmartNIC. As each packet traverses the SmartNIC, it is switched and sent directly across the PCI Express (PCIe) bus into server memory, where it can be processed. The SmartNIC thus eliminates the extra hop through the hypervisor, addressing the latency problem.

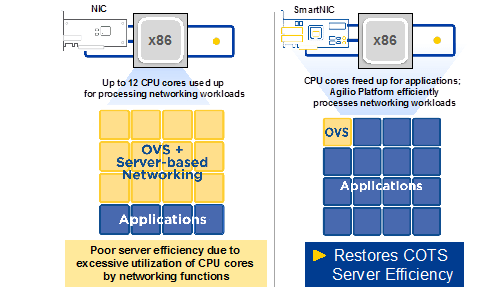
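The switching step on the SmartNIC can be pictured as a match-action lookup: fields from the packet select a rule, and the rule's action says where in host memory the packet should land. The toy Python sketch below is hypothetical throughout. The class and method names, the choice of (VXLAN VNI, inner destination MAC) as the match key, and the idea of one receive queue per VM are illustrative assumptions, not the interface of any particular SmartNIC or virtual switch.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical match key: (VXLAN VNI, inner destination MAC address)
FlowKey = Tuple[int, str]


@dataclass
class Action:
    vm_queue: int  # hypothetical host receive queue mapped to one VM


class NicFlowTable:
    """Toy exact-match table standing in for a SmartNIC switching data plane."""

    def __init__(self) -> None:
        self.rules: Dict[FlowKey, Action] = {}
        self.hits = 0
        self.misses = 0

    def install(self, vni: int, dst_mac: str, vm_queue: int) -> None:
        """Add a rule steering matching packets to a VM's queue."""
        self.rules[(vni, dst_mac.lower())] = Action(vm_queue)

    def switch(self, vni: int, dst_mac: str) -> Optional[int]:
        """Return the destination queue for a packet, or None if no rule matches."""
        action = self.rules.get((vni, dst_mac.lower()))
        if action is None:
            self.misses += 1  # unmatched packet: this sketch only counts it
            return None
        self.hits += 1
        return action.vm_queue  # delivered straight to the VM's queue in host memory


if __name__ == "__main__":
    table = NicFlowTable()
    table.install(5001, "52:54:00:AA:BB:01", vm_queue=3)
    print(table.switch(5001, "52:54:00:aa:bb:01"))  # 3
    print(table.switch(5002, "52:54:00:aa:bb:01"))  # None
```

A hit delivers the packet to its VM without a detour through the hypervisor, which is the point of the offload. How a real SmartNIC handles packets that match no rule is not covered here, so the sketch merely counts them.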

Further, because packets are processed on the SmartNIC, server CPU cores are no longer spent on that processing, so you can reclaim the 12 cores that were handling switching. Figure 1 compares server utilization for virtual switching with and without SmartNICs.

Figure 1: SmartNICs significantly improve server utilization by offloading virtual switch data plane processing from server CPU cores to the SmartNIC. For I/O-intensive NFV applications, this can reclaim more than 50% of the overall server resources.

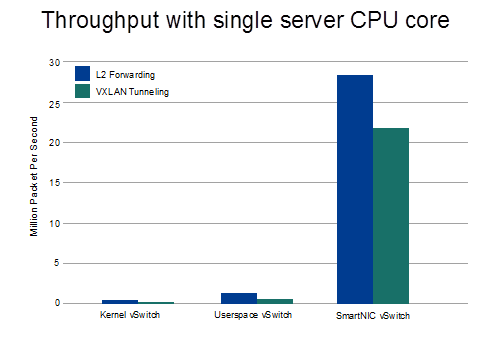
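The server savings follow from simple arithmetic. The Python sketch below uses the 12 switching cores mentioned above and assumes, purely for illustration, 24-core servers and a VNF workload that needs 480 cores in total. The function name and both of those numbers are hypothetical.

```python
import math


def servers_needed(vnf_cores_required: int, cores_per_server: int,
                   switching_cores: int) -> int:
    """Servers required when each server loses some cores to virtual switching."""
    usable = cores_per_server - switching_cores
    if usable <= 0:
        raise ValueError("no cores left for VNFs")
    return math.ceil(vnf_cores_required / usable)


# Hypothetical deployment: 24-core servers, VNFs needing 480 cores in total
without_offload = servers_needed(480, 24, 12)  # 12 cores per server spent on switching
with_offload = servers_needed(480, 24, 0)      # switching moved to the SmartNIC
print(without_offload, with_offload)           # 40 20
```

The model ignores other host overheads and assumes a purely core-bound workload; it only shows why freeing half of each server's cores halves the server count in that case.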

Finally, you remove the potential processing bottleneck, because the SmartNIC can process packets at much higher data rates than servers can. In fact, SmartNICs have been demonstrated to process packets 20 times faster than a server. Figure 2 compares packet processing performance on a server versus a SmartNIC.

Figure 2: SmartNICs outperform servers at packet processing.
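A rough sizing rule ties packet rate to CPU cost: divide the target packet rate by what one core can forward in software. In the Python sketch below, the function name is illustrative and PER_CORE_MPPS = 2.0 is a placeholder assumption, not a measured or vendor figure; substitute a benchmark from your own virtual switch.

```python
import math


def cores_for_line_rate(link_gbps: float, mpps_per_core: float,
                        frame_bytes: int = 64) -> int:
    """Cores a software switch would need to forward minimum-size frames at line rate."""
    # Each frame also occupies 20 bytes of preamble/SFD and inter-frame gap on the wire
    target_pps = link_gbps * 1e9 / ((frame_bytes + 20) * 8)
    return math.ceil(target_pps / (mpps_per_core * 1e6))


# Placeholder per-core software-switch throughput; replace with a measured value
PER_CORE_MPPS = 2.0

if __name__ == "__main__":
    for gbps in (10, 25, 100):
        print(f"{gbps}G: {cores_for_line_rate(gbps, PER_CORE_MPPS)} cores")
```

Whatever the real per-core figure is, the required core count scales linearly with port speed, whereas offloading to the SmartNIC takes that cost off the server entirely.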

As mobile operators scale their NFV deployments on commercial off-the-shelf (COTS) servers, server-related I/O bottlenecks will become a major problem for network performance, latency, and server utilization. SmartNICs address these problems. Mobile operators are testing SmartNICs and beginning early deployments now, and this activity will accelerate in the coming months.