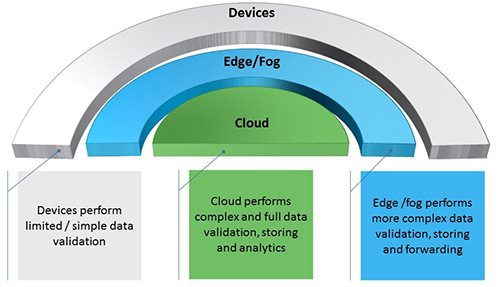

We live in an era of connected and smart devices. With their proliferation, data volumes have grown to new heights. This data travels all the way from the end user to your cloud or on-site data center for processing, storage, and other analytical operations, and that round trip leads to latency and bandwidth issues. As Nati Shalom explained in his “What is Edge Computing?” blog post, edge computing essentially moves processing power to the edge of the network, closer to the source of the data. This gives an organization a significant advantage in how quickly it can access data and in how much bandwidth it consumes.

With edge playing such a crucial role, it is equally important to consider the infrastructure technology upon which the edge workload is running. Let’s evaluate the different options for running edge workloads, and also learn how different public cloud players are running theirs.

Enabling technologies for edge workloads

We have seen a paradigm shift in infrastructure technologies, beginning with physical servers, proceeding to virtual machines (VMs), and now arriving at the latest kid on the block, containers. Although VMs have done a great job over the last decade or so, containers offer inherent advantages over VMs and can be an excellent candidate for running edge workloads.

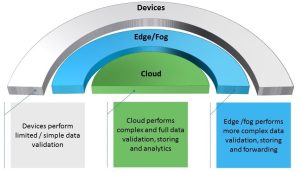

The diagram above describes how containers work when compared with VMs.

Each virtual machine runs its own operating system on top of a shared hypervisor (a software or firmware layer), thereby achieving ‘hardware-level virtualization.’ Conversely, containers run on top of physical infrastructure and share the same kernel, which leads to ‘OS-level virtualization.’

This shared OS keeps the size of containers in the megabyte range, making them extremely ‘light’ and agile and reducing startup time to seconds, compared with several minutes for a VM. Additionally, management tasks for the OS admin (patching, upgrades, etc.) are reduced because containers share the same OS. On the flip side, with containers a single kernel exploit can bring down the entire host. Here a VM is the better alternative, because an attacker would have to get through the host kernel and the hypervisor before reaching the VM kernel.

Today, much research is taking place toward the goal of bringing the power of bare metal to edge workloads. Packet is one such organization that is working toward this unique proposition of fulfilling the demand of low latency and local processing.

Containers on VMs or on Bare Metal?

CenturyLink conducted an interesting study on running Kubernetes clusters on both bare metal and virtual machines. For this test, an open source utility called netperf was used to measure the network latency of both clusters.
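To make the idea of a request/response latency test concrete, here is a minimal sketch in Python. It is not netperf and does not reproduce the CenturyLink setup; it only illustrates the general technique of timing small round trips over a TCP connection. The function names `start_echo_server` and `measure_round_trips` are hypothetical, and the echo server on the loopback interface is an assumption made so the example is self-contained.

```python
import socket
import statistics
import threading
import time


def start_echo_server(host="127.0.0.1"):
    """Start a one-connection TCP echo server on an ephemeral port; return the port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]

    def serve():
        conn, _ = server.accept()
        with conn, server:
            while True:
                data = conn.recv(1024)
                if not data:  # client closed the connection
                    break
                conn.sendall(data)

    threading.Thread(target=serve, daemon=True).start()
    return port


def measure_round_trips(host, port, samples=100, payload=b"x"):
    """Send `samples` small requests and return each round-trip time in microseconds."""
    timings = []
    with socket.create_connection((host, port)) as sock:
        # Disable Nagle's algorithm so small requests are sent immediately.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        for _ in range(samples):
            start = time.perf_counter()
            sock.sendall(payload)
            received = b""
            while len(received) < len(payload):
                chunk = sock.recv(len(payload) - len(received))
                if not chunk:
                    raise ConnectionError("echo server closed the connection")
                received += chunk
            timings.append((time.perf_counter() - start) * 1e6)
    return timings


if __name__ == "__main__":
    port = start_echo_server()
    rtts = measure_round_trips("127.0.0.1", port)
    print(f"median RTT: {statistics.median(rtts):.1f} us")
    print(f"max RTT:    {max(rtts):.1f} us")
```

Running the client against a server inside a pod on each cluster, instead of against loopback, would give a rough like-for-like comparison, though a purpose-built tool such as netperf controls for far more variables than this sketch does.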

Because physical servers carry no hypervisor overhead, the results were as expected. Kubernetes and containers running on bare metal servers achieved significantly lower latency: in fact, three times lower than when running Kubernetes on VMs. Furthermore, CPU consumption was noticeably higher when the cluster ran on VMs than when it ran on bare metal.

Should all edge workloads run on bare metal?

Although enterprise applications such as databases, analytics, machine learning algorithms and other data-intensive applications are ideal candidates for running containers on bare metal, there are some advantages to running containers on VMs. Out-of-the-box capabilities (such as moving a workload from one host to another, rolling back to a previous configuration when something goes wrong, upgrading software, etc.) are more easily achieved with VMs than in a bare metal environment.

So, as explained earlier, containers, being lightweight and quick to start and stop, are an ideal fit for edge workloads. Whether to run them on bare metal or on VMs, however, always involves a tradeoff.

Public clouds and edge workloads

Most of the public clouds, including Microsoft Azure and Amazon, provide Containers as a Service (CaaS). Both are built on top of an existing VM-based infrastructure layer, thereby delivering the portability and agility that edge computing needs.

AWS has also launched ‘Greengrass’ as a software layer that extends cloud-like capabilities to the edge, enabling information to be collected and acted on locally.

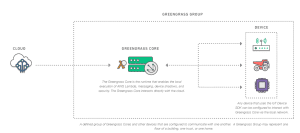

Let’s see how this works.

A Greengrass Group contains two components. The first is the Greengrass core, which handles local execution of AWS Lambda, messaging and security. The second is the set of IoT SDK-enabled devices, which communicate with the Greengrass core over the local network. If the Greengrass core were to lose communication with the cloud, it would nonetheless continue communicating with the other devices locally.
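The offline behavior described above can be illustrated with a toy model. The sketch below is not the AWS Greengrass API; `LocalCore` and all of its methods are hypothetical names invented for this example. It also assumes that cloud-bound messages are buffered while the link is down and forwarded on reconnect, which is an illustrative assumption rather than something the description above states.

```python
from collections import defaultdict, deque


class LocalCore:
    """Toy stand-in for a local hub; NOT the AWS Greengrass API."""

    def __init__(self):
        self.cloud_connected = True
        self.subscribers = defaultdict(list)  # topic -> device callbacks
        self.sent_to_cloud = []               # messages forwarded upstream
        self.pending_for_cloud = deque()      # assumption: buffer while offline

    def subscribe(self, topic, callback):
        """Register a local device callback for a topic."""
        self.subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Local delivery never depends on the cloud link.
        for callback in self.subscribers[topic]:
            callback(message)
        # Upstream forwarding is buffered and only flushed while connected.
        self.pending_for_cloud.append((topic, message))
        if self.cloud_connected:
            self.flush()

    def flush(self):
        """Forward every buffered message to the (simulated) cloud."""
        while self.pending_for_cloud:
            self.sent_to_cloud.append(self.pending_for_cloud.popleft())

    def reconnect(self):
        """Simulate the cloud link coming back and drain the buffer."""
        self.cloud_connected = True
        self.flush()


if __name__ == "__main__":
    core = LocalCore()
    fan_inbox = []
    core.subscribe("temperature", fan_inbox.append)

    core.publish("temperature", 21.5)  # cloud link is up
    core.cloud_connected = False       # simulate losing the cloud
    core.publish("temperature", 30.0)  # still delivered locally

    print(fan_inbox)                   # [21.5, 30.0]
    print(len(core.sent_to_cloud))     # 1
    core.reconnect()
    print(len(core.sent_to_cloud))     # 2
```

The point of the design is that the local delivery path and the upstream path are decoupled, so losing the cloud only delays what goes upstream.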

Enterprise adoption and challenges involved

Because of the speed, density and agility that containers provide, they are one of the hottest technologies under serious investigation. However, security may be an obstacle to enterprise adoption of edge workloads on containers. Two of the major issues are:

- Denial of service: One application may start consuming most of the OS resources, depriving the others of the bare minimum they need to keep operating and ultimately forcing a shutdown of the OS.

- Kernel exploits: Containers share the same kernel, so an attacker who gains access to the host OS also gains access to all the applications running on that host.

The way forward: latest developments

Among the various developments in infrastructure technologies, Hyper, a New York-based startup, is trying to provide the best of both the VM and container worlds. With HyperContainers (as Hyper calls them) we see a convergence between the two. They provide the speed and agility of containers, i.e., the ability to launch an instance in less than a second with a minimal resource footprint. At the same time, they provide the security and isolation of a VM, i.e., protection against the shared-kernel issues of containers through hardware-enforced isolation.

Conclusion

Ultimately, we can agree that an enterprise should ideally mix and match technologies to suit the edge workload involved, keeping the desired result in mind. This applies whether you want just local processing or local analytics (or a combination of the two), or whether you are running a machine learning workload or an enterprise database workload. This is much easier said than done. A proof of concept that runs the actual workload against the various technology options can help in making the final call.