Deep learning is a machine learning method that uses multiple layers of nonlinear processing units in cascade. It can be supervised, for things like classification, or unsupervised, for pattern analysis and more. IBM’s Romeo Kienzler prefers to use the term Cognitive System: “A cognitive system,” he says, “provides a set of technological capabilities such as artificial intelligence (AI), natural language processing, machine learning, and advanced machine learning to help with analyzing all that data. Cognitive systems can learn and interact naturally with humans to gather insights from data and help you to make better decisions.” In a previous article, Kienzler stated that “cognitive computing is not just human-computer interaction (HCI), it is advanced machine learning driven by powerful algorithms (models) and nearly unlimited data processing capabilities.”

Where is deep learning used?

Deep learning is used in vision analysis for search and information extraction, security and video surveillance, and self-driving cars and robotics. It is used in speech analysis, including interactive voice response systems and voice interfaces in mobile devices, cars, gaming and the home. It can also be found in healthcare and in tools that help people with disabilities. In text analysis it supports search and ranking, sentiment analysis, machine translation and question answering, and it appears in applications such as recommendation engines, advertising, fraud detection, AI, drug discovery, sensor data analysis, and diagnostic support.

Obstacles to implementation

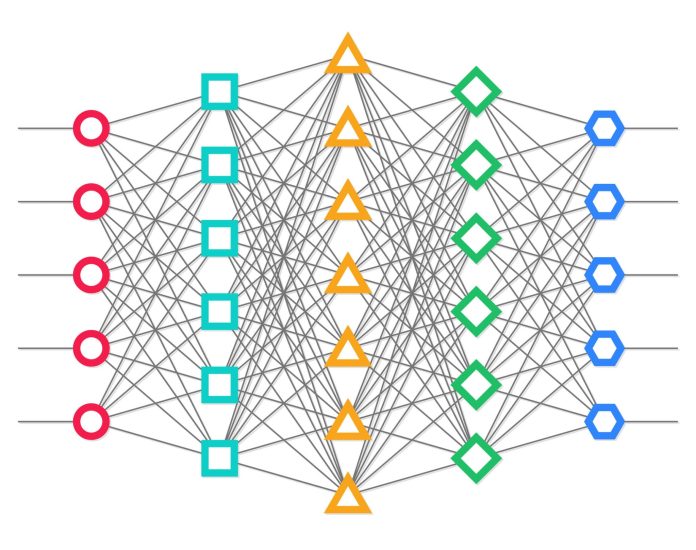

In a previous article, I said that a neural network involves three logical layers — the input layer, which accepts incoming data, the output layer, which presents results, and a hidden layer in between. “The main difference between a neural network and a deep learning one,” says Frank Lee, Co-founder and CEO of Deep Photon, Inc., “is the addition of multiple neural layers. The most obvious example of how deep learning is outperforming traditional machine learning is with image recognition. Every state-of-the-art system uses a special type of deep learning neural network (called a convolution neural network) to perform their tasks.” The workflow for deep learning, Lee continues, involves three basic steps: data gathering and preparation (including importing, cleaning and labeling), training and deployment.
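The layer structure described above can be sketched in a few lines of plain Python. This is only an illustration under assumptions that do not come from Lee: the `tanh` activation, the random weights, the layer sizes and the helper names (`dense`, `make_layer`, `forward`) are all hypothetical. It shows that a "deep" network differs from a shallow one only in how many hidden layers sit between input and output.

```python
import math
import random

def dense(inputs, weights, biases):
    """One fully connected layer followed by a tanh nonlinearity."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for a layer of n_out nodes (illustrative only)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    """Pass the input through every layer in order; the last layer is the output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

rng = random.Random(0)

# Input of size 4 -> one hidden layer of 8 nodes -> output of size 2.
shallow = [make_layer(4, 8, rng), make_layer(8, 2, rng)]

# Same input and output sizes, but three hidden layers instead of one.
deep = [
    make_layer(4, 8, rng),
    make_layer(8, 8, rng),
    make_layer(8, 8, rng),
    make_layer(8, 2, rng),
]

sample = [0.5, -0.2, 0.1, 0.9]
shallow_out = forward(sample, shallow)
deep_out = forward(sample, deep)
```

Training, the second step in Lee's workflow, is left out here; in practice the weights would be learned from labeled data rather than drawn at random.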

Neural networks require huge amounts of data and enormous computing power. IoT devices certainly generate those huge amounts of data, and the cloud provides enormous computing power. But, as Liran Bar of CEVA points out, this does not work so well when time is important: “Mission-critical use cases, like self-driving vehicles and industrial robots, make use of [deep neural networks] for their ability to recognize objects in real time and improve situational awareness. But issues of latency, bandwidth and network availability are not good fits with cloud computing. In these scenarios, implementers cannot afford the risk of the cloud failing to respond in a real-time situation.” Bar also points out that consumers’ worries about privacy (your devices are listening to you and sending what they hear to the cloud) mean that much of the data storage and computational load must be moved to the edge devices. The question is how to do it.

One way to make neural networks practical in IoT and mobile applications, says Dan Sullivan of New Relic, Inc., is to reduce the computational load. One method for doing this, he explains, is network compression: reducing the number of nodes in a neural network, which, when used judiciously, can reduce computational load by a factor of 10 without a serious impact on accuracy.
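As a rough illustration of removing nodes from a network, the sketch below drops the hidden nodes whose incoming weights are smallest in absolute value. The selection rule (L1 norm of incoming weights) and the names `prune_nodes` and `drop_inputs` are assumptions made for this example, not Sullivan's method; real compression schemes typically use more careful criteria and retrain the network afterward.

```python
def prune_nodes(weights, biases, keep_fraction):
    """Keep only the nodes whose incoming weights have the largest L1 norm.

    weights[i] holds the incoming weights of node i. Returns the pruned
    weights, the pruned biases, and the indices of the nodes that were kept.
    """
    n_keep = max(1, round(len(weights) * keep_fraction))
    scores = [sum(abs(w) for w in row) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:n_keep])
    return [weights[i] for i in kept], [biases[i] for i in kept], kept

def drop_inputs(next_weights, kept):
    """Remove the next layer's weights that were attached to pruned nodes."""
    return [[row[i] for i in kept] for row in next_weights]

# A hidden layer with four nodes, two of which barely contribute (toy values).
hidden_weights = [[0.9, -0.8], [0.01, 0.02], [0.5, 0.4], [-0.03, 0.0]]
hidden_biases = [0.1, 0.0, -0.2, 0.0]
output_weights = [[1.0, 2.0, 3.0, 4.0]]

pruned_weights, pruned_biases, kept = prune_nodes(hidden_weights, hidden_biases, 0.5)
pruned_output_weights = drop_inputs(output_weights, kept)
```

Halving the hidden layer here halves the multiplications in both the hidden and the output layer, which is where the savings in computational load come from.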

“Another approach to reducing computational load,” Sullivan continues, “is to approximate, rather than exactly compute, the values of nodes with the least effect on model accuracy.” This is done by cutting the number of bits per node — a method that can work in many cases, but not where high precision is needed. He cautions that one can take approximate computing and network compression only so far before accuracy becomes unacceptable.
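One common way to cut the number of bits per value is uniform quantization, sketched below. This is an assumption about how the idea might be realized, not a description of Sullivan's approach: it quantizes every value the same way rather than targeting only the least influential nodes, and the function names are hypothetical.

```python
def quantize(values, bits):
    """Map floats onto 2**bits evenly spaced levels between min and max."""
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale

def dequantize(codes, lo, scale):
    """Recover approximate floats from the integer codes."""
    return [lo + c * scale for c in codes]

def max_error(original, approx):
    """Largest absolute difference between original and approximated values."""
    return max(abs(a - b) for a, b in zip(original, approx))

weights = [-1.0, -0.25, 0.0, 0.3, 1.0]  # toy values for illustration

codes8, lo8, scale8 = quantize(weights, 8)
error8 = max_error(weights, dequantize(codes8, lo8, scale8))

codes2, lo2, scale2 = quantize(weights, 2)
error2 = max_error(weights, dequantize(codes2, lo2, scale2))
```

Comparing `error8` with `error2` shows the trade-off: fewer bits mean smaller storage and cheaper arithmetic, but a larger approximation error.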

A third method, says Sullivan, is specialized accelerator hardware. Multiple companies, including Bar’s CEVA and Nvidia, are working to apply vector processing chips and graphics processing units (GPUs), with their multiple processor cores and large, quickly accessible memory, to this problem. GPUs, says Lee, with their “…hundreds of compute cores that support a large number of hardware threads and high throughput floating point computations…,” are especially well suited for training DL models, and, he adds, Nvidia developed its widely used Compute Unified Device Architecture (CUDA) programming framework for that purpose.

In February ARM announced a new machine learning platform called Project Trillium. Intended for edge processing, the platform includes a new machine learning processor targeting mobile and adjacent markets, a new real-time object detection processor for use with smart cameras, and a software development kit to go with them. In March Nvidia and ARM announced a partnership to integrate Nvidia’s Deep Learning Accelerator (NVDLA) architecture into the Project Trillium platform.

Business Insider’s Nicholas Shields suggests that this “should help Nvidia power millions of very small IoT devices like smart meters or embedded sensors.” It is also likely, he adds, to motivate companies like Intel to move more aggressively in that direction.

Yet, while all this specialized hardware can improve performance and reduce energy consumption, Sullivan points out, it is expensive, relegating its use to high-value applications. How long this will be an obstacle remains to be seen.

Like other technology, deep learning will gradually migrate from the cloud to the edge and become more and more ubiquitous. It will be interesting to see what comes up in the next few years.