Autonomous driving has two basic rules. Rule number 1: Do no harm. Rule number 2: Don’t get hurt.

Forget about insurance companies’ claims that autonomous driving is safer and will lower insurance rates. When people hear about an autonomous car running into a lamp post or killing a pedestrian, it scares the daylights out of them. One of the key questions for autonomous driving is whether sensors can replace human eyes and senses. How do sensors detect objects?

The technologies currently under test include radar, LiDAR and laser scanners, regular and 3D cameras, and ultrasonic sonar. Reliable and safe autonomous driving depends mainly on how well these sensors perform and how their data is interpreted.

Safe autonomous driving in sight with sensors

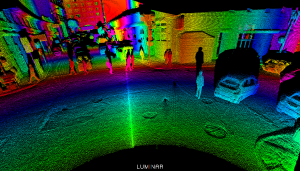

Radar is so common that few people remember its name is an acronym for RAdio Detection And Ranging. It emits high-frequency radio waves; when the waves bounce off an object and return, the radar calculates the distance to that object from the echo. This type of sensor helps the vehicle determine what objects are in its surroundings. LiDAR (Light Detection And Ranging) and laser scanners are newer technologies that measure range with laser light instead of radio waves. By sweeping the beam across the scene, they also build an image of the car’s surroundings.
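Radar and LiDAR share the same basic ranging idea: time how long a pulse takes to return, multiply by the wave’s speed, and halve the result because the pulse travels out and back. The minimal Python sketch below assumes a simple pulsed time-of-flight model; many automotive radars actually use frequency-modulated continuous-wave (FMCW) signals and infer range from a frequency shift, so treat this as an illustration only. The function names and the `(azimuth, round-trip time)` scan format are hypothetical. The sketch also shows how sweeping a LiDAR beam through known angles turns individual ranges into a 2D point “image”; ultrasonic sonar uses the same arithmetic with the speed of sound in place of the speed of light.

```python
import math

# Hypothetical helpers for illustration; not any sensor vendor's API.
SPEED_OF_LIGHT_M_S = 299_792_458.0  # vacuum value; in air it is about 0.03% lower
SPEED_OF_SOUND_M_S = 343.0          # dry air at roughly 20 °C (for ultrasonic sonar)


def range_from_round_trip(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to the target. The pulse travels out and back, so halve the path."""
    return wave_speed_m_s * round_trip_s / 2.0


def scan_to_points(scan):
    """Turn LiDAR returns given as (azimuth_deg, round_trip_s) pairs into 2D points
    (x forward, y to the left of the sensor, in metres)."""
    points = []
    for azimuth_deg, round_trip_s in scan:
        r = range_from_round_trip(round_trip_s, SPEED_OF_LIGHT_M_S)
        theta = math.radians(azimuth_deg)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # A light echo after 200 ns means the object is about 30 m away.
    print(round(range_from_round_trip(200e-9, SPEED_OF_LIGHT_M_S), 2))  # 29.98
    # An ultrasonic echo after 6 ms means the object is about 1 m away.
    print(round(range_from_round_trip(0.006, SPEED_OF_SOUND_M_S), 3))   # 1.029
    # Two returns: one straight ahead, one directly to the left.
    print(scan_to_points([(0.0, 200e-9), (90.0, 100e-9)]))
```

The enormous speed of light is why LiDAR and radar timing must resolve nanoseconds, while ultrasonic sensors work in milliseconds and are therefore suited to short distances.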

Many start-ups are introducing solutions based on these technologies. One of the leaders, Luminar, has formed partnerships with Toyota and Volvo, and its technology appears to be ahead of the competition. Using a 1550 nm laser and an InGaAs (indium gallium arsenide) design, its system is claimed to offer 50 times the resolution and ten times the range of other LiDAR solutions. It can detect hard-to-see, low-reflectivity objects such as a black car or a tire even at 75 mph. This matters because earlier accidents involving autonomous driving occurred when the system failed to distinguish a truck from the sky behind it.

Additionally, regular and 3D cameras are used to detect objects surrounding the vehicle, and ultrasonic sonar measures the distance to nearby objects, such as another vehicle just ahead or alongside. Another new technology, vehicle-to-vehicle (V2V) communication, is a joint project of the U.S. Department of Transportation and the National Highway Traffic Safety Administration (NHTSA). V2V is a communication protocol that lets vehicles exchange information with each other, so a vehicle can rely not only on its own sensors but also on those of other vehicles. For example, when a vehicle 100 yards ahead detects an accident and comes to a sudden stop, V2V would alert the cars behind it so that they can all slow down in time to avoid a collision.
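The V2V example comes down to reaction time. A minimal Python sketch, assuming constant deceleration and a car ahead that stops almost instantly (the worst case), compares the distance a following car needs to stop with the 100-yard gap it has. Every number here (65 mph, 7 m/s² braking, a 1.5 s human reaction versus a 0.1 s alert latency) is an illustrative assumption rather than a measured value, the function names are hypothetical, and the V2V messaging itself is not modelled.

```python
# Illustrative stopping-distance check; all parameter values are assumptions.
MPH_TO_M_S = 0.44704   # exact conversion: 1 mph = 0.44704 m/s
YARD_TO_M = 0.9144     # exact conversion: 1 yard = 0.9144 m


def stopping_distance_m(speed_m_s: float, delay_s: float, decel_m_s2: float) -> float:
    """Distance travelled before braking starts (speed * delay) plus the
    braking distance v^2 / (2a) under constant deceleration."""
    return speed_m_s * delay_s + speed_m_s ** 2 / (2.0 * decel_m_s2)


def can_stop_in_time(gap_m: float, speed_m_s: float, delay_s: float, decel_m_s2: float) -> bool:
    """True if the following car stops within the gap, assuming the car ahead
    stops almost instantly (worst case, e.g. it has hit an obstacle)."""
    return stopping_distance_m(speed_m_s, delay_s, decel_m_s2) <= gap_m


if __name__ == "__main__":
    gap = 100 * YARD_TO_M        # the 100-yard gap from the example, about 91.4 m
    speed = 65 * MPH_TO_M_S      # assumed cruising speed, about 29.1 m/s
    decel = 7.0                  # assumed hard braking on dry pavement, m/s^2

    # Assumed 1.5 s for a driver to notice the stop and hit the brakes.
    print(can_stop_in_time(gap, speed, 1.5, decel))   # False (needs about 104 m)
    # Assumed 0.1 s for a V2V alert to arrive and trigger automatic braking.
    print(can_stop_in_time(gap, speed, 0.1, decel))   # True (needs about 63 m)
```

Under these assumptions the braking distance is the same in both cases; the difference comes entirely from the distance covered before braking begins, which is exactly what an early V2V alert shortens.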

Conclusion

Overall, sensors are the most critical component of autonomous driving because of the nature of the application: a single missed detection can injure or kill someone. So many factors affect how a vehicle must be driven that replacing human sight and senses is easier said than done. When turning at an intersection, an experienced driver can see the lane lines fairly easily even when the paint has faded; for a driverless car, the same task is much more difficult.

Achieving reliable and safe “driving without drivers” will require more and better sensors than those described above. Current sensor-based innovations include advanced GPS, accelerometers, gyroscopes, and 360-degree augmented-reality displays that give a bird’s-eye view of the vehicle. While we are still quite a few years away from safe, fully autonomous driving, using what has been developed so far to support advanced driver-assistance systems (ADAS) is the logical next step, and one that will provide immediate benefits.