Distributing machine learning is key to latency-sensitive applications

Today, the vast majority of machine learning algorithms are run in centralized cloud computing facilities. But as 5G opens up a new set of latency-sensitive applications, doing machine learning just in the cloud won’t be enough, which makes the case for moving some of those processes out of the cloud and down to the edge.

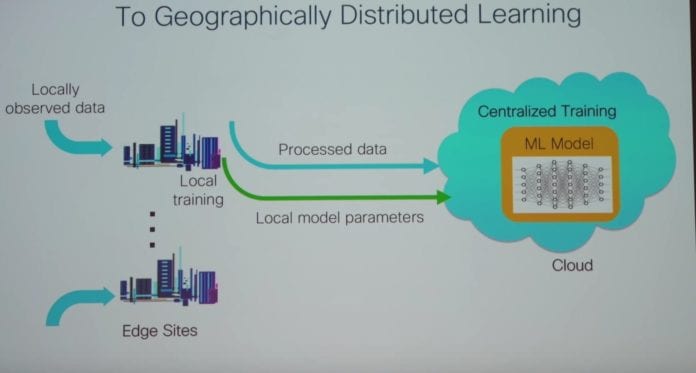

In a presentation last week at the Texas Wireless Summit, Xiaoqing Zhu of Cisco’s Innovation Labs highlighted some of the challenges current architectural models present for machine learning: privacy and security, bandwidth limitations for transmitting data to the cloud, and the latency problem noted above.

Machine learning is, at its core, a process of training and inference. Consider a software program meant to identify what appears in photographs. First the program needs to learn by looking at a huge store of labeled images; that’s training. Once training is complete, the program can use what it has learned to infer what it’s looking at when shown an image it hasn’t seen before; that’s inference.
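To make the training/inference split concrete, here is a minimal Python sketch. It is a toy, not the method Zhu described: each "image" is reduced to two made-up numeric features, and the model is a simple nearest-centroid classifier. The function names `train` and `infer`, the labels, and the feature values are all assumptions chosen for illustration.

```python
import math
from collections import defaultdict


def train(examples):
    """Training: learn one centroid (mean feature vector) per label."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    # Divide each accumulated sum by the number of examples for that label.
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def infer(model, features):
    """Inference: label an unseen input by its nearest learned centroid."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Hypothetical two-number "image" features (say, brightness and edge density).
training_data = [
    ([0.9, 0.1], "sky"),
    ([0.8, 0.2], "sky"),
    ([0.2, 0.9], "forest"),
    ([0.1, 0.8], "forest"),
]

model = train(training_data)             # the expensive, data-hungry step
prediction = infer(model, [0.7, 0.3])    # the cheap, per-request step
print(prediction)
```

The point of the sketch is the asymmetry: `train` needs the whole dataset, while `infer` needs only the small learned `model`, which is why inference is the natural candidate to move closer to the device.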

Today, “Machine learning is really being carried out by the user devices,” Zhu said. “Training data is being gathered to be collected in the cloud and facilitate centralized training. Both the training and the inference run in the cloud.”

She continued: “This may not be suitable for some of the latency-sensitive applications. Of course, if we can support local inference, that problem can be solved separately. We instead are starting to consider…an augmented version of the architecture where we can leverage some of the intelligence deployed at the edge to augment cloud-based machine learning.”

Think about a remote mining site kitted out with sensors that track environmental and other conditions. If the goal of the sensors is, in part, to alert workers to unsafe conditions, that decision-making process needs to happen in as close to real time as possible. So, instead of streaming all of the collected data back to a cloud data center somewhere for analysis, intelligence at the edge could not only pass on vital information more quickly but also save on data transmission costs.
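The mining scenario can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not a description of any real deployment: the `EdgeNode` class, the 50 ppm gas threshold, the batch size, and the `uplink` list that stands in for messages sent to the cloud are all hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean

GAS_PPM_LIMIT = 50.0  # hypothetical safety threshold, for illustration only


@dataclass
class EdgeNode:
    """Toy edge node: decides locally, sends only summaries upstream."""
    limit: float = GAS_PPM_LIMIT
    batch_size: int = 5
    alerts: list = field(default_factory=list)   # raised on site, no round trip
    uplink: list = field(default_factory=list)   # stand-in for cloud messages
    _buffer: list = field(default_factory=list, repr=False)

    def ingest(self, reading: float) -> None:
        # Local decision: alert immediately, without waiting on the cloud.
        if reading > self.limit:
            self.alerts.append(reading)
        # Buffer raw readings and forward one compact summary per batch.
        self._buffer.append(reading)
        if len(self._buffer) >= self.batch_size:
            self.uplink.append({
                "count": len(self._buffer),
                "mean": mean(self._buffer),
                "max": max(self._buffer),
            })
            self._buffer.clear()


node = EdgeNode()
for ppm in [12.0, 14.0, 61.0, 13.0, 15.0, 11.0]:
    node.ingest(ppm)

print(node.alerts)       # readings that triggered an on-site alert
print(len(node.uplink))  # summaries sent upstream instead of raw readings
```

In this sketch six raw readings produce a single upstream summary, while the unsafe reading triggers an alert as soon as it arrives. That is the trade the paragraph above describes: faster local decisions and less data sent over the link.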