When Gordon Moore predicted that the number of transistors on a chip would keep doubling at a regular pace, a prediction popularly quoted as a doubling every 18 months, he had no idea how long this state would endure nor what technologies would bring about its demise. All good things must come to an end, including Moore’s Law, but that doesn’t mean other good things won’t rise up to fill the gap. Something needs to, since many of today’s technologies depend on continued increases in server CPU performance.

A Gradual Decline

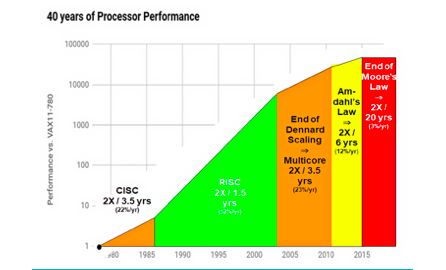

It is only lately that organizations are noticing a slowdown in CPU processing power, but it has been coming for some time, with various activities prolonging the performance curve. It is not just Moore’s Law that is coming to an end with respect to processor performance but also Dennard Scaling and Amdahl’s Law. This graph shows processor performance over the last 40 years and the decline of these laws:

The performance gains popularly associated with Moore’s Law took off with RISC computing, which came on the scene in the 1980s. Processor performance did, indeed, double every 18 months. As the limits of clock frequency per chip began to appear and Dennard scaling broke down, multicore CPUs helped prolong the performance curve. Even so, it is important to note that by the start of the century we were no longer on the Moore’s Law curve, and a doubling of performance took 3.5 years during this time.

On the heels of the fall of Moore’s Law and Dennard Scaling comes Amdahl’s Law, which refers to the limits of performance improvement that can be achieved with parallel processing. While parallelizing the execution of a process can provide an initial performance boost, there will always be a natural limit, as there are some execution tasks that cannot be parallelized.
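
Amdahl’s Law is usually written as speedup = 1 / ((1 - p) + p / n), where p is the fraction of the work that can be parallelized and n is the number of cores. The short Python sketch below illustrates the ceiling this creates. The function name and the 95 percent parallel fraction are illustrative assumptions, not figures taken from the graph.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup under Amdahl's Law.

    parallel_fraction: share of the work that can run in parallel (0.0 to 1.0).
    cores: number of processors the parallel share is spread across.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 95 percent of the work can be parallelized.
for cores in (1, 2, 4, 16, 64, 1024):
    print(f"{cores:>5} cores -> {amdahl_speedup(0.95, cores):.2f}x speedup")
```

As n grows, the speedup approaches 1 / (1 - p), so a workload that is 95 percent parallel can never run more than 20 times faster, however many cores are added.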

These limits have recently come into effect: the benefit of adding more CPU cores is diminishing, which stretches the time between performance improvements even further. The prediction, as can be seen in the graph, is that it will now take 20 years for CPU processing performance to double. Hence, Moore’s Law is dead.

Expectations Must Be Adjusted

People may lament the passing of Moore’s Law as the end of an era, but beyond nostalgia, it creates a significant concern. Why? Entire industries have been built on the premise of this prediction and the continued expectation of constant processing performance improvement.

It was assumed, for instance, that the processing needs of future software would be served by processing power that increased in line with data growth. As a result, efficiency in software architecture and design has been treated as a lower priority. In fact, there is an ever-increasing use of software abstraction layers that make programming and scripting more user-friendly, but at the cost of processing power.

One abstraction that creates an additional processing cost is virtualization. On one hand, virtualization makes more efficient use of hardware resources. On the other hand, relying on server CPUs as generic processors for both virtualized software execution and the processing of input/output data places a considerable burden on them.

Cloud vendors have been living with this fallout. The cloud industry was founded on the premise that standard Commercial-Off-The-Shelf (COTS) servers are powerful enough to process any type of computing workload. Using virtualization, containerization and other abstractions, it is possible to share server resources amongst multiple tenant clients with “as-a-service” models.

Inspired by this successful model, telecom operators are working to replicate the approach in their networks with initiatives such as SDN, NFV and cloud-native computing. The underlying business assumption, however, is that as the number of clients and the volume of work increase, it is enough to simply add more servers.

Yet server processing performance is projected to grow by only three percent per year over the next 20 years, as the earlier graph illustrated. This is far below the projected need, since the amount of data to be processed is expected to triple over the next five years.
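
The gap between these two growth rates can be made concrete with a little compound-growth arithmetic. The following is a minimal Python sketch. The function names are hypothetical, annual compounding is an assumption, and the only inputs are the figures quoted above (three percent per year for server performance, data tripling in five years).

```python
import math


def annual_rate_from_multiple(multiple: float, years: float) -> float:
    """Compound annual growth rate that yields `multiple` after `years`."""
    return multiple ** (1.0 / years) - 1.0


def years_to_multiply(annual_rate: float, multiple: float = 2.0) -> float:
    """Years of compound growth at `annual_rate` needed to reach `multiple`."""
    return math.log(multiple) / math.log(1.0 + annual_rate)


def growth_after(annual_rate: float, years: float) -> float:
    """Total growth factor after `years` of compounding at `annual_rate`."""
    return (1.0 + annual_rate) ** years


# Figures quoted in the article, assumed here to compound annually.
CPU_RATE = 0.03          # server performance growth per year
DATA_MULTIPLE = 3.0      # data volume triples...
DATA_YEARS = 5.0         # ...over five years

data_rate = annual_rate_from_multiple(DATA_MULTIPLE, DATA_YEARS)
cpu_growth = growth_after(CPU_RATE, DATA_YEARS)
shortfall = DATA_MULTIPLE / cpu_growth

print(f"Implied data growth per year: {data_rate:.1%}")
print(f"CPU growth factor over {DATA_YEARS:.0f} years: {cpu_growth:.2f}x")
print(f"Data outgrows CPU performance by a factor of {shortfall:.2f}")
print(f"Years for CPU performance to double: {years_to_multiply(CPU_RATE):.1f}")
```

Under these assumptions, data volume compounds at roughly 25 percent per year against 3 percent for server performance, and a 3 percent rate takes a little over 23 years to double, in the same range as the 20 years cited above.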

Adding Processing Power: Hardware Acceleration

If processor performance has been slowing down, why has it taken so long to notice? For instance, cloud companies seem to be succeeding without any signs of performance issues. The answer is hardware acceleration.

The pragmatism that made cloud companies successful also shaped their reaction to the death of Moore’s Law. If server CPU performance would not increase as expected, they would need to add processing power some other way. In other words, they needed to accelerate the server hardware.

One way to address the end of Moore’s Law is Domain-Specific Architectures (DSAs), purpose-built processors that accelerate a few application-specific tasks. The idea is that instead of using general-purpose processors like CPUs to handle a multitude of tasks, different kinds of processors are tailored to the needs of specific tasks.

An example is the Tensor Processing Unit (TPU) chip built by Google for deep neural network inference. The TPU was built specifically for this task, and since the task is central to Google’s business, it makes perfect sense to offload it to a dedicated chip.

Cloud companies use several kinds of acceleration technology to handle their massive workloads. One option is Graphics Processing Units (GPUs), which have for some time been adapted to support a large variety of applications. Network Processing Units (NPUs) have also been widely used for networking. Both provide a large number of smaller processors, so a workload can be broken down and run in parallel across them.

The second half of this article will discuss how an older technology, the field-programmable gate array (FPGA), is coming to the forefront as a key means of hardware acceleration and a potential solution to the death of Moore’s Law.

Daniel Joseph Barry is VP Strategy and Market Development at Napatech and has over 25 years’ experience in the IT/Telecom industry. He has an MBA and a BSc degree in Electronic Engineering from Trinity College Dublin.