Communications in high-mobility environments, such as high-speed rail, are well known to suffer from severe Doppler spread, which degrades the performance of the orthogonal frequency division multiplexing (OFDM) modulation widely adopted in current 4G and 5G networks. This issue was a topic at the IEEE workshop in June, which also addressed how a new waveform, OTFS (Orthogonal Time Frequency Space), a candidate for 6G network development, may be an effective solution.

But another question remains: is there a “fix” that can be applied to current 4G and 5G OFDM-based networks, through the use of MU-MIMO, to help mitigate Doppler issues in high-speed communications?

Distorted reflections

Communication at high speed is governed by very simple physics. The fundamental observation is that the wireless medium is a collection of reflectors, some moving and others static. The transmitted electromagnetic wave propagates through the air (the medium in this case) and bounces off each of the reflectors, so the received signal is a superposition of all these reflections.

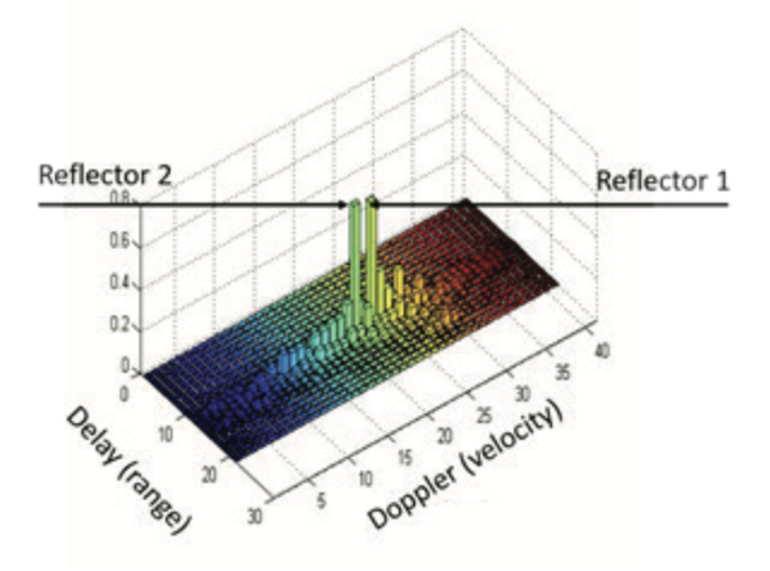

Mathematically speaking, every reflector introduces a small distortion to the propagating wave: a combination of a time delay and a Doppler shift. Hence, the overall channel is specified by the delay-Doppler characteristics of its constituent reflectors.
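A standard way to write this (the notation is ours, not the article's): with $P$ reflectors, where reflector $i$ has complex gain $h_i$, delay $\tau_i$ and Doppler shift $\nu_i$, a transmitted signal $s(t)$ arrives as $r(t)$ below (noise omitted), and the whole channel is captured by the sparse delay-Doppler spreading function $h(\tau,\nu)$. Each Doppler shift follows from the reflector's radial velocity $v_i$, the carrier frequency $f_c$ and the speed of light $c$.

```latex
\begin{align*}
r(t) &= \sum_{i=1}^{P} h_i \, s(t-\tau_i)\, e^{j 2\pi \nu_i (t-\tau_i)}
      = \iint h(\tau,\nu)\, s(t-\tau)\, e^{j 2\pi \nu (t-\tau)} \, d\tau \, d\nu, \\
h(\tau,\nu) &= \sum_{i=1}^{P} h_i \, \delta(\tau-\tau_i)\, \delta(\nu-\nu_i),
\qquad \nu_i = \frac{v_i f_c}{c}.
\end{align*}
```

Substituting $h(\tau,\nu)$ into the double integral recovers the sum term by term, which is why a handful of $(h_i, \tau_i, \nu_i)$ triples is enough to describe the channel.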

Delay and Doppler variables are commonly used in radar to represent and separate moving targets by their delay (range) and Doppler (velocity) attributes. In communication networks, they give rise to the delay-Doppler channel representation, in which the channel is specified as a superposition of time and frequency shift operations that mirrors the geometry of the reflectors. Probably the most important property of this representation is that it changes far more slowly than the rapid phase changes experienced in the traditional time-frequency channel representation (see Fig 1). This “slowing down” of the channel aging process has important implications for network functions such as MU-MIMO.

Fig 1: Delay Doppler Impulse Response
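To make the “slowing down” concrete, the sketch below evaluates the time-frequency channel $H(t,f)=\sum_i h_i e^{j2\pi(\nu_i t - f\tau_i)}$ (constant phase terms folded into $h_i$) for a hypothetical three-reflector channel at an assumed 3.5 GHz carrier. The reflector list, speeds and carrier are illustrative assumptions only. The three (gain, delay, Doppler) triples never change, yet within one 0.5 ms slot (5G NR at 30 kHz subcarrier spacing) the line-of-sight path closing at about 300 km/h rotates by roughly 175 degrees, and the channel magnitude on a given subcarrier changes substantially.

```python
import numpy as np

C = 299_792_458.0  # speed of light, m/s
FC = 3.5e9         # assumed carrier frequency, Hz

# Hypothetical reflectors: (complex gain, delay in s, radial speed in m/s).
PATHS = [
    (1.0 + 0.0j, 0.0, 83.3),      # line-of-sight path, closing at ~300 km/h
    (0.5 + 0.2j, 0.7e-6, -40.0),  # moving reflector, receding
    (0.3 - 0.1j, 1.9e-6, 0.0),    # static reflector
]

def doppler_shift(radial_speed_mps, fc_hz=FC):
    """Doppler shift nu = v * f_c / c."""
    return radial_speed_mps * fc_hz / C

def tf_channel(t, f, paths=PATHS):
    """Time-frequency channel H(t, f) = sum_i h_i exp(j 2 pi (nu_i t - f tau_i)),
    with f the subcarrier offset from the carrier in Hz."""
    return sum(
        h * np.exp(2j * np.pi * (doppler_shift(v) * t - f * tau))
        for h, tau, v in paths
    )

SLOT = 0.5e-3  # one 5G NR slot at 30 kHz subcarrier spacing, s
los_rotation_deg = np.degrees(2 * np.pi * doppler_shift(PATHS[0][2]) * SLOT)

print(f"LOS Doppler shift: {doppler_shift(PATHS[0][2]):.1f} Hz")
print(f"LOS phase rotation per slot: {los_rotation_deg:.1f} deg")
print(f"|H(0, 0)| = {abs(tf_channel(0.0, 0.0)):.3f}, "
      f"|H(slot, 0)| = {abs(tf_channel(SLOT, 0.0)):.3f}")
```

The geometric parameters in `PATHS` are the slowly aging quantities; `tf_channel` is what an OFDM receiver must re-estimate as it drifts.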

In summary, the delay-Doppler channel representation yields a reliable geometric channel model that can be accurately learned and predicted in both time and frequency under high mobility. When incorporated into an MU-MIMO architecture, it enables intelligent user pairing and SNR prediction, resulting in improved spectral utilization and performance for any waveform.
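A minimal sketch of how a learned geometric model could feed MU-MIMO decisions, assuming each user's paths are already estimated as (gain, delay, Doppler, angle of departure) and the base station uses a half-wavelength uniform linear array. The function names, the array model and the two single-path users are hypothetical. The sketch predicts each user's channel vector 20 ms ahead, scores a candidate pair by normalized channel correlation (a common pairing metric, standing in for whatever a commercial scheduler actually uses), and predicts per-user SNR under zero-forcing precoding.

```python
import numpy as np

def predict_channel(paths, n_antennas, t, f=0.0):
    """Predicted downlink channel vector at time t (s) and subcarrier offset f (Hz).

    paths: iterable of (gain, delay_s, doppler_hz, angle_rad) for one user.
    Assumes a half-wavelength uniform linear array (hypothetical array model).
    """
    m = np.arange(n_antennas)
    h = np.zeros(n_antennas, dtype=complex)
    for gain, tau, nu, theta in paths:
        steering = np.exp(1j * np.pi * m * np.sin(theta))
        h += gain * np.exp(2j * np.pi * (nu * t - f * tau)) * steering
    return h

def pair_correlation(h1, h2):
    """Normalized correlation |h1^H h2| / (||h1|| ||h2||); lower means a better pair."""
    return abs(np.vdot(h1, h2)) / (np.linalg.norm(h1) * np.linalg.norm(h2))

def zf_snr_db(H, noise_power, power_per_user=1.0):
    """Per-user SNR (dB) with zero-forcing precoding; row k of H is user k's channel.

    With W = H^H (H H^H)^-1, user k's precoder column has squared norm
    [(H H^H)^-1]_kk, so normalizing it gives SNR_k = P / (N0 [(H H^H)^-1]_kk).
    """
    inv_gram = np.linalg.inv(H @ H.conj().T)
    snr = power_per_user / (noise_power * np.real(np.diag(inv_gram)))
    return 10 * np.log10(snr)

# Two hypothetical single-path users seen by an 8-antenna array.
N_ANT = 8
USER_A = [(1.0, 0.2e-6, 900.0, np.arcsin(0.0))]
USER_B = [(0.8, 0.5e-6, -300.0, np.arcsin(0.25))]
T_AHEAD = 20e-3  # predict 20 ms ahead from unchanged geometric parameters

h_a = predict_channel(USER_A, N_ANT, T_AHEAD)
h_b = predict_channel(USER_B, N_ANT, T_AHEAD)
print(f"pair correlation: {pair_correlation(h_a, h_b):.3f}")
print("predicted ZF SNR (dB):",
      np.round(zf_snr_db(np.vstack([h_a, h_b]), noise_power=0.1), 1))
```

With these two users the steering vectors are orthogonal across 8 antennas, so the correlation is essentially zero and each user keeps its full array gain under zero-forcing; highly correlated users would instead inflate $[(HH^H)^{-1}]_{kk}$ and lower the predicted SNR.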

The end product is a universal spectrum-multiplying technology for mobile networks that operates in both FDD and TDD, with any network generation, and requires no changes to existing handsets, radios or antennas.

Delay/Doppler for 4G and 5G

Innovations in the delay-Doppler paradigm for wireless communication date back as far as 2010, and commercial products that separate the delay-Doppler channel representation and processing IP from the OTFS waveform have been available since 2018. These software technologies use existing uplink reference signals, such as sounding reference signals (SRS) and demodulation reference signals (DMRS), along with periodic downlink channel quality indicator (CQI) reports, to extract robust geometric information, compute downlink SINR and predict downlink CSI, even on paired FDD spectrum separated by as much as 400 MHz.
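A simplified sketch of why geometry transfers across an FDD duplex gap: if the path delays are known (here they are simply given, standing in for the extraction step) and the path gains are assumed identical on the uplink and downlink bands, the gains can be fit by least squares on uplink samples and the model evaluated 400 MHz higher. The carrier frequencies, delays and gains are hypothetical, and the frequency-flat gain assumption is an idealization; this is not a description of any commercial product's algorithm. Simply reusing the uplink response as the downlink estimate fails because each path's phase rotates by $2\pi \Delta f\, \tau_i$ across the gap.

```python
import numpy as np

def freq_response(gains, delays_s, freqs_hz):
    """H(f) = sum_i g_i * exp(-j 2 pi f tau_i) at absolute frequencies (Hz)."""
    return np.exp(-2j * np.pi * np.outer(freqs_hz, delays_s)) @ gains

def fit_gains(h_ul, delays_s, ul_freqs_hz):
    """Least-squares path gains from uplink samples, given already-known delays."""
    a = np.exp(-2j * np.pi * np.outer(ul_freqs_hz, delays_s))
    gains, *_ = np.linalg.lstsq(a, h_ul, rcond=None)
    return gains

# Hypothetical geometry. Delays are chosen so 400 MHz x delay is not an integer;
# otherwise naive reuse of the uplink response would coincidentally work.
DELAYS = np.array([0.0, 0.371e-6, 1.127e-6])
GAINS = np.array([1.0, 0.6 * np.exp(0.5j), 0.3 * np.exp(-1.2j)])
DUPLEX_GAP = 400e6                              # UL-to-DL separation, Hz
UL_FREQS = 1.73e9 + np.arange(100) * 180e3      # assumed uplink sample grid
DL_FREQS = UL_FREQS + DUPLEX_GAP                # same grid, 400 MHz higher

h_ul = freq_response(GAINS, DELAYS, UL_FREQS)       # what the uplink observes
h_dl_true = freq_response(GAINS, DELAYS, DL_FREQS)  # what we want to predict

g_hat = fit_gains(h_ul, DELAYS, UL_FREQS)
h_dl_pred = freq_response(g_hat, DELAYS, DL_FREQS)

model_err = np.max(np.abs(h_dl_pred - h_dl_true))
naive_err = np.max(np.abs(h_ul - h_dl_true))  # reuse uplink response as-is
print(f"geometric prediction error: {model_err:.2e}, naive reuse error: {naive_err:.2f}")
```

In practice the delays (and Doppler shifts) must themselves be estimated from noisy SRS/DMRS observations, which this sketch does not attempt.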

Because of its geometric nature, the slowly changing delay-Doppler channel representation remains accurate for approximately 50 to 100 milliseconds, depending on the environment, which opens the door to functional disaggregation. Consequently, it enables Cloud RAN and lays the foundation for improving cell-edge performance via inter-cell coordination, with the software residing as an xApp in the near-real-time RAN Intelligent Controller (RIC) of the O-RAN architecture, which can support hyper-reliable, low-latency 5G requirements. Delay-Doppler software can also be deployed on any x86-based platform, either integrated into existing base stations or deployed next to them through defined interfaces.
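A back-of-the-envelope plausibility check, using assumed numbers (300 km/h, 3.5 GHz carrier) and the common rule-of-thumb coherence time $T_c \approx 0.423/f_d$ from the Clarke fading model. The time-frequency channel decorrelates in about 0.43 ms, while over 100 ms the train moves about 8.3 m, so each path delay (for static reflectors) shifts by under 28 ns, less than one LTE basic time unit $T_s = 1/(30.72\ \text{MHz}) \approx 32.6$ ns. This only illustrates why the geometric parameters age slowly; it is not a derivation of the quoted 50 to 100 ms figure.

```python
C = 299_792_458.0     # speed of light, m/s
LTE_TS = 1 / 30.72e6  # LTE basic time unit, s

def max_doppler_hz(speed_kmh, fc_hz):
    """Maximum Doppler shift f_d = v * f_c / c."""
    return speed_kmh / 3.6 * fc_hz / C

def coherence_time_s(fd_hz):
    """Rule-of-thumb coherence time T_c ~= 0.423 / f_d (Clarke model)."""
    return 0.423 / fd_hz

def max_delay_drift_s(speed_kmh, interval_s):
    """Upper bound on a path-delay change over interval_s when only the UE moves
    (static reflectors): each path length changes by at most v * interval_s."""
    return speed_kmh / 3.6 * interval_s / C

fd = max_doppler_hz(300, 3.5e9)
tc = coherence_time_s(fd)
drift = max_delay_drift_s(300, 0.1)

print(f"f_d = {fd:.0f} Hz, T_c = {tc * 1e3:.2f} ms, 50 ms / T_c = {0.05 / tc:.0f}")
print(f"delay drift over 100 ms = {drift * 1e9:.1f} ns (LTE T_s = {LTE_TS * 1e9:.1f} ns)")
```

Even the shorter 50 ms window spans more than 100 coherence times of the time-frequency channel, while the delay of each path moves by less than one sampling interval.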

Use of delay-Doppler software can indeed improve spectral efficiency and reuse, as well as user performance for low-latency applications, in today's 4G and 5G networks, without changes to the UE, radios or antennas. MNOs are now deploying these types of software solutions to increase the spectral efficiency of their 4G and emerging 5G networks, a trend that will no doubt continue as spectrum auctions keep increasing in value.