Servers with Nvidia’s first Arm-based data center CPU expected in 2023

Data center products headlined Nvidia Corp.’s announcements at this week’s Computex 2022 event in Taipei. The company unveiled new liquid-cooled versions of its A100 graphics processing units (GPUs) for data centers and announced the upcoming release of its Grace and Grace Hopper “superchips.”

The superchips, first shown publicly earlier this year at Nvidia’s GTC event, are built around Grace, Nvidia’s first Arm-based data center CPU. They are aimed at even higher-performance data center work than the workhorse A100, to drive the next generation of artificial intelligence (AI) and machine learning (ML) applications.

The first generation of products using these chips is now in development at Nvidia’s hardware partners, including Asus, Foxconn, Gigabyte, QCT, Supermicro, and Wiwynn. Nvidia said those products will be available starting in the first half of 2023.

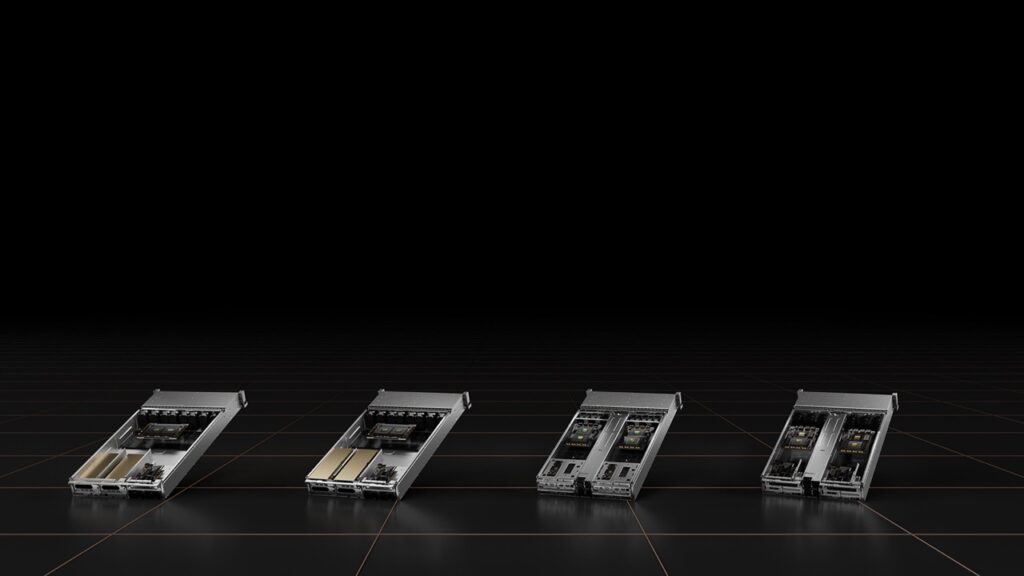

The new “superchip” servers are based on four Nvidia reference designs built around the Grace and Grace Hopper chips. The chips are named after Grace Hopper, the computer scientist (and U.S. Navy rear admiral) who pioneered machine-independent programming languages.

The new server portfolio includes configurable, modular, and scalable systems for AI training, inference, and high-performance computing (HPC), paired with Nvidia’s BlueField-3 data processing units (DPUs), programmable processors that accelerate data-handling tasks not suited to GPUs.

Making efforts to improve data center sustainability

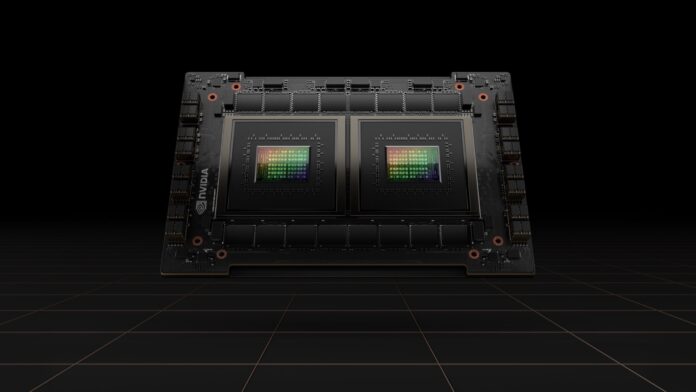

The Arm architecture in the Grace Hopper “superchips” promises significantly better efficiency than x86 designs, but that doesn’t mean Nvidia is sitting still when it comes to “greening” its current products. Nvidia’s A100 GPUs remain popular workhorses for enterprise AI and other HPC needs, and despite its focus on the new Arm-based processors, the company sees plenty of runway left for the A100. Nvidia announced that the A100 will be available as a liquid-cooled PCIe card.

“They’ll be available in the fall as a PCIe card and will ship from OEMs with the HGX A100 server. The H100 Liquid Cooled will be available in the HGX H100 server, and as the H100 PCIe in early 2023,” said Nvidia.

Nvidia said the new card is its first data center PCIe GPU with direct-chip cooling, and that the board design, now being qualified by Equinix, will be generally available this summer.

“In separate tests, both Equinix and Nvidia found a data center using liquid cooling could run the same workloads as an air-cooled facility while using about 30 percent less energy. Nvidia estimates the liquid-cooled data center could hit 1.15 PUE [power usage effectiveness], far below 1.6 for its air-cooled cousin,” said the company.
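PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so a PUE of 1.0 would mean zero cooling and power-distribution overhead. As a rough sanity check of the figures quoted above (not Nvidia’s or Equinix’s methodology), the sketch below holds a hypothetical IT load fixed and compares total facility energy at the two PUE values. The function names and the 1,000 kWh load are illustrative assumptions.

```python
def facility_energy(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by a PUE value and an IT load.

    PUE = total facility energy / IT equipment energy,
    so total facility energy = PUE * IT equipment energy.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def relative_savings(pue_before: float, pue_after: float) -> float:
    """Fractional cut in total facility energy for the same IT load."""
    return 1.0 - pue_after / pue_before


# Hypothetical IT load; only the ratio of the two PUE values matters.
IT_LOAD_KWH = 1000.0

air_cooled = facility_energy(IT_LOAD_KWH, 1.6)
liquid_cooled = facility_energy(IT_LOAD_KWH, 1.15)

print(f"air-cooled:    {air_cooled:.0f} kWh")
print(f"liquid-cooled: {liquid_cooled:.0f} kWh")
print(f"savings:       {relative_savings(1.6, 1.15):.1%}")
```

For the same IT load, moving from a PUE of 1.6 to 1.15 cuts total facility energy by roughly 28 percent, close to the “about 30 percent” figure. The measured savings and the PUE estimate come from separate analyses, so the agreement is approximate.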

Nvidia noted that a dozen system makers have already signed up to add the liquid-cooled A100 to their product lines later this year. With the A100 getting the liquid-cooling treatment, Nvidia said its next target is the H100 Tensor Core GPU, the A100’s far more powerful successor.

“We plan to support liquid cooling in our high-performance data center GPUs and our NVIDIA HGX platforms for the foreseeable future,” said Nvidia.