- With generative AI putting a spotlight on NVIDIA, the company is pushing “accelerated computing” as key to bringing AI to bear across industries
- The idea is to use NVIDIA services, software and systems to build “AI factories” that also run 5G RAN workloads
- NVIDIA, SoftBank partnership announced during COMPUTEX in Taiwan
- Arm-based approach puts NVIDIA up against x86 stalwarts AMD, HPE, Intel

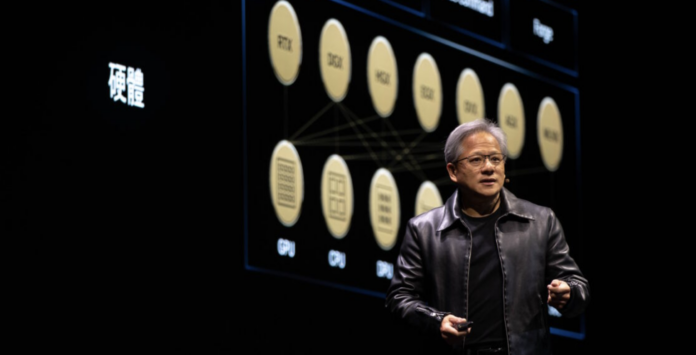

With NVIDIA stock on a tear and the company briefly passing a $1 trillion market cap, CEO Jensen Huang this week took the keynote stage at COMPUTEX in Taipei, Taiwan, to sell his vision: generative artificial intelligence (AI), backed by a range of NVIDIA technology, bringing sweeping change to enterprises and industries of all sorts.

Included in that vision, and backed by buy-in from Japanese conglomerate SoftBank, is the idea that operators investing in cloud-native 5G and distributed computing can build an infrastructure that boosts RAN performance while also standing up AI-optimized compute capabilities that can be leveraged for new revenue opportunities.

“Accelerated computing and AI mark a reinvention of computing,” Huang said in his keynote. “We’re now at the tipping point of a new computing era with accelerated computing and AI that’s been embraced by almost every computing and cloud company in the world.”

Operators typically rely on CPU-driven x86 stacks supplied by the likes of AMD, HPE and Intel, a pattern borne out by market share. NVIDIA’s accelerated computing pitch instead centers on the Arm-based Grace Hopper Superchip and the MGX reference architecture. For Layer 1 (PHY) processing, the design would pair a CPU with a GPU (NVIDIA’s specialty) as the accelerator and also integrate a DPU.

In a call with media and analysts, NVIDIA SVP of Telecom Ronnie Vasishta highlighted the scalability of the solution and the flexibility of a multi-use architecture, contrasting it with today’s single-purpose networks. “Telecommunications networks are built for a single purpose…They have built…for peak demand. So you’re over-provisioning the 5G network for peak demand. As new AI applications come in, that peak demand is going to grow, the power required to power this network is going to grow, the compute requirements for that network are going to grow,” he said.

To address this, NVIDIA proposes accounting for the delta between peak and average utilization and putting the difference to work by standing up “AI factories.” “In fact,” Vasishta continued, “5G becomes a software-defined overlay within that datacenter and can be provisioned to the use requirements of the 5G network in an automated way. That means that even if you’re running RAN at 25% of what you would’ve done in a proprietary network, you can run it at that rate and the rest of the datacenter is being used for AI…That’s very easily said but it’s difficult today,” he acknowledged. Still, the vision remains: “5G now runs as a software-defined workload in an AI factory.”
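
Vasishta’s argument rests on simple arithmetic: a pool sized for peak demand sits partly idle whenever load falls below that peak, and that idle share is what an “AI factory” would reclaim. The Python sketch that follows illustrates the idea under stated assumptions. The function name `idle_fraction` and the load profile are hypothetical, chosen for illustration only, and are not NVIDIA or SoftBank data. The sketch also assumes the pool is sized exactly for the highest observed load.

```python
from statistics import mean


def idle_fraction(load_profile: list[float]) -> float:
    """Average share of a peak-provisioned pool that sits idle.

    Assumes (hypothetically) the pool is sized exactly for the highest
    load in the profile, so idle capacity in each interval is peak - load.
    """
    if not load_profile:
        raise ValueError("load_profile must not be empty")
    peak = max(load_profile)
    if peak <= 0:
        raise ValueError("peak load must be positive")
    # Average idle share = 1 - (average load / provisioned peak)
    return 1.0 - mean(load_profile) / peak


# Hypothetical RAN load over four intervals, in normalized compute units
ran_load = [25.0, 50.0, 100.0, 25.0]
print(f"Idle share available for other work: {idle_fraction(ran_load):.0%}")
```

With this made-up profile, the average load of 50 units against a 100-unit peak leaves half the pool idle on average; real RAN traffic profiles would, of course, produce different figures.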

SoftBank will use the Grace Hopper Superchip and MGX reference architecture “at new, distributed AI data centers across Japan,” according to the companies. The plan is to use a “multi-tenant common server platform” to host generative AI alongside 5G and future cellular workloads. “As we enter an era where society coexists with AI, the demand for data processing and electricity requirements will rapidly increase,” SoftBank CEO Junichi Miyakawa said in a statement. “Our collaboration with NVIDIA will help our infrastructure achieve a significantly higher performance with the utilization of AI, including optimization of the RAN. We expect it can also help us reduce energy consumption and create a network of interconnected data centers that can be used to share resources and host a range of generative AI applications.”