Discussion around generative AI has quickly become a mainstay in conference presentations and earnings calls. The predominant thinking is that if you’re an enterprise, including a mobile network operator or other communications service provider, you’ve got to have a plan to put generative AI to work. So, to start, are generative AI tools like ChatGPT relevant to the telecoms set?

Speaking on a recent webinar hosted by RCR Wireless News, VIAVI Solutions Regional CTO for EMEA Chris Murphy explained that solutions like ChatGPT, Midjourney and the like have garnered so much attention because their outputs are “readily tangible and visualizable…but this is just a start…In specialized areas such as telecoms, generative models are starting to have quite an impact and that impact is only going to grow as time goes on.” Murphy was also quick to point out that these types of generative AI tools are “part of a whole ecosystem of the technology of machine learning and artificial intelligence.”

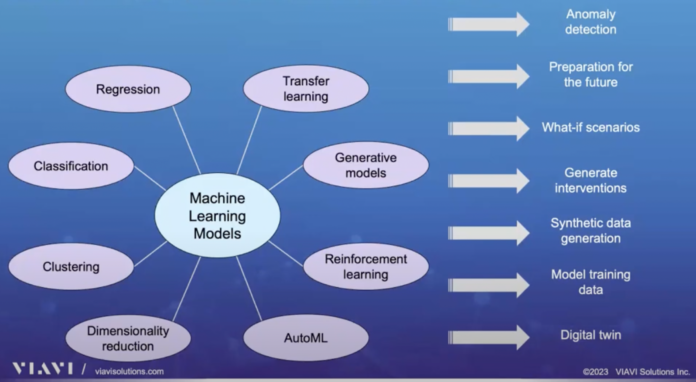

Before digging deeper, let’s take a moment to recap the landscape: classic machine learning algorithms that have reached a comparatively high degree of maturity, and newer innovations that are effectively upgrading tech that’s been around for decades. Mature machine learning algorithms include things like regression, classification, clustering and dimensionality reduction. Newer machine learning innovations, which are also giving a material boost to classic approaches, include things like transfer learning, reinforcement learning, automated machine learning and, of course, content generation.

As Murphy put it, “There are whole new disruptions in the technology from transfer learning allowing us to train models in a general sense and then apply them to a particular focus area. Reinforcement learning where we can learn to interact with the system in an optimal way, and auto ML where we can automate, to some degree, the generation of the machine learning models and the way we train it to take away some of the expertise that’s needed to generate some of these very interesting applications.” This idea of taking a large, general model then honing the focus for a particular type of business or type of business process aligns with how enterprises are actually using generative AI; enterprises are using models trained on domain-specific, proprietary data rather than the collective knowledge of humanity as it exists online.

Back to the relevance of ChatGPT and similar generative AI tools to the telecom industry: “We’re starting to see operators implementing large language models for helping their [employees] to do their jobs in better, more creative ways.” AT&T, for instance, in June announced Ask AT&T, an internal tool built on OpenAI’s ChatGPT functionality that draws on corporate data in a dedicated cloud tenant provided by Microsoft’s Azure. AT&T employees are using Ask AT&T to streamline software development and coding, and for language translation. Other use cases the operator is “exploring” are network optimization, updating legacy code, customer care effectiveness, HR support, “and fundamentally changing the way we work with the ability to reduce employee meeting time by providing automated summaries and action items.”

What else can generative AI do for operators?

The big idea behind generative AI is predicting what’s next. You give ChatGPT a text-based prompt and it tells you which words come next, whether that’s to write a poem, draft an academic essay, supplement web searches or assist in developing code or HR documentation. “When we can predict the future,” Murphy said, “we can come prepared for it ahead of time in spinning up the resources required. If we anticipate an increase in demand, we can run what-if scenarios, for example, to measure our resilience to certain scenarios.”
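To make the predict-then-prepare idea concrete, here is a minimal sketch in Python. It is not anything Murphy or VIAVI described: a naive trend extrapolation stands in for a learned forecasting model, and the traffic figures, the per-instance capacity and the function names are all hypothetical.

```python
import math

def forecast_next(history, window=3):
    """Naive forecast: last sample plus the average step over the recent window.

    A stand-in for a trained model; assumed for illustration only.
    """
    recent = history[-window:]
    steps = [b - a for a, b in zip(recent, recent[1:])]
    trend = sum(steps) / len(steps) if steps else 0.0
    return recent[-1] + trend

def instances_needed(load, capacity_per_instance):
    """Number of instances required to carry `load`, rounded up."""
    return math.ceil(load / capacity_per_instance)

def what_if(history, capacity_per_instance, surge_factors):
    """Map each hypothetical surge factor to the instances it would require."""
    base = forecast_next(history)
    return {f: instances_needed(base * f, capacity_per_instance)
            for f in surge_factors}

# Hypothetical hourly traffic samples (Mbps) and per-instance capacity (Mbps).
history = [400.0, 450.0, 500.0, 550.0]
scenarios = what_if(history, capacity_per_instance=250, surge_factors=[1.0, 1.5, 2.0])
print(scenarios)
```

The forecast tells the operator how many resources to spin up ahead of time, and the scenario table shows how much headroom a surge would consume before anything happens on the live network.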

He continued: “We can generate synthetic data, which is tremendously valuable, for example, for training our models using realistic data without having to collect that realistic data in large volumes. And this all leads into things like digital twin, which is an enabler for all sorts of training scenarios of optimization to drive our telecommunication networks to the next level…ChatGPT is in the collective consciousness, but I guess what I’m saying is that this is just one small piece of what’s going on in the technology, and there’s a lot more that will be at least as important as the large language models are for telecommunications.”
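As a rough illustration of the synthetic data point, the sketch below fits a very simple statistical profile to a handful of observations and then samples as many synthetic days as needed. It is only an assumption-laden toy: per-hour Gaussian sampling stands in for a real generative model, and the hours, load values and function names are invented for the example.

```python
import random
from statistics import mean, stdev

def fit_profile(samples_by_hour):
    """Estimate (mean, standard deviation) of load for each hour of the day."""
    return {hour: (mean(values), stdev(values))
            for hour, values in samples_by_hour.items()}

def generate_day(profile, rng):
    """Sample one synthetic day; loads are clamped at zero."""
    return {hour: max(0.0, rng.gauss(mu, sigma))
            for hour, (mu, sigma) in profile.items()}

# Hypothetical observed cell load (Mbps) at three hours, over three days.
observed = {
    0: [120.0, 110.0, 130.0],
    12: [480.0, 520.0, 500.0],
    20: [900.0, 950.0, 1000.0],
}

profile = fit_profile(observed)
rng = random.Random(42)  # fixed seed so the run is repeatable
synthetic_days = [generate_day(profile, rng) for _ in range(1000)]
print(len(synthetic_days), "synthetic days generated")
```

Three observed days become a thousand plausible ones, which is the appeal Murphy describes: realistic training data without collecting it in large volumes.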

For more on this idea of creating a digital twin of the network, read “From real to lab to live—continuous testing in the era of AI.”

Generative AI at the edge

Big picture, 5G is continuing to evolve and continuing to become more complex. Virtualization and disaggregation are happening in tandem with deployment of network workloads in hybrid cloud environments, including distributed clouds deep in the network at radio sites or even at customer premises. This is prompting organizational overhauls wherein operators are establishing DevOps workflows and setting up CI/CD pipelines to take advantage of the network’s flexibility. And all of this is in service of delivering new types of (largely) enterprise-facing services that set the stage for new lines of revenue and more effective network monetization.

Against this dynamic, complex backdrop, data is king. “The data can be aggregated in different ways, everything from subscriber level, the individual user level through to aggregated by network function and network components,” Murphy said. “So we’ve got a lot of data. That’s good news because we’ve got some interesting models, but we have to be careful about how we use the data…If we’re making decisions about how to schedule our data or how to adapt our MIMO, these decisions need to be made right at the edge because those are very short-term decisions based on the channel conditions, for example, and we need to be able to react to those changes.”

With the goal of leveraging data to predict the future and then prepare to react accordingly, the edge is an important focal point in the larger context of 5G monetization. 5G is designed to support low-latency applications over the air interface, but doing so requires moving compute out of centralized data centers and closer to the people and devices creating the data. “There’s an interesting challenge here of how to deal with the data that we have to squeeze the maximum value out of…So the data that we use for training, it may be generated at the edge and be in large volumes. And we might want to be careful about transferring [that data] to the datacenter for training models which then need to be operated at the edge.” Hence the importance of AI optimized for edge deployments.

In summary, “We’re lucky to have a lot of data that we can draw on to build the best models, train in the right place, in the optimal place, operate in the optimal place to deliver performance improvements and the ability to deliver the interesting and disruptive services,” Murphy said.

To watch the full webinar featuring Murphy and other industry experts, click here.