In our role helping Communication Service Providers (CSPs) transition to cloud-native 5G networks, there’s a problem we run into time and again. Here’s how it goes:

A customer will deploy, say, a new network card for their servers. We’ll measure the performance and confirm that the cloud infrastructure is now 2x faster. “Great!” the customer will say. “So, what does that mean for my 5G network functions? How many more subscribers can each node support?” The best we can offer: “It depends.”

There’s a direct link between the performance of a cloud-native network function (CNF) and the various dimensions (memory, CPU, network, storage) of the cloud infrastructure it runs on. But since every cloud is different, no universal mechanism exists to connect those dots. The same CNF deployed in one environment (running on VMware Tanzu, with a specific set of hosts and configurations) will perform very differently in another (say, Azure Kubernetes Service). In most CSP organizations, there’s not even a natural role to task with working this out. Application teams focus on workloads, Infrastructure teams focus on cloud, and each group assumes the other will provide the performance that is needed. As a result, the responsibility for determining performance requirements and measuring how they’ll intersect in production often falls through the cracks. The result? Ongoing issues and delays.

Application teams promote CNFs that tested beautifully in the lab, only to see them fail in production. Infrastructure teams pull data from the cloud environment to try to diagnose the problem, but have no context to understand what they’re seeing. And since no one knows what cloud performance a given workload actually requires to operate correctly, figuring out what isn’t working right in a production setting is like trying to separate salad dressing into vinegar and oil.

There’s a solution to this challenge. But we’ll need to start thinking differently about cloud-native services and the people supporting them.

Rethinking performance requirements

By moving to cloud-native networks, CSPs will be able to operate more efficiently and bring new services to market more quickly. But first, they’ll have to learn how to navigate an environment that’s radically different from anything they’ve dealt with before. The disconnect between cloud and workload performance is just one example of these growing pains.

The only way to understand what a CNF needs from the cloud is to thoroughly test it in your specific environment and empirically determine the cloud infrastructure performance the CNF requires to work correctly at scale. Once you’ve done that, you can map each CNF to specific cloud requirements. Application engineers can then tell the Infrastructure team what their workload needs during preproduction, instead of having to figure that out after it’s deployed, so most problems are caught and fixed before a CNF is promoted to production. When there is a problem with a live service, Infrastructure engineers can compare what they see in the network with each CNF’s requirements and quickly pinpoint the source. The CSP can move more aggressively to capture new business, knowing that the next-generation services they’re selling will work as expected.
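To make the idea of a CNF-to-cloud mapping concrete, here is a minimal Python sketch of what such a record and comparison might look like. Everything in it is an assumption for illustration: the metric names, the thresholds, and the `find_violations` helper are hypothetical, and real values would have to come from testing the CNF in your own environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """One empirically determined requirement a CNF places on the cloud."""
    metric: str   # hypothetical metric name, e.g. "network_gbps"
    limit: float  # threshold found through testing (illustrative values below)
    kind: str     # "min": observed must be >= limit; "max": observed must be <= limit


def find_violations(requirements, observed):
    """Return a description of every requirement the observed metrics fail."""
    violations = []
    for req in requirements:
        value = observed.get(req.metric)
        if value is None:
            violations.append(f"{req.metric}: no measurement available")
        elif req.kind == "min" and value < req.limit:
            violations.append(f"{req.metric}: {value} is below the required minimum {req.limit}")
        elif req.kind == "max" and value > req.limit:
            violations.append(f"{req.metric}: {value} is above the allowed maximum {req.limit}")
    return violations


# Hypothetical profile for one CNF; the numbers are placeholders, not measurements.
EXAMPLE_CNF_PROFILE = [
    Requirement("network_gbps", 20.0, "min"),
    Requirement("p99_latency_ms", 2.0, "max"),
    Requirement("cpu_cores", 8.0, "min"),
]

# Hypothetical metrics pulled from the cloud environment.
observed = {"network_gbps": 25.0, "p99_latency_ms": 3.5, "cpu_cores": 8.0}

violations = find_violations(EXAMPLE_CNF_PROFILE, observed)
for line in violations:
    print(line)
```

With a profile like this on hand, the Infrastructure team has the context it otherwise lacks: instead of staring at raw cloud metrics, it can see which specific requirement of which CNF is not being met.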

Accurate CNF-to-cloud performance data really is that valuable. Getting it, however, isn’t easy, for several reasons:

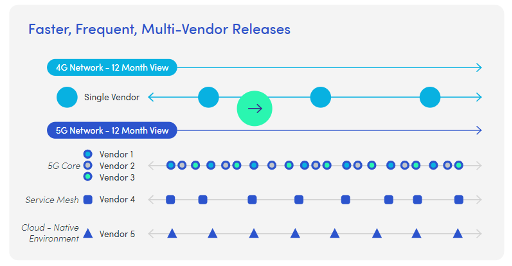

- Software changes continually. In the past, vendors updated legacy network functions 2-3 times a year. In a 5G world, CNFs might receive updates 2-3 times per week—along with every other part of the cloud-native environment. A CNF that performed well in your environment today might behave differently tomorrow.

5G’s cloud architecture leads to a constant stream of updates going live, dynamic instantiation of resources, and greater use of third-party clouds and networks.

- Gaps remain between lab and live environments. Most CSP labs still use legacy, 5G-only tools to validate CNFs. Yes, the CNFs run on a preproduction cloud, but it is a highly performant and reliable one that bears little resemblance to the production network. As a result, there’s no good way to know if you’re implementing a CNF optimally or not—it behaves the same way in the lab because the cloud infrastructure is always performant. It’s only when you deploy into production that it becomes clear that the live implementation has very different resource needs and availability.

- Finding people with the right skill set isn’t easy. To solve these problems, you need to understand what happens precisely at the intersection of the 5G workload and the cloud. But this is not a common skill set. Telecom engineers study 5G networks, and infrastructure engineers study cloud. Few have deep expertise across both domains.

Cloud providers and CNF vendors struggle with this problem as much as CSPs. They’re desperate for better ways to characterize customer networks, so they can accelerate installations. Today, they typically deploy everything into production, wait for something to break, and then troubleshoot what went wrong. It’s an iterative, expensive, trial-and-error effort. Some vendors estimate they could cut initial deployment times by up to 75%, just by being able to qualify infrastructure performance before they integrate.

Characterizing cloud performance

If you’re part of a CSP organization, holding off on deploying cloud-native 5G applications isn’t really an option. So, what should you do to capture the CNF-to-cloud performance metrics you need?

- First, recognize that this isn’t a permanent problem. Eventually, vendors will provide new tooling and approaches to navigate these issues more easily. But it’s going to take some time, and these tools are still being developed.

- For now, ask for help. In the short term, the quickest, easiest way to determine CNF-to-cloud performance requirements is to work with 5G cloud testing consultants. You may not yet be able to buy mature cloud-native 5G testing products, but you can work with the experts currently developing them and ensure your objectives are achieved.

- Start preparing to take this on yourself. There will never be a single, universal mechanism to make CNFs behave optimally in every cloud. Ultimately, every organization will have to build up the capability to determine what each CNF needs from their environment. Most likely, you will need to expand the charter of the Application team to collect this mapping data. And you’ll need to task Infrastructure teams with continually monitoring cloud performance against what each workload requires.

Whether working with a partner or (eventually) doing it yourself, you’ll find that characterizing CNF-to-cloud performance ahead of time makes a huge difference. You’ll have lower costs and fewer emergencies because you’re no longer promoting broken CNFs. You’ll discover most issues during preproduction, when they’re far less expensive to fix. When you do have a problem with a live application, you’ll be able to quickly pinpoint where and why a CNF isn’t getting what it needs. And you’ll gain the confidence to push forward with next-generation 5G services, knowing your environment and organization are ready to deliver the experience customers expect.