Benefits of hybrid AI include data security, context and efficiency

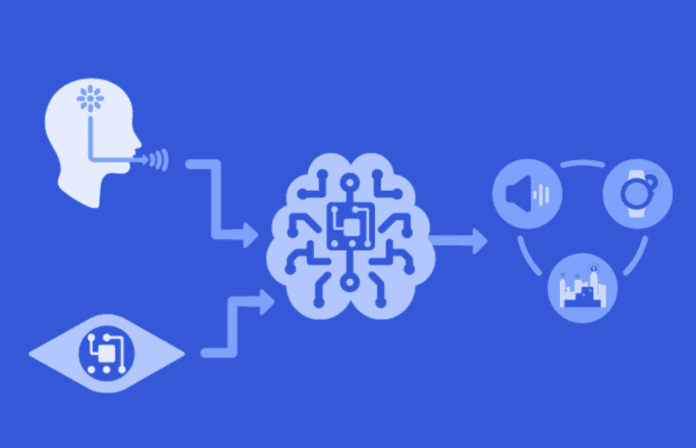

With generative artificial intelligence (AI) poised to permeate virtually all types of consumer and enterprise applications, network architecture becomes increasingly important. If the goal is to use AI to quickly make a decision and effect an action, latency is a major consideration. And as end-user devices become increasingly performant, hybrid AI architectures that distribute AI workloads among centralized clouds, distributed edge clouds and the device itself bring a number of benefits.

Tantra Analyst Founder and Principal Prakash Sangam, discussing the subject during a webinar hosted by RCR Wireless News, said hybrid AI supports improved security and privacy, context-aware decision-making, scalability and cost efficiency.

On security and privacy, Sangam said, “Every organization…will share so much information with AI systems, and storing all that away at a distant service on which users have no control seems really dangerous and worrisome…If it can be stored on the device or at an edge cloud that you have control over, then that makes it that much safer and gives security.”

Sangam also discussed the importance of context in using generative AI effectively, a point that mirrors messaging from major AI companies about the need for enterprises to use their own data to develop their own models for their own specific use cases. While the consumer versions of tools like ChatGPT are trained on enormous amounts of generic data, largely sourced by crawling the internet, a domain-specific tool would provide more immediate business value.

“Domain specificity is important,” Sangam said. Open models use “huge amounts of generic data. When you use that data for predicting something very specific, obviously errors are prone to happen. So, because of that and especially for enterprises, if you are trying to use AI for any of your applications, it makes sense that you use the data specific to your domain so that you get very accurate results. It is better to do that on an edge server somewhere or on-device.” This also has obvious security and privacy implications for proprietary corporate data.

Sangam also talked through the business logic of where AI workloads are run, and how that relates to ease of scalability. Training is how a model learns from data; it is followed by inference, in which the model makes decisions based on that training. The centralized cloud lends itself to training because of the sheer compute and memory capacity required. Growth in edge computing capacity could allow some training to happen at the edge, but inference will certainly happen at the edge, as well as on the device, in a hybrid architecture. “And for companies providing gen AI,” Sangam said, “it’ll be very cost effective” to use a hybrid architecture. “They don’t have to invest in all of this infrastructure to do the gen AI.”
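To make the placement logic concrete, here is a minimal Python sketch of how a hybrid system might decide where a workload runs. It is a hypothetical illustration under stated assumptions, not Sangam's or any vendor's method: the `Workload` fields, the `place` function, the `edge_can_train` flag and the round-trip latency constants are all invented for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    DEVICE = "device"
    EDGE = "edge"
    CLOUD = "cloud"


@dataclass
class Workload:
    kind: str                   # "training" or "inference"
    sensitive_data: bool        # proprietary or personal data involved
    latency_budget_ms: float    # hypothetical response deadline
    model_fits_on_device: bool  # can the device hold and run the model?


# Assumed round-trip times; real values depend entirely on the network.
EDGE_RTT_MS = 20.0
CLOUD_RTT_MS = 100.0


def place(w: Workload, edge_can_train: bool = False) -> Tier:
    """Pick a tier for a workload (hypothetical policy for illustration)."""
    if w.kind == "training":
        # Training defaults to the central cloud for its compute and memory;
        # domain-specific training on sensitive data moves to the edge only
        # if (by assumption) the edge has enough capacity.
        if w.sensitive_data and edge_can_train:
            return Tier.EDGE
        return Tier.CLOUD
    if w.model_fits_on_device:
        # On-device inference keeps data local and avoids network latency.
        return Tier.DEVICE
    if w.sensitive_data or w.latency_budget_ms < CLOUD_RTT_MS:
        # An edge cloud the organization controls is closer than the
        # central cloud and keeps data under its control.
        return Tier.EDGE
    return Tier.CLOUD


print(place(Workload("inference", False, 50.0, False)).value)  # edge
```

The ordering reflects the article's reasoning: training gravitates to the cloud, while inference is pushed as close to the user as the hardware allows, falling back to the edge when data sensitivity or latency rules out the distant cloud.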