Note, this article is taken from the RCR editorial report on Critical 5G Edge Workloads, published in September. The following is the first part of the foreword to the report. Go here to download the full report. The subject will be further explored in the upcoming Industrial 5G Forum on November 7; sign up here.

Let’s get down to brass tacks. Which critical industrial workloads should stay on site at the enterprise edge, and which should go to far-off cloud data centres? That is the question. What rules should critical-grade industries and industrial-grade service providers follow? None, retorts almost everyone (see interviews, pages 8ff). That is the challenge with Industry 4.0; nothing is easy with the design and implementation of new-fangled digital-change infrastructure for critical industry. Almost everything is bespoke – and the solution invariably takes on a life of its own when it gets to sign-off.

There isn’t a “one-size-fits-all”, responds AWS. “There are no rules,” says Siemens. Others say the same, in effect. Except this is not exactly true. There are no hard-and-fast rules, the message goes; and we are deliberately misquoting Siemens, in fact. In response to a direct question, it actually responds: “There is no rule-of-thumb.” Which is different, of course – and also wrong. Because while there are no strict rules, there are very clearly rules-of-thumb; and even Siemens, in denial or in translation, says so in its response – and promptly reels them off.

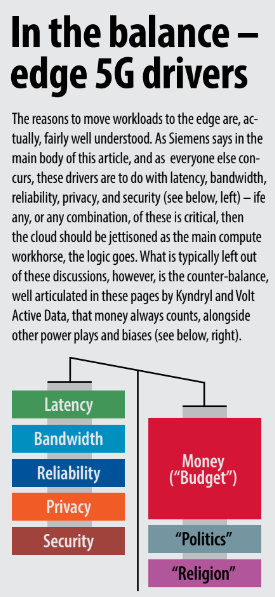

The German firm suggests (see pages 22-23) the “key factors” that govern where data gets processed are latency, bandwidth, and “privacy and security”. The last two are often bundled together, and might be related, but they are also different: privacy is about sharpening regulation, which says local data should be processed in local data centres, of whatever type; security is about robbery and vandalism of property and systems, in the digital sense. The point is that the edge should be engaged whenever these factors are critical.

Across the interviews in the following pages, everyone agrees on this: edge requirements are most often dictated by application performance, where latency and bandwidth are the king measures, or else by security and privacy (and abiding industrial distrust in public infrastructure). In some ways, these factors are all facets of infrastructure ‘reliability’. But reliability is the de facto terminology for infrastructure uptime, measured as a percentage of time (99.99… percent reliable) – arrived at initially by calculating operational outages, and arrived at finally by calculating capital outages. “Because an extra nine costs an extra zero,” explains Volt Active Data. Four nines, five nines, six nines? How many do you want? And how many can you actually afford?
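To put the nines in perspective, the arithmetic can be sketched in a few lines of Python. This is a rough illustration only: it assumes a 365-day year and treats “N nines” as an uptime of 1 minus 10 to the power of minus N; the function names are made up for the example and do not come from any of the firms interviewed.

```python
# Illustrative sketch: the yearly downtime budget implied by N nines of uptime.
# Assumption: a 365-day year (leap years ignored).
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes


def uptime_fraction(nines: int) -> float:
    """Uptime as a fraction, e.g. 4 nines -> 0.9999."""
    return 1 - 10 ** -nines


def downtime_minutes_per_year(nines: int) -> float:
    """Minutes of permitted downtime per year at the given number of nines."""
    return MINUTES_PER_YEAR * 10 ** -nines


if __name__ == "__main__":
    for n in (4, 5, 6):
        print(
            f"{n} nines: {uptime_fraction(n) * 100:.4f}% uptime, "
            f"about {downtime_minutes_per_year(n):.2f} minutes of downtime per year"
        )
```

On these assumptions, four nines leaves a little under an hour of downtime a year, five nines a little over five minutes, and six nines roughly half a minute. Each extra nine cuts the allowance by a factor of ten, which is the other side of the “extra zero” on the bill.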

Data streaming specialist Volt Active Data addresses the mad challenge of ultra-reliability very well (see pages 18-19); system integrator Kyndryl notes that enterprises are invariably pragmatic about digital change, whatever ‘rules’ are imposed during its design (see pages 8-9). But these are rules-of-thumb, remember; no one (quizzed as part of this exercise) is prepared to put metrics against latency and bandwidth, say, to explain when workloads start to migrate from the cloud to the edge. Because everything, as above, is bespoke. The only rock-solid rule is to look at the data.

“What is the data? How do you collect it? What are you going to do with it? Once you understand all of that, then everything gets a little clearer,” explains Steve Currie, vice president of global edge compute at Kyndryl. Of course, answers about the nature and velocity of critical data go in all different directions – which is where the long process of consultancy and collaboration starts, and what this report discusses in separate conversations with various protagonists in the Industry 4.0 mix.

Interestingly, Siemens points to certain other practicalities, besides (see pages 22-23): the ring-fenced enterprise edge also becomes important because of constrained or restricted network or compute resources. System integrator NTT says the same in a separate conversation (not included here; available online): certain new industrial IoT solutions, especially camera-based sensor applications, would fall over if their payloads had to traverse the open internet, or channels of it, to be transmogrified into useful information, it says.

Some of them would knock out legacy enterprise networks if they were not rerouted onto local-edge cellular, it adds. “There’s lots of data – especially in a large plant with thousands of sensors. There is so much data that the backhaul to the cloud would be overwhelmed, and the cost to upgrade could be very high,” comments Parm Sandhu, vice president of enterprise 5G services at the firm. “Which is another reason to process data locally – because the volume of data would add significant cost to centralising [compute functions].”

This article is continued in the RCR editorial report on Critical 5G Edge Workloads, available to download here.