Google recently invested $2 billion in Anthropic

San Francisco-based generative AI startup Anthropic has revealed that it will be one of the first companies to deploy new AI chips from Google. The announcement comes just a few weeks after Google confirmed a $2 billion investment in the startup.

Anthropic, considered a serious rival to ChatGPT creator OpenAI, will use Google Cloud’s newest TPU v5e chips for its Claude large language model (LLM). According to Anthropic, its Claude 2 chatbot can summarize up to about 75,000 words, compared with about 3,000 words for ChatGPT.

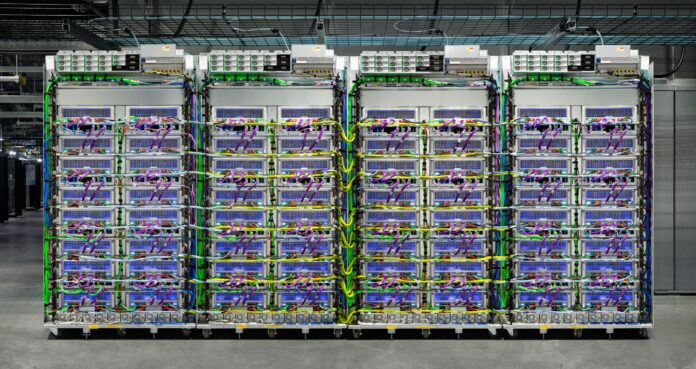

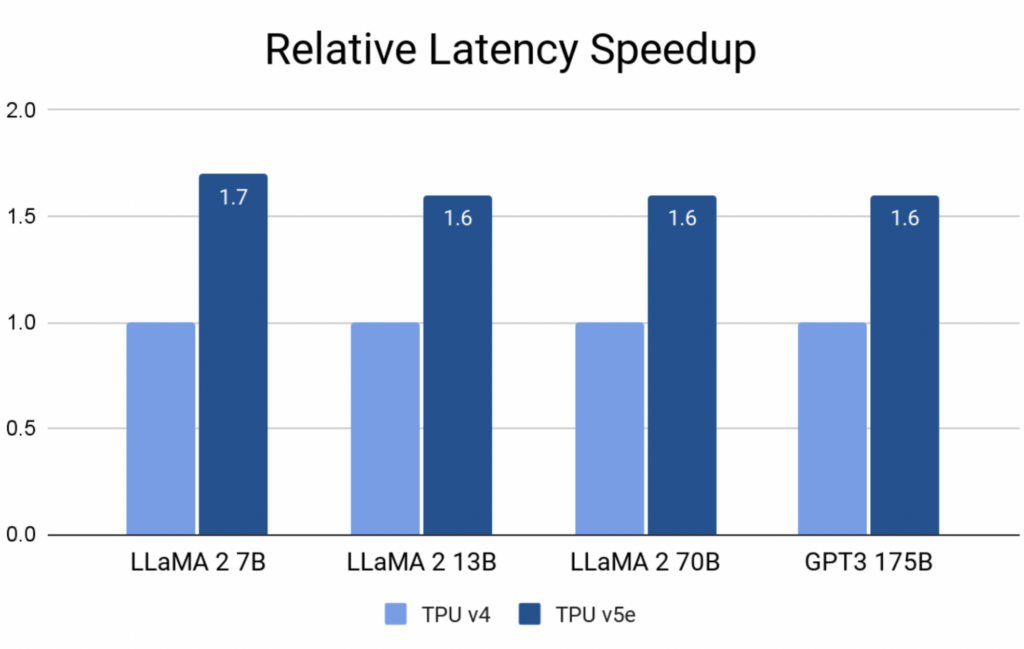

Google stated in a press release that its Cloud TPU v5e is designed to deliver the “cost-efficiency and performance required for medium- and large-scale training and inference,” and that it delivers 2.3x higher training performance per dollar than the prior generation. On latency, Cloud TPU v5e reportedly achieves a speedup of up to 1.7x compared to TPU v4, as shown in the accompanying graph.

The new chips, continued the company, will enable Anthropic to scale its LLMs beyond the physical boundaries of a single TPU pod — “up to tens of thousands of interconnected chips.” The AI company is also now using Google Cloud’s security services as part of the collaboration.

“Anthropic and Google Cloud share the same values when it comes to developing AI: it needs to be done in both a bold and responsible way,” said Thomas Kurian, CEO of Google Cloud. “This expanded partnership with Anthropic, built on years of working together, will bring AI to more people safely and securely, and provides another example of how the most innovative and fastest growing AI startups are building on Google Cloud.”

In addition to the Google Cloud investment, Anthropic secured a $4 billion investment from Amazon in September, when the pair announced a strategic partnership. Under that partnership, Anthropic will use AWS Trainium and Inferentia chips to build, train and deploy its future foundation models, while AWS will become Anthropic’s primary cloud provider for mission-critical workloads, including safety research and future foundation model development.

Additional details about Google’s investment in Anthropic remain scarce; however, it has been reported that the investment includes $500 million upfront, and that in April the tech giant invested hundreds of millions of dollars in Anthropic for what was then a 10% stake.
