Beyond the inner workings of mostly-public telecoms, and the navel gazing about open RAN and open APIs to re-make operators as ‘digital natives’ in the post-digital era, what will be the hottest topic at MWC next week? Sustainability? Well, yes; but that is a global challenge, and – in the flashy corporate power-play that is MWC – environmental sustainability is a noisy consequence of economic sustainability, and of desperate growth. No, the hottest topic will be generative AI, clearly; followed, arguably (says RCR Wireless), by the developing narrative around private 5G.

Of course, generative AI, as it has captured the imagination, is the magic in the mix – the ghost in the machine, which will change everything. But at MWC, it might start to combine with 5G in the enterprise market, along with edge computing at hardware-level and all kinds of unknowable applications at software-level, as an outward-facing solution for global economic change. This is grandiose MWC-talk, of course; the 5G angle in this conglomeration is significant, but also overplayed – in most places, except in the niche critical Industry 4.0 game. Wi-Fi will work very well, also.

But while it is roll-your-eyes trendy and predictable, the introduction of generative AI into MWC-style Industry 4.0 propositions is also exciting. And who might break from the ranks to produce such a prototype? Nokia, which trailed an industrial AI chatbot for its MXIE system last week. Is this surprising? Probably not. Whatever its woes with the supply of public 5G gear, the firm has been selling private cellular networks, in one form or another, for a decade already – and selling critical railway (GSM-R) networks for 35 years, and public safety networks for almost as long.

Indeed, even as the supplier ecosystem has swollen in the last five years (and latterly consolidated, as well), the latest GSA stats for global private 5G deployments put Nokia’s market share at about 50 percent. There is a case to be made that the Finnish outfit, more than most, has cleaved open the hard-nosed Industry 4.0 market for private cellular; and, as it will tell anyone who will listen, it has pioneered a bigger 5G edge-bundle for mission-critical (MX) workloads in the form of its industrial-edge (MXIE) system (now, apparently, part of a broader Nokia ‘ONE’ platform).

Ahead of MWC, Nokia is pitching its large language model (LLM) tool, MX Workmate, as the “first OT-compliant gen AI solution” for Industry 4.0. RCR says Siemens, which knows a thing about operational technology (OT), beat it to the punch with a press note in early February about generative AI for predictive maintenance, with a text-chatbot to boot – which is in-test already, and in-market by spring. “It’s one of those times where everyone is launching something,” says Stephane Daeuble, in charge of solutions marketing in Nokia’s enterprise division.

“But I think ours is pretty different. Ours is focused on the worker. It’s about machines and workers talking the same language.” Specifically, it is about an industrial AI lifeform with total contextual awareness, of a plant and a worker, to advise staff about the minutiae of machine fixes and process tweaks on a team comms app. Nokia says it has solved, or part-solved, certain challenges with AI for critical industry – around reliability, availability, security, sovereignty, as per the requirements for all Industry 4.0 tech; plus accuracy, clarity, traceability, and moderation, as related to AI.

“We are solving a lot of these challenges… [and] it’s not trivial, and why we are proud [of our work], and why we think we’re the first one to have an AI LLM for OT,” remarks Daeuble.

The first set of requirements, as above, are broadly solved by MXIE, as an on-prem compute-and-network system – effectively apart from the kinds of central cloud data centres that run consumer-geared generative AI apps. Daeuble explains: “The bottom line is we invested a lot, and continue to invest a lot, in R&D to fix all of these problems – to make generative AI work for Industry 4.0. So we moved the LLM to our own OT-compliant edge – which offers high availability, high reliability, and easy scalability; all orchestrated and managed for industrial SLAs.

“The edge also solves issues with data security and data sovereignty – by leveraging the secure OT data that’s already on the MXIE, where we’ve already got data flowing from the private network, just next to it. So really, the MXIE system is the ideal place for these LLMs; we’ve got local breakout, an OT data lake, and local storage. So we can tap into an LLM that is open source and locally deployable to run on-prem without a massive impact on energy consumption.” So, AI for OT? Problem solved? No, because of gnarlier issues with ‘hallucination’ and traceability.

These are the things, along with the everyday trust issues of industrial enterprises, that make MX Workmate a prototype, effectively, rather than a full-blown commercial proposition. “This is not a solution that’s going to come out tomorrow. We are showing the first instance at MWC. But it will take a little while to finalise, validate that in customer premises, and ensure it really does what it says – that it doesn’t do stupid things. But certainly, we have made fantastic strides, and there is lots of potential,” says Daeuble. He adds: “It is probably going to be a 2025 thing.” Customer tests will be sooner, the plan goes.

So what is AI hallucination, and what’s the deal with traceability, too? “Yeah, so basically we need to make sure it doesn’t say stupid stuff. So we are training and adapting, and grooming if you like, these [open source] language models for OT – which don’t need to know about your cat, or the best music in the world. And we will remove some of that stuff by making them OT-compliant. But yes, hallucination is a big thing, and a big challenge. Because you can’t have an AI tell a worker to do something which negatively impacts the plant, or the worker. It is a big, big topic.”

He explains: “We haven’t found a solution, to be honest; we’ve found parts of the solution. There’s lots of research in universities; [Nokia’s scientific R&D facility] Bell Labs is working with some of them. The challenge is to ensure an LLM that doesn’t know something doesn’t invent something – which is this concept of hallucination. One way to solve it is to run parallel LLMs – so we have three or four running on the MXIE, and, if one of the four, say, produces a different response, then we’ll remove it and use the other three; so we remove the outliers. But that work is ongoing.”
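For illustration only, the parallel-LLM idea Daeuble describes can be sketched as a simple majority vote. This is not Nokia's implementation, which the article does not detail. The function names, the `min_agreement` threshold, and the choice to compare answers by normalised text are all assumptions made for the sketch; a real system would more likely compare answers by meaning than by exact wording.

```python
from collections import Counter
from typing import Optional


def normalise(answer: str) -> str:
    """Lower-case and collapse whitespace so trivially different strings match."""
    return " ".join(answer.lower().split())


def consensus_answer(answers: list[str], min_agreement: int = 3) -> Optional[str]:
    """Return the answer most models agree on, or None if agreement is too weak.

    `answers` holds one response per parallel model (hypothetical input).
    Responses that differ from the majority are treated as outliers and dropped.
    """
    if not answers:
        return None
    counts = Counter(normalise(a) for a in answers)
    winner, votes = counts.most_common(1)[0]
    if votes < min_agreement:
        # No clear majority: safer to say nothing than to guess.
        return None
    # Hand back the first original (un-normalised) answer in the majority group.
    for a in answers:
        if normalise(a) == winner:
            return a
    return None


if __name__ == "__main__":
    replies = [
        "Replace the oil filter.",
        "replace the oil  filter.",
        "Replace the oil filter.",
        "Tighten valve 7.",
    ]
    print(consensus_answer(replies))
```

In this sketch, three of the four replies agree, so the dissenting one is discarded. If no answer reaches the threshold, the function returns nothing at all, which mirrors the stated goal that a model that does not know something should not invent it.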

Daeuble has slides about some of the inner workings, which he’s happy to share briefly for background information, but doesn’t want quoted. The implication is that Nokia’s work on OT-AI breaks ground, and sets it apart from its competitors in the edge-5G game. The issue of moderation / traceability sounds simpler; a digital paper trail to ensure accountability – in case the AI-bits hit the fan. “We will have tools to audit what’s happening, and moderate what the AI says,” he says, adding something about utilising the cloud for “long-term storage… of the trace [data].”

The auditing tool also keeps the automation loop open by stipulating a validation check with a specialist engineer – either in-house or at a third-party machine vendor, say – to double-check the AI analysis and recommendation. “As the trust builds, that step can be removed, or retained for critical use cases,” says Daeuble. In all, Nokia has “composed” a dozen different elements in the MXIE engine; some are its own, and some are from third parties. It all sounds complex and difficult; but it sounds, too, like Nokia is properly pleased and rather hopeful about its work.

“It is not trivial,” he says again. “And it will take baby steps to be accepted in the OT space.” Indeed, it will be some years, probably, before generative AI is let loose on critical enterprise processes. “It will be 2025 [before its commercial launch]. But that doesn’t mean we don’t have something that works today. It is just that we have to test it, very extensively, with customers. But trust is hard in OT. Some customers in the chemical industry, say, won’t allow us yet to connect the controllers for their machines onto private wireless.”

He goes on: “There is a trust curve with all this new technology that we are bringing in. At this point, we are still working just to light the [Industry 4.0] fire in some segments, let alone to do the more advanced things that these technologies enable. Other markets are more advanced, of course. But, yes, we will start testing by the end of 2024. But a commercial launch, suitable for and accepted by all industries, will take time. We will run quite a number of trials with close customers and close partners to iron out all these issues, and to start to build trust.”

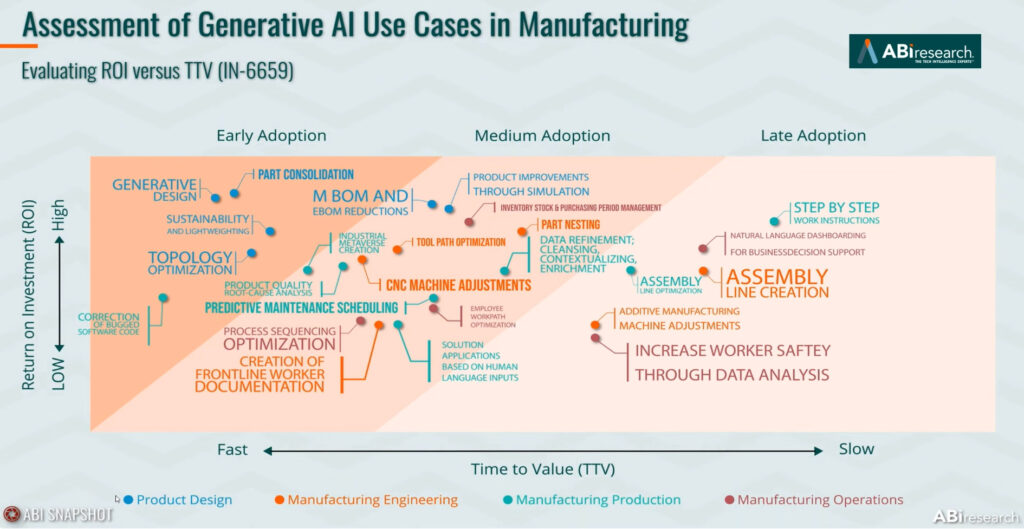

ABI Research has issued a report to assess potential generative AI use cases in manufacturing (see image). The point is that it stands to go everywhere; but the point, as well, because of issues with hallucination and trust, is that its initial commercialisation will likely be scaled down into single non-critical use cases (as highlighted in blue and orange in the ABI slide) – to applications like predictive maintenance, which Siemens appears to be doing? “Yeah, there are plenty of OT use cases that don’t impact the whole plant. Which are probably some of the early ones we’ll look at.”

Daeuble adds: “But MX Workmate can have an impact on all the things that ABI highlights.” Maybe so, but the launch apps will likely focus on “contextual messages”, issued via Nokia’s MXIE-hosted team comms solution to raise alerts and recommendations about nearby issues in the plant – “based on where a worker is in a factory”. He says: “It’s isolated – where you’re producing a metal widget of some kind, perhaps. And a little hallucination doesn’t impact more widely. Worst case, production of the single part decreases – but then the AI will realise and compensate later.”

He has a couple of made-up scenarios, complete with chatbot recordings. One is about the oil level in a pump. “The AI sends a message in human language, rendered in team comms as a two-way conversation – as voice or text. The worker doesn’t need to know anything. The only action, initially, is to look in the sight glass and check the oil level. And based on that, the AI analyses the documentation, and says what to do and how to do it,” he explains, before pressing play on a slightly-dislocated industrial Alexa.

“There is a 90 percent chance of a valve error in the next 12 hours. Initiate maintenance procedure #3,752 – for oil replacement. Fill up the lubricant reservoir with 12-litre 800/90 viscosity oil. Click on the link for the training video.” Another scenario is about the safety procedure for an emergency evacuation. The recording goes: “Warning, your position is inside the safety distance of malfunctioning high-pressure pump #45. Shutdown in progress. Put on your protective gas mask [and] proceed immediately to the meeting point in the parking lot. See navigation guidance.”

Daeuble says: “It adapts the language and instruction for the worker – so it knows if you’ve joined this morning, or if you’re a contractor. It can send visual guides; it knows, if you are brand new, not to instruct you to carry out the maintenance, but to contact the right staff… It may sound simple, but all of that information probably saves you three or four hours of digging into manuals, comparing what you see, sending someone to check, and so on. So the impact is significant in terms of ensuring workers know what they need to do – even if they’re new and unskilled.”

Quite how you construct the ROI for such random procedures is unclear; but, then, the private 5G market has been running the maths on such scenarios for years. “In terms of product deployment, the easiest use cases to implement are the ones that focus on human workers. Because they often have the most impact.” He explains how the whole AI project started, after observing a worker in a client factory go to retrieve a part, only to return again for a pallet loader to do the heavy lifting. “We were like, ‘Isn’t that where you need automatic dispatch of an AGV?’”

He adds: “And then we got with Bell Labs, and we put two-and-two together with generative AI – to have an AI engine figure things out, and an LLM to converse. And then you’re like, ‘Okay, all the pieces seem to fit’.” He reasons, as well, that “the most successful apps meet a fundamental need”, and that private 5G generally finds its mark, initially, just with team comms. “Because humans in charge of complex machines can now call a remote expert – versus having a bloke walk 20 miles between machines every day with a stack of books in a book sack.”

The same logic will apply with AI-based apps in Industry 4.0, he says – where familiar manual processes are rendered impractical by new technology. “You start with one case, and then add a second and a third; and then you’re like, ‘Okay, wow; that’s impactful’ – and it just expands with more advanced use cases.” When asked about the timing, and whether it might be perceived as premature and opportunistic, given how on-point generative AI is, Daeuble suggests simply that the timing works for Nokia, given its pioneering spirit in the digital enterprise space.

“When we had this in our hands, we wondered what to do with it. Is it too early? But it’s a perfect illustration of the value in the ONE platform. You need the connected worker and the connected machine, and everything on-prem. So there’s a perfect storm of data in MXIE, and we now have a solution that’s greater than the sum of its parts. And equally, we always launch early. We were early with private wireless – back in 2011. People were like, ‘What are you doing?’ But we were right. This is the same, and it will take time. But if you don’t start, it never happens.”