39,997 feet above the Gulf of St. Lawrence. Still on the way home from Mobile World Congress, still trying to figure out Mobile World Congress. One thing I learned is that context and data are really everything if you’re looking to create knowledge, right? Whether it’s the distinction between knowing a historical fact and understanding why that fact was important then and is relevant now, or knowing the best way to ask a question to get the information you need, or knowing how to move through a buffet line at, say, the Joan Miró VIP Lounge at Barcelona El Prat Airport, it’s all about leveraging context and data to create knowledge.

The first of what will likely be many asides: I think (really, I know deeply in my soul) that buffets should move left to right even if physically circular, so clockwise in that case. Barring that clear structure, you should start where the plates are. Having plates available at multiple points destroys the system, and it’s absolute madness. Putting plates and utensils together makes putting shit onto the plate impossible: you have to hold the plate and the utensils and put snacks on the plate with the third hand you don’t have, so you put the plate down, which slows things down, is completely inelegant and inefficient, and makes me feel like my brain is on fire. Plates to the left, then food, then utensils and napkins at the opposite end from the plates. Serving utensils should have appropriately shaped trays or holders that clearly indicate which dish they correspond to and where they should be stored when not in use. Hot items should be grouped together and cold items grouped together, with cold items first. If there’s a base item you can customize, yogurt for instance, that base item goes to the left, then you move left to right through fruit, granola, chia seeds, etc. An international standardization body should be established at the urgent direction of the United Nations Security Council, and every buffet at every airport lounge and every other place on this planet should be structured as outlined above. It’s obvious. How to use these standardized buffets should be a required element of public education, although it wouldn’t have to be taught because it would just be understood. The context is the problem; the data is there; creating the knowledge would sure as hell help me.

OK, I’m exiting my dissociative state for now. So to know something, you need context. Problem is, context isn’t universal. There are very few, maybe zero, shared bits of context and shared bits of data that lead to shared bits of knowledge. And that’s why things are harder than they should be. This idea has given rise to whole disciplines. Take industrial design (environmental, interactive, product, transportation), an amazing creative/scientific iterative process that finds the right balance of form and function: Ive of Apple, Dyson of Dyson, Rams of Braun, and the granddaddy of them all, Raymond Loewy. This guy gave us some of the most iconic brand identity collateral there will ever be: the Coca-Cola bottle, Pennsylvania Railroad’s S1 steam locomotive, Greyhound Scenicruiser buses, pencil sharpeners, kitchen (and buffet) items, and more. Loewy is an icon who made things easy, elegant, clear, functional. And he did it because he combined context with data to create knowledge. Legend. Then we’ve got user-centered design (UCD), which covers a lot of the same ground but puts slightly more emphasis on accessibility, research, and testing. Still, delivering accessibility with style and grace is among the goals of both industrial and user-centered design. For more information, reach out; I’m happy to send you the required reading list.

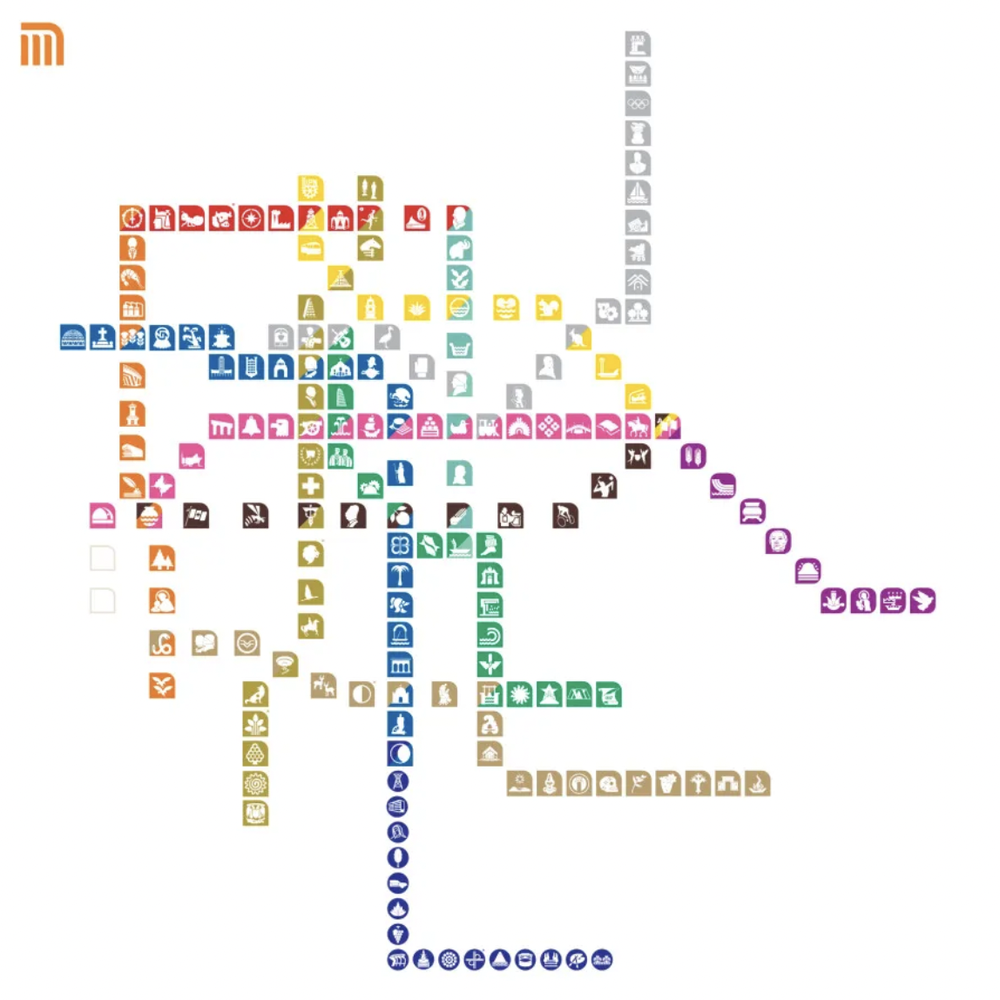

To pull that accessibility thread from UCD, here’s what you need to do. Go to Mexico City and use the metro system. Look past the fact that it’s over capacity at peak times, which leads to, again, absolute madness; that’s madness as a function of overload, not madness as a function of poor design. The metro system was built around the time of the 1968 Olympics, and this guy Lance Wyman was given the job of creating an accessible wayfinding system for a population with a high rate of illiteracy. Written signs wouldn’t work for a lot of the locals, and even if they had, they wouldn’t have worked for all of the visitors to the Olympics. It had to be something different. The big idea is an icon for each station that corresponds to what’s around it. And “what’s around” could be a recognizable fountain, the history of the place, the thing that regularly happens in that area, or the kind of animals that live there. To bring that back around, Wyman combined context and data to create knowledge in a way that meant you didn’t need to know any language or mathematics to get around efficiently. This worked, it scaled out, it’s still around, and I’ve spent a lot of time down there just looking at it all and thinking about it all. For more information…you know, don’t reach out (but do). I’m not an expert. There’s this place in London, in Mayfair, called Heywood Hill. You can give them a topic or a person or a time period or a whatever, and they can curate a library for you. Give them context and data, and they help you create knowledge. Highly recommend. And I acknowledge that I’m dissociating again; perhaps I’ll be more succinct from here. But perhaps not.

Life is hard, the condition of modernity is challenging, and this exercise in managing complexity in service of X is only going to get harder. In telecoms and in enterprise IT, we talk a lot about the edge: the metro edge, the RAN edge, the far edge, the enterprise edge, etc. Those are all fine; they all mean something and do something. But there’s another edge. It’s the human edge. It’s individuals who exist in space, alone and together. It’s you, it’s me, it’s us. And we’re all walking around just absolutely radiating data of all sorts: biological, digital, mental, physical, nominal, ordinal, discrete, continuous. It’s all here, all around us, all the time. But it’s damn near impossible to ingest all of that into some sort of fluid platform, systematize it, process it, apply rules, then turn it into knowledge. That’s because capturing the context is crazy difficult and, as (hopefully) established, context and data go together like Loewy and Wyman.

The product reviews. My colleague, someone I travel with a lot, talk with a lot, and have a lot in common with, has all the context and data and has used them to create the knowledge of how to be around me, manage me, manipulate me, make me happy, etc. He brought some continuous glucose monitors, the Dexcom G7, with him to Mobile World Congress. Really, he was running an experiment on me, and I understood that immediately and was fine with it. Putting it on, physically, couldn’t be easier. The app is intuitive, although it does need some calibration; it went from desperately pushing me critical alarms telling me to seek medical help immediately to being dialed in, with context from dietary input and data from a finger prick. Setting up the app was a pain in the ass, but the kind of pain in the ass I’m good at, and not at all a reflection on the product, rather on my location. Basically, medical data and privacy laws being what they are, I should’ve set it up in the US. The solution, figured out pretty quickly by looking at a few subreddits, was to reboot my iPhone in developer mode, turn on a VPN, set its location to the region in my Dexcom G7 user profile, and open the app. There you go.

To say that the experience of using the product was immediately impactful would be an understatement. It took me a day or two of looking at the data to figure out the food piece of it. I don’t have any kind of insulin-related disorder, but I now know that to keep my blood sugar in the 90–100 milligrams of glucose per deciliter of blood range, which is where I felt the best, it helps to eat veggies, then protein, then carbs, assuming something of a composed meal. Fruit, not really a great snack for me. Just protein. When I was at the Fira, two-thirds of the way through rolling meetings in 45-minute increments and feeling myself losing attentiveness, wouldn’t you know it, there were patterns in my blood sugar. Patterns I could fix right away. Sitting for an extended period of time, walking for an extended period of time, walking really fast for a short period of time: I could see exactly what each was doing to my body. We set up my man Gavin, who stays on me during the show, so he could see my ebbs and flows too. He’s a meticulous British (he’d want me to specify Scottish) bureaucrat. Give him context and data and he knows how to turn it into knowledge. The end result was a highly productive show that didn’t absolutely destroy my body. I’m typing this on the flight home. Feel great. I’m not going to get on one about biohacking, but I am going to get on one (am already on one) about taking this kind of data, giving it as much context as possible, then creating actionable knowledge.

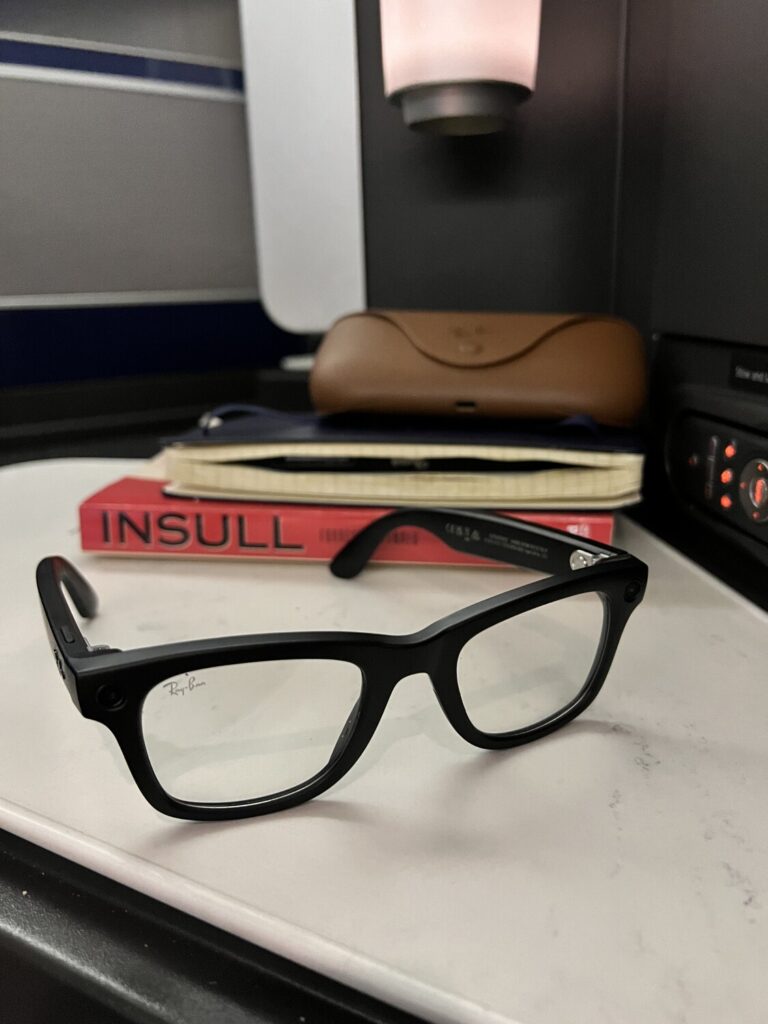

Second product review: the Ray-Ban Meta Smart Glasses. Qualcomm’s Hugo Swart turned me on to these things; it took me a while to figure out what he’s known all along. I’m about two months in and I find this piece of gear a must-have at this point. My initial thesis for buying them was that I have a soon-to-be two-year-old at home, so we’re taking lots of baby pics and videos. But taking out the phone, for me, takes too long and completely breaks the context of the moment I’m trying to capture. I want the context and the data to create and retain experiential knowledge. The glasses get the data and capture the knowledge without breaking the context. I have so many highly intimate videos that I’ll treasure forever, including things like reading my son a book at bedtime, telling him I love him before putting him in his crib, and him saying it back to me for the first time: “love you.” I have that on video. I can, and do, watch that video all the time. And that’s one of many moments like it that I was able to capture because of these glasses. There’s the first killer application.

The second is the audio. I listen to lots of podcasts and a wholly absurd amount of Steely Dan while I’m doing pretty much anything that doesn’t require me to hear what’s going on around me. I usually use the latest-gen AirPods. They have good active noise cancellation. Too good. My wife scares the bejesus out of me sometimes because I completely lose situational awareness. We have a number of soft agreements about when she should maybe just text me to let me know there’s movement happening so I don’t jump out of my skin. The glasses, on the other hand, deliver incredible audio experiences without sacrificing awareness. It’s worth noting this is not a one-to-one comparison, and it isn’t meant to be. But the open-ear speakers and overall audio experience, including for phone calls, are fantastic.

There’s also the Meta AI feature set. I don’t want to sound harsh, but it’s not good. It’s getting better, but it’s still not good. That’s why I try to use it as often as I can. It’s no secret that this is a product meant to set up a long-term shift of the primary computing platform from phone to glasses. Right now it’s a combo of both, but eventually the phone goes away. It’s also a product meant to train Meta’s AI model, to train it with context and data so that some day soon it can create knowledge for you. The combination of the camera and the voice-based AI interface gives me such a clear understanding of all the applications coming to your glasses. Look at a menu in Catalan, ask your AI to bring to your attention any dishes with an ingredient you prefer, and be fed the information in your ear. You gave the thing context and data and it created knowledge to give back to you. You’re walking around the show floor and someone approaches you. You know you’ve met but can’t access the memories. The camera looks at their face and feeds some quick, relevant background into your ear. You gave the thing context and data and it created knowledge to give back to you. Now think about advancements in the lenses and the ability to overlay visual information. The outlook is unbelievable to me.

And there are other products. I looked at lots of them at Mobile World Congress. All the smart glasses, of course, but the whole space is really pushing forward right now with things like the Humane Ai Pin and Apple Vision Pro. Now combine all of this and think about how to take in all of the dynamic contextual elements that surround us, the fluid platforms and architectures we need to ingest this constant, radiating flow of data, and create new kinds of knowledge at speed and scale. Imagine how much knowledge you can create and gain, I can create and gain, we can create and gain, if we crack this. So much knowledge that every complex system of every type changes: the climate, the economy, power, transportation. And to change those complex systems, we need to solve for the complex system I’m trying to describe here; it’s a thing that’s moving away from the analog-to-digital and toward the digital-to-biological. And this emergent, non-linear new complex system is centered at the human edge. It’s me, it’s you, it’s us. And getting it right depends on using as much context as possible and as much data as possible to deliver as much net-new knowledge as possible as quickly as possible. Qualcomm calls this “engineering human progress.” I think that’s what we all need to be working on.