Using a gen AI overlay for legacy BSS allows operators to unlock value from data without a holistic upgrade

Last month at the TM Forum’s DTW Ignite event in Copenhagen, Denmark, Dell Technologies, SK Telecom, Matrixx and Compax showcased a “Moonshot Catalyst” project that lets operators engage more effectively with, and derive more value from, data held in legacy BSS by adding a generative AI overlay on top of it. The idea is to use “human-like, context-specific, expert personalized customer interactions” to enhance customer experience, grow revenues and boost customer retention, according to a blog post from Dell and its collaborators.

In an interview with RCR Wireless News, Manish Singh, CTO for Dell’s Telecom Systems Business, addressed the “why” of the project. “Long story short,” he said, “it comes down to it being a challenging environment for the telcos in terms of revenue growth…I think that’s just the reality of where we are as a market.”

Explaining why an operator would choose to add a layer of technology on top of legacy BSS rather than upgrade the whole stack, Singh said that a full-on replacement is “expensive, it’s time consuming, it’s resource intense. So I think near term the option and the approach that I expect more of the service providers to take is…unlock value from all the progress that has been made with generative AI…and unlock specific use cases while still keeping…existing BSS stacks.”

The catalyst project took an existing AI foundation model and fine-tuned it using SK Telecom’s proprietary data. This speaks to an ongoing debate within telecoms (and other industries) over whether to build models from the ground up or to take an existing model, such as Meta’s Llama or OpenAI’s GPT models, and optimize it for a particular domain or business using industry-specific and/or proprietary data.

“Telco has all its domain nuances,” Singh said. “You’re talking about charging plans, roaming plans, adding customers, add a family member, remove another family member, plan changes, device offers…The data that was used was [SK Telecom’s] data sets around BSS.”

Dell’s approach here is model agnostic, Singh said. “The world of foundation models themselves is rapidly evolving and they are rapidly changing. It’s clear the models, their abilities, their capabilities, are continuously evolving so what we wanted to do is ensure that architecturally we have flexibility that you can select and adopt different models without having to go change the underlying BSS stack.”
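In software terms, the model agnosticism Singh describes amounts to an interface boundary: the customer-facing agent depends on an abstract model interface rather than on any specific model, so one model can be swapped for another without touching the BSS-facing code. The Python sketch below is a hypothetical illustration of that general pattern, not Dell’s or SK Telecom’s implementation. `ChatModel`, `StubModelA`, `StubModelB` and `BssAgent` are invented names, and the “models” are stubs that do not call a real LLM.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical interface that any foundation-model adapter must satisfy."""

    def generate(self, prompt: str) -> str: ...


class StubModelA:
    """Stand-in for one fine-tuned model (no real LLM is called)."""

    def generate(self, prompt: str) -> str:
        return f"[model-a] {prompt}"


class StubModelB:
    """Stand-in for a newer model that could replace model A."""

    def generate(self, prompt: str) -> str:
        return f"[model-b] {prompt}"


class BssAgent:
    """Customer-facing agent. It only knows the ChatModel interface,
    so changing models does not require changing this class."""

    def __init__(self, model: ChatModel) -> None:
        self.model = model

    def swap_model(self, model: ChatModel) -> None:
        # Adopt a different model at runtime; nothing else changes.
        self.model = model

    def answer(self, question: str) -> str:
        return self.model.generate(question)


agent = BssAgent(StubModelA())
print(agent.answer("Which roaming plan fits a week abroad?"))
agent.swap_model(StubModelB())
print(agent.answer("Which roaming plan fits a week abroad?"))
```

The first call is answered by the model A stub and the second by the model B stub, while `BssAgent` itself is unchanged, which is the property the quote is about.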

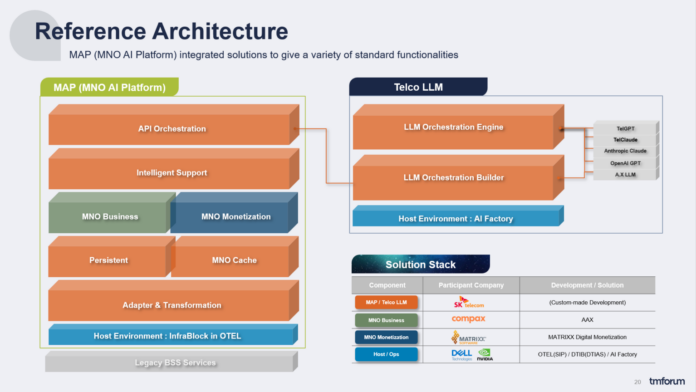

The technology involved comprises four pieces: the telecom-specific large language model (LLM), which is hosted on Dell AI Factory infrastructure; the Mobile Network Operator AI Platform (MAP), which organizes data and makes it accessible to the LLM; an On-premises Telecom Cloud Platform (OTCP), which acts as an automated, validated and continuously integrated platform for deploying and managing BSS functions; and the AI chat agent that a user interacts with.
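To make the division of labor concrete, the Python sketch below is a hypothetical, heavily simplified request path: a data layer playing the MAP role turns a BSS record into context, and the chat agent combines that context with the customer’s question before calling the model. Every name (`Subscriber`, `DataPlatform`, `chat_agent`, `echo_llm`) and every field is an assumption made for illustration. The LLM is a stub, and the OTCP deployment layer is not modeled at all.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Subscriber:
    # Hypothetical BSS record; real BSS schemas are far richer.
    msisdn: str
    plan: str
    family_members: int
    roaming_enabled: bool


class DataPlatform:
    """Illustrative stand-in for the MAP role: organizes BSS data
    and exposes it to the LLM as plain-text context."""

    def __init__(self, records: dict[str, Subscriber]) -> None:
        self.records = records

    def context_for(self, msisdn: str) -> str:
        sub = self.records.get(msisdn)
        if sub is None:
            return "No subscriber record found."
        return (
            f"plan={sub.plan}; family_members={sub.family_members}; "
            f"roaming_enabled={sub.roaming_enabled}"
        )


def chat_agent(
    msisdn: str,
    question: str,
    platform: DataPlatform,
    llm: Callable[[str], str],
) -> str:
    """Front end the customer talks to: fetch context, build a prompt, call the model."""
    prompt = (
        f"Customer context: {platform.context_for(msisdn)}\n"
        f"Question: {question}"
    )
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    # Placeholder for the hosted telecom LLM; it simply returns the prompt it received.
    return prompt


platform = DataPlatform(
    {"821012345678": Subscriber("821012345678", "5G Premium", 2, False)}
)
reply = chat_agent("821012345678", "Can I add a family member?", platform, echo_llm)
print(reply)
```

Because the stub echoes its input, the printed reply shows exactly what the model would receive: the subscriber’s plan details followed by the question. In a real deployment the data layer would also handle access control and the model would generate an answer rather than echo the prompt.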

Looking at next steps, Singh said the priorities are further standardization through TM Forum, working with SK Telecom to take the solution to other service providers, and expanding the use cases the solution can serve.

Another big piece here is Dell’s push to bring AI to where the data is, rather than moving the data to the AI, which means running workloads on-prem. Making the case for on-prem, Singh said that “the data security/data sovereignty/data privacy issues start to come up, and…as you get more and more users to do it and your inference transactions start to grow, there’s an economic aspect to it. The whole Dell AI Factory is pretty much designed ground up for that.”

Singh described the two major priorities for Dell’s Telecom Systems Business. The first is “cloud transformation of the service providers and really getting that cloud transformation from end to end,” spanning OSS/BSS, core, edge and access networks. “Horizontal cloudification of the network…The other thing that I’m working on and driving is unlocking all these productivity gains, customer care gains, the service providers can have with generative AI. And these two are not decoupled. If you really want to unlock the full potential of…generative AI, you need to have an underlying infrastructure that’s…cloud-native. If your underlying infrastructure is not flexible, not agile, not elastic, you are very limited in terms of what you can do with those insights.”