This article is intended for readers who work primarily for mobile operators and mobile enterprise businesses and who want to use containerized network functions that generate, emulate, and validate real-world traffic for testing.

Introduction

In today’s cloud-native world, there are a number of advantages to deploying containerized network function test tools on cloud platforms such as Microsoft Azure. This article covers some of the important configurations and recommendations that help with the deployment process and make use of the self-healing properties of Kubernetes. It concludes with how users can apply Spirent tools to their 5G, Wi-Fi, and network testing needs.

Azure Infrastructure and HAKS

Azure infrastructure provides compute on NUMA-aligned VMs with dedicated cores backed by hugepages, which ensures consistent performance. Azure networking includes SR-IOV and DPDK for low latency and high throughput. Filesystem storage is backed by high-performance storage arrays. Kubernetes can be deployed on VMs or directly on bare-metal servers, depending on performance needs; Azure prefers to run Kubernetes on VMs. Azure Kubernetes Service (AKS) is a managed service that lets users focus on developing and deploying their network functions while Azure handles the management and operational overhead.

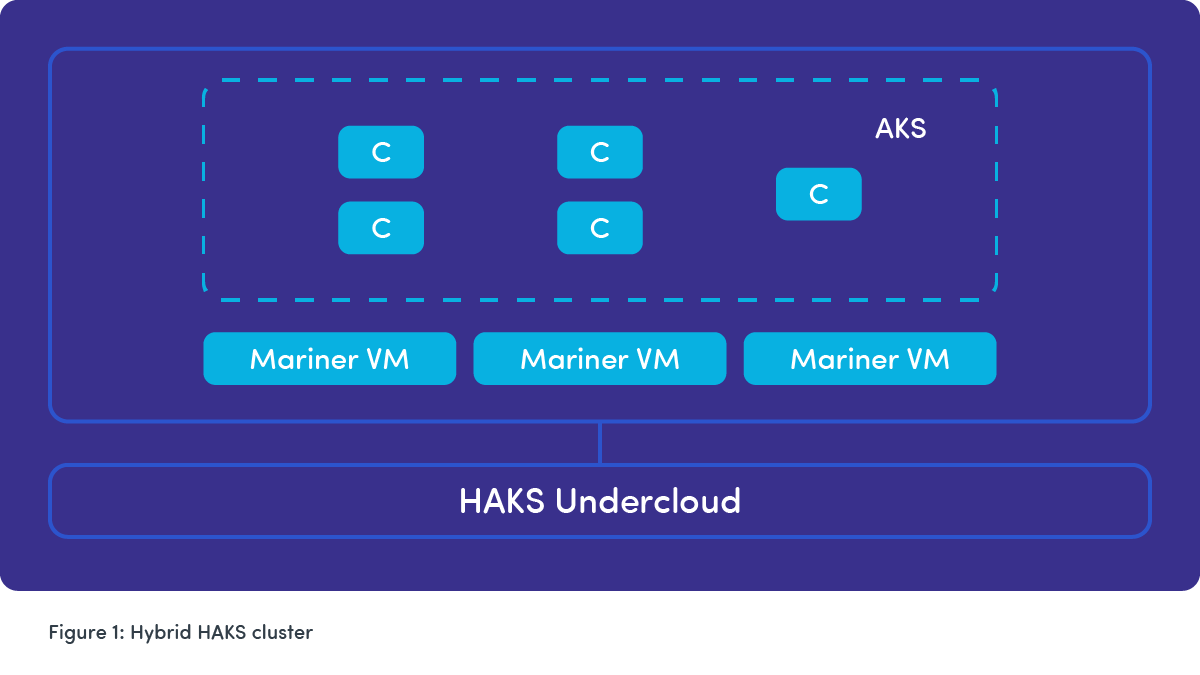

HAKS (Hybrid Azure Kubernetes Service) is an emerging concept that combines on-premises and cloud-based Kubernetes solutions. It offers users the flexibility of running AKS both in the Azure cloud and in their on-premises environments, typically through Azure Arc. This hybrid setup enables organizations to manage and deploy containerized applications consistently across cloud and on-premises resources, making it suitable for scenarios with specific compliance, latency, or data-related requirements.

Virtualization layer configurations and recommendations

- The key to a successful deployment is creating the cloud resources in advance: resource groups, virtual machines, networking configuration, storage, Microsoft Entra ID, and the network security groups and application security groups that control traffic within a VNet.

- Set up the Kubernetes cluster with the required Layer 2 and Layer 3 networking, including but not limited to isolation domains, cloud service networks, default CNI networks, and additional attached networks provided through CNI plugins such as Multus and Flannel.

- A Layer 3 network requires a VLAN and a subnet assignment. The subnet must be large enough to provide an IP address for each of the VMs. A Layer 2 network requires only a single VLAN assignment. A trunked network requires the assignment of multiple VLANs.

- It’s good practice to keep quorum in mind when choosing the number of master and worker nodes. Quorum requires a majority of members to stay available, so an odd number of nodes gives the highest fault tolerance for the resources used.
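The two sizing rules in the list above (a subnet large enough for every VM, and an odd node count for quorum) can be checked with simple arithmetic. The following is a minimal sketch rather than a platform-specific tool: the function names, the example CIDR, and the `reserved` parameter are assumptions made for illustration, and the number of addresses a given platform reserves per subnet should be taken from its documentation.

```python
import ipaddress


def subnet_fits(cidr: str, vm_count: int, reserved: int = 2) -> bool:
    """Return True if the subnet has at least one usable address per VM.

    `reserved` is an assumption: the addresses that cannot be handed to VMs
    (network and broadcast by default; some platforms reserve more).
    """
    network = ipaddress.ip_network(cidr, strict=True)
    usable = network.num_addresses - reserved
    return usable >= vm_count


def failures_tolerated(members: int) -> int:
    """Members that can fail while a majority (quorum) still remains."""
    quorum = members // 2 + 1
    return members - quorum


if __name__ == "__main__":
    # A /28 holds 16 addresses, 14 of them usable with the default reserve.
    print(subnet_fits("10.0.0.0/28", vm_count=12))
    print(subnet_fits("10.0.0.0/28", vm_count=20))
    for n in (3, 4, 5):
        print(n, "members tolerate", failures_tolerated(n), "failure(s)")
```

Going from three to four members does not raise the number of failures tolerated, which is why odd counts are the usual recommendation.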

Kubernetes layer configurations and recommendations

- Packaging charts through Helm and installing Helm on the Kubernetes cluster simplifies installation, upgrades, and management of network functions.

- Keep containers as lightweight as possible, and give each pod well-defined resource requests and limits for CPU, memory, and storage to optimize resource utilization.

- Running Kubernetes on VMs is helpful because there is no need to be concerned with the details of the underlying server hardware when the nodes are set up as VMs and managed with an orchestration tool. VMs are also easier to provision and manage.

- Make sure applications can grow vertically and horizontally by using Kubernetes API resources. Horizontal growth is achieved by adding nodes, and vertical growth by adding more CPU and memory resources.
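To illustrate the recommendation above about resource requests and limits, the sketch below checks a pod manifest, represented as a Python dictionary, for containers that are missing CPU or memory settings. The `spec.containers[].resources.requests` and `limits` fields follow the standard Kubernetes pod schema; the function name, pod name, image references, and values are hypothetical and exist only for this example.

```python
from typing import Dict, List


def missing_resource_settings(pod_manifest: Dict) -> List[str]:
    """List every CPU/memory request or limit that a container lacks."""
    problems = []
    for container in pod_manifest.get("spec", {}).get("containers", []):
        resources = container.get("resources", {})
        for section in ("requests", "limits"):
            for resource in ("cpu", "memory"):
                if resource not in resources.get(section, {}):
                    problems.append(
                        f"{container['name']}: no {section}.{resource}"
                    )
    return problems


# Hypothetical manifest: names, images, and sizes are illustrative only.
example_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "traffic-generator"},
    "spec": {
        "containers": [
            {
                "name": "generator",
                "image": "registry.example.com/generator:1.0",
                "resources": {
                    "requests": {"cpu": "2", "memory": "4Gi"},
                    "limits": {"cpu": "2", "memory": "4Gi"},
                },
            },
            {
                "name": "sidecar",
                "image": "registry.example.com/sidecar:1.0",
                "resources": {"requests": {"cpu": "100m"}},
            },
        ]
    },
}

if __name__ == "__main__":
    for problem in missing_resource_settings(example_pod):
        print(problem)
```

A check like this could run in a deployment pipeline before a chart is installed, so that pods without explicit requests and limits are caught early.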

Running applications smoothly and efficiently

Follow these steps to ensure that container applications deployed on Azure Kubernetes Service run smoothly and efficiently.

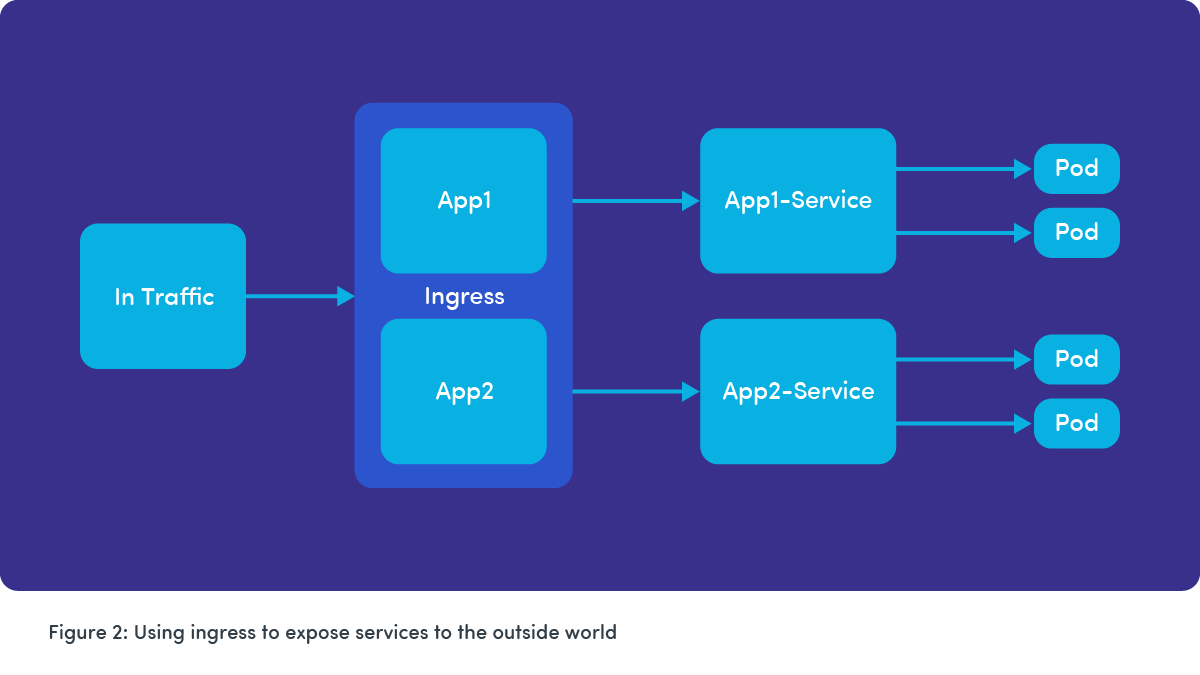

- Use ingress load balancing to manage how services are exposed outside of the Kubernetes cluster.

- For the best cost and utilization, use horizontal pod autoscaling to scale the number of pod replicas up and down with load.

- Use Kubernetes namespaces to logically isolate deployments, such as lab and production.

- Use Azure DevOps pipelines to deploy, upgrade, and manage applications.

- Integrating health check services will improve reliability, uptime, and availability of the application.

- Kubernetes monitoring tools like Prometheus and Grafana should be used for analytics and data visualization.
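As a rough illustration of the horizontal pod autoscaling item above, the sketch below reproduces the scaling rule described in the Kubernetes documentation: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The function name, the replica bounds, and the sample numbers are assumptions for this example; the real autoscaler also applies stabilization windows and other safeguards that are not modeled here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Approximate the replica count a horizontal pod autoscaler would pick."""
    ratio = current_metric / target_metric
    # Small deviations from the target are ignored to avoid constant churn.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Four pods at 90% average CPU against a 60% target: scale out.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # Four pods at 30% average CPU against a 60% target: scale in.
    print(desired_replicas(4, current_metric=30, target_metric=60))
```

Working through the rule by hand like this can help when choosing target utilization and replica bounds for a test workload.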

How Spirent can help

Spirent Landslide CNF Validation is an automated solution that evaluates and validates the resiliency of cloud-native functions within 5G networks. It provides comprehensive testing capabilities to assess the robustness, fault tolerance, and performance of cloud-native functions in various real-world scenarios, enabling network operators and developers to ensure the reliability and stability of 5G deployments. Landslide CNF Validation supports testing of microservices and containerized applications, covering fault tolerance, scalability, and failover capabilities.

Landslide is designed to execute user-defined test scenarios that simulate real-world conditions and potential failures. These scenarios include network disruptions, infrastructure failures, and high traffic loads, allowing users to validate the resiliency of their cloud-native functions under different stress conditions. Concurrently, the solution collects cloud-native metrics to monitor the impact on the 5G CNF and the cloud-native infrastructure.

For more insight into cloud-native functions themselves, download our whitepaper, “How 5G CNFs are Designed to Fail.”