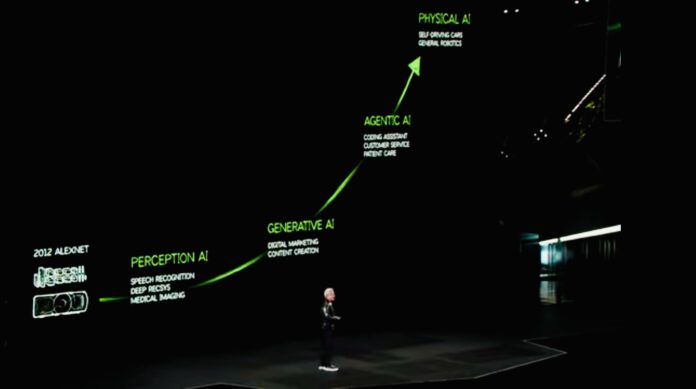

At CES, NVIDIA CEO Jensen Huang proposes a three-computer solution to the figurative three-body problem of physical AI: using a digital twin to connect and refine physical AI training and deployment.

Whether it’s an autonomous vehicle (AV), a highly digitized, lights-out manufacturing environment or a use case involving humanoid robotics, NVIDIA CEO Jensen Huang, speaking in a keynote during the Consumer Electronics Show (CES) earlier this week in Las Vegas, Nevada, sees it as a three-body problem with a three-computer solution.

First things first: The Three-Body Problem, from 2008, is the first book in a trilogy by Chinese author Liu Cixin. The titular “problem” is a physics classic: how do you calculate the trajectories of three mutually orbiting celestial bodies at a given point in time using Newtonian mechanics? In the novels, an alien race’s approach to solving the three-body problem sets off a multi-generational thriller that’s well worth the read. In Huang’s keynote, the three-body problem of training, deploying and continuously optimizing objects with autonomous mobility is addressed by a three-computer solution.

“Every robotics company will ultimately have to build three computers,” Huang said. “The robotics system could be a factory, the robotics system could be a car, it could be a robot. You need three fundamental computers. One computer, of course, to train the AI…Another, of course, when you’re done, to deploy the AI…that’s inside the car, in the robot, or in an [autonomous mobile robot]…These computers are at the edge and they’re autonomous. To connect the two, you need a digital twin…The digital twin is where the AI that has been trained goes to practice, to be refined, to do its synthetic data generation, reinforcement learning, AI feedback and such and such. And so it’s the digital twin of the AI.”

So, he continued, “These three computers are going to be working interactively. NVIDIA’s strategy for the industrial world, and we’ve been talking about this for some time, is this three-computer system. Instead of a three-body problem, we have a three-computer solution.”

Those three computers are: the NVIDIA DGX platform for AI training, including hardware, software and services; the NVIDIA AGX platform, essentially a computer to support computationally intensive edge AI inferencing; and a digital twin to connect the training and inferencing, which is NVIDIA Omniverse, a simulation platform made up of APIs, SDKs and services.

Here’s what’s new. At CES, Huang announced NVIDIA Cosmos, a world foundation model trained on 20 million hours of “dynamic physical things,” as the CEO put it. “Cosmos models ingest text, image or video prompts and generate virtual world states as videos. Cosmos generations prioritize the unique requirements of AV and robotics use cases, like real-world environments, lighting and object permanence.”

Huang continued: “Developers use NVIDIA Omniverse to build physics-based, geospatially accurate scenarios, then output Omniverse renders into Cosmos, which generates photoreal, physically-based synthetic data.” So DGX trains the physical AI, AGX runs edge inferencing for the physical AI, and the combo of Cosmos and Omniverse creates a loop between a digital twin and a physical AI model that devs “could have…generate multiple physically-based, physically-plausible scenarios of the future…Because this model understands the physical world…you could use this foundation model to train robots…The platform has an autoregressive model for real time applications, has diffusion model for a very high quality image generation…And a data pipeline so that if you would like to take all of this and then train it on your own data, this data pipeline, because there’s so much data involved, we’ve accelerated everything end to end for you.”

This idea of using a world foundation model, and other computing platforms, to give autonomous mobile systems the ability to operate effectively and naturally in the real world reminds me of a section from the book Out of Control by Kevin Kelly where he examines “prediction machinery.” One bit is based on a conversation with Doyne Farmer who, when the book was published, was focused on making and monetizing short-term financial market predictions.

From the book: “Farmer contends you have a model in your head of how baseballs fly. You could predict the trajectory of a high-fly using Newton’s classic equation of f=ma, but your brain doesn’t stock up on elementary physics equations. Rather, it builds a model directly from experiential data. A baseball player watches a thousand baseballs come off a bat, and a thousand times lifts his gloved hand, and a thousand times adjusts his guess with his mitt. Without knowing how, his brain gradually compiles a model of where the ball lands—a model almost as good as f=ma, but not as generalized.”

Kelly goes on to equate “prediction machinery” with “theory-making machinery—devices for generating abstractions and generalizations. Prediction machinery chews on the mess of seemingly random chicken-scratched data produced by complex and living things. If there is a sufficiently large stream of data over time, the device can discern a small bit of a pattern. Slowly the technology shapes an internal ad-hoc model of how the data might be produced…Once it has a general fit—a theory—it can make a prediction. In fact prediction is the whole point of theories.”
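To make Farmer's baseball point concrete, here is a rough Python sketch of my own, not anything from Kelly's book or from NVIDIA. It assumes an idealized fly ball (no air drag, level ground), so the f=ma answer reduces to the textbook range formula, and it uses a simple nearest-neighbor average as a stand-in for the player's experience. The function names and the speed and angle ranges are made up for illustration.

```python
import math
import random

G = 9.81  # gravitational acceleration, m/s^2


def newton_range(speed, angle_deg):
    """Landing distance from Newtonian mechanics (assumes no drag, level ground)."""
    return speed ** 2 * math.sin(2 * math.radians(angle_deg)) / G


def observe_hits(n, rng):
    """Simulate n watched fly balls as (speed, angle, landing distance) tuples."""
    hits = []
    for _ in range(n):
        speed = rng.uniform(20.0, 45.0)  # m/s, illustrative range
        angle = rng.uniform(20.0, 70.0)  # degrees, illustrative range
        hits.append((speed, angle, newton_range(speed, angle)))
    return hits


def learned_range(hits, speed, angle_deg, k=5):
    """Guess the landing spot by averaging the k most similar past hits."""
    nearest = sorted(
        hits, key=lambda h: (h[0] - speed) ** 2 + (h[1] - angle_deg) ** 2
    )[:k]
    return sum(h[2] for h in nearest) / k


rng = random.Random(0)
experience = observe_hits(1000, rng)  # "a thousand baseballs come off a bat"

# Score the learned model on fresh fly balls drawn from the same conditions.
errors = [
    abs(learned_range(experience, speed, angle) - actual)
    for speed, angle, actual in observe_hits(200, rng)
]
mean_error = sum(errors) / len(errors)
print(f"mean error of learned model: {mean_error:.2f} m")

# A ball hit harder than anything in the player's experience.
print(f"learned guess at 60 m/s: {learned_range(experience, 60.0, 45.0):.1f} m")
print(f"Newtonian answer at 60 m/s: {newton_range(60.0, 45.0):.1f} m")
```

Inside the range of balls it has seen, the experience-based guess should land close to the Newtonian answer. For the 60 m/s ball it can only fall back on the hardest hits it remembers, so it undershoots, which is the "not as generalized" caveat in miniature.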

It appears NVIDIA is combining cutting-edge, high-performance compute, AI, the new world foundation model and other bits of tech to essentially give robots the type of intuition that humans rely on. Systematizing that intuition (simulations and predictions) and making it reliably available at scale to the worlds of AVs, heavy industry and robotics could prove to be a breakthrough in the control of our physical world.