Introduction—economic revolution requires global systems, not point solutions

Editor’s note: This report is presented in partnership with Dell Technologies.

Research into artificial intelligence (AI) effectively began with Alan Turing in the early 1940s and progressed in fits and starts until the deep learning revolution of the 2010s. That era of research, driven first by academics and soon after by industry, propelled the field forward to the generative era we’re in today. As companies and countries open up their war chests and spend trillions of dollars to advance AI capabilities, the long-term goal is artificial general intelligence (AGI), but the short-term goal is incremental revenue gains that can fund the continued deployment of massive AI infrastructure projects.

The state of play in AI suggests that captains of industry believe both its short-term and long-term promise will eventually yield viable, sustainable business cases. Those business cases will need to add trillions of dollars in revenue and global GDP to justify the massive investment. The logic is essentially to deploy capital today because an economic revolution is coming. When that revolution will arrive, however, is a difficult question to answer.

In the 2022 book “Power and Prediction: The Disruptive Economics of Artificial Intelligence,” authors and economists Ajay Agrawal, Joshua Gans and Avi Goldfarb describe “the between times” wherein we’ve “witness[ed] the power of this technology and before its widespread adoption.” The distinction is around AI as a point solution, “a simple replacement of older machine-generated predictive analytics with new AI tools,” as opposed to AI as a system capable of fundamentally disrupting companies, economies and industries.

The authors rightly draw an analogy with electricity. Initially, electric power served as a replacement for steam power, which made it a point solution. Over time, electricity became more deeply embedded in manufacturing, leading to a physical overhaul of how factories were designed and operated (think the reconfigurable assembly line). That was a new system. “These systems changes altered the industrial landscape,” the authors wrote. “Only then did electrification finally show up in the productivity statistics, and in a big way.”

So how does AI go from point solution to system, and how do these new systems scale globally? Technology is obviously a key driver, but it’s important not to overlook the established fact that system-level changes include restructuring, both physically and from a skills perspective, how organizations conduct day-to-day business. Setting aside how the technology works, “The primary benefit of AI is that it decouples prediction from the organizational design via reimagin[ing] how decisions interrelate with one another.”

Using AI to automate decision-making systems will be truly disruptive, and the capital outlays from the likes of Google, OpenAI and others align with the belief that those systems will usher in an AI-powered economic revolution. But when and how AI has its electricity moment is an open debate. “Just as electricity’s true potential was only unleashed when the broader benefits of distributed power generation were understood and exploited, AI will only reach its true potential when its benefits in providing prediction can be fully leveraged,” Agrawal, Gans and Goldfarb wrote.

AI, they continued, “is not only about the technical challenge of collecting data, building models, and generating predictions, but also about the organizational challenge of enabling the right humans to make the right decisions at the right time…The true transformation will only come when innovators turn their attention to creating new system solutions.”

1 | AI infrastructure—setting the stage, understanding the opportunity

Key takeaways:

- AI infrastructure is an interdependent ecosystem—from data platforms to data centers and memory to models, AI infrastructure is a synergistic space rife with disruptors.

- AI is evolving rapidly—driven by advancements in hardware, access to huge datasets and continual algorithmic improvements, gen AI is advancing at a rapid pace.

- It all starts with the data—a typical AI workflow starts with data preparation, a vital step in ensuring the final product delivers accurate outputs at scale.

- AI is driving industry transformation and economic growth—in virtually every sector of the global economy, AI is enhancing efficiency, automating tasks and turning predictive analytics into improved decision-making.

The AI revolution is driving unprecedented investments in digital and physical infrastructure—spanning data, compute, networking, and software—to enable scalable, efficient, and transformative AI applications. As AI adoption accelerates, enterprises, governments, and industries are building AI infrastructure to unlock new levels of productivity, innovation, and economic growth.

Given the vast ecosystem of technologies involved, this report takes a holistic view of AI infrastructure by defining its key components and exploring its evolution, current impact, and future direction.

For the purposes of this report, AI infrastructure comprises six core domains:

- Data platforms – encompassing data integration, governance, management, and orchestration solutions that prepare and optimize datasets for AI training and inference. High-quality, well-structured data is essential for effective AI performance.

- AI models – the multi-modal, open and closed algorithmic frameworks that analyze data, recognize patterns, and generate outputs. These models range from traditional machine learning (ML) to state-of-the-art generative AI and large language models (LLMs).

- Data center hardware – includes high-performance computing (HPC) clusters, GPUs, TPUs, cooling systems, and energy-efficient designs that provide the computational power required for AI workloads.

- Networking – the fiber, Ethernet, wireless, and low-latency interconnects that transport massive datasets between distributed compute environments, from hyperscale cloud to edge AI deployments.

- Semiconductors – the specialized CPUs, GPUs, NPUs, TPUs, and custom AI accelerators that power deep learning, generative AI, and real-time inference across cloud and edge environments.

- Memory and storage – high-speed HBM (High Bandwidth Memory), DDR5, NVMe storage, and AI-optimized data systems that enable efficient retrieval and processing of AI model data at different stages—training, fine-tuning, and inference.

And while these domains are distinct, AI infrastructure is not a collection of isolated technologies but rather an interdependent ecosystem where each component plays a critical role in enabling scalable, efficient AI applications. Data platforms serve as the foundation, ensuring that AI models are trained on high-quality, well-structured datasets. These models require high-performance computing (HPC) architectures in data centers, equipped with AI-optimized semiconductors such as GPUs and TPUs to accelerate training and inference. However, processing power alone is not enough—fast, reliable networking is essential to move massive datasets between distributed compute environments, whether in cloud clusters, edge deployments, or on-device AI systems. Meanwhile, memory and storage solutions provide the high-speed access needed to handle the vast volumes of data required for real-time AI decision-making. Without a tightly integrated AI infrastructure stack, performance bottlenecks emerge, limiting scalability, efficiency, and real-world impact.

Together, these domains form the foundation of modern AI systems, supporting everything from enterprise automation and digital assistants to real-time generative AI and autonomous decision-making.

The evolution of AI—from early experiments to generative intelligence

AI is often described as a field of study focused on building computer systems that can perform tasks requiring human-like intelligence. While AI as a concept has been around since the 1950s, its early applications were largely limited to rule-based systems used in gaming and simple decision-making tasks.

A major shift came in the 1980s with machine learning (ML)—an approach to AI that uses statistical techniques to train models from observed data. Early ML models relied on human-defined classifiers and feature extractors, such as linear regression or bag-of-words techniques, which powered early AI applications like email spam filters.

But as the world became more digitized—with smartphones, webcams, social media, and IoT sensors flooding the world with data—AI faced a new challenge: how to extract useful insights from this massive, unstructured information.

This set the stage for the deep learning breakthroughs of the 2010s, fueled by three key factors:

- Advancements in hardware, particularly GPUs capable of accelerating AI workloads

- The availability of large datasets, critical for training powerful models

- Improvements in training algorithms, which enabled neural networks to automatically extract features from raw data

Today, we’re in the era of generative AI (gen AI) and large language models (LLMs), with AI systems that exhibit surprisingly human-like reasoning and creativity. Applications like chatbots, digital assistants, real-time translation, and AI-generated content have moved AI beyond automation and into a new phase of intelligent interaction.

The intricacies of deep learning—making the biological artificial

As Geoffrey Hinton, a pioneer of deep learning, put it: “I have always been convinced that the only way to get artificial intelligence to work is to do the computation in a way similar to the human brain. That is the goal I have been pursuing. We are making progress, though we still have lots to learn about how the brain actually works.”

Deep learning mimics human intelligence by using deep neural networks (DNNs). These networks are inspired by biological neurons, in which dendrites receive signals from other neurons, the cell body processes those signals, and the axon transmits information to the next neuron. Artificial neurons work similarly. Layers of artificial neurons process data hierarchically, enabling AI to perform image recognition, natural language processing, and speech recognition with human-like accuracy.
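The neuron analogy can be made concrete with a minimal sketch. The function names, the sigmoid activation and the hand-picked weights below are illustrative assumptions, not anything specified in this report; real networks learn their weights during training.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of inputs followed by an activation."""
    # "Dendrites": incoming signals, each scaled by a weight.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # "Cell body": squash the combined signal into the range (0, 1) with a sigmoid.
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is several neurons reading the same inputs.

    Its outputs are passed on to the next layer, playing the role of the axon.
    """
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Toy two-layer network with hand-picked (not learned) weights.
hidden = layer([0.5, -1.0], [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])
output = neuron(hidden, [1.5, -2.0], 0.2)
```

Stacking more such layers is what makes a network "deep": each layer works on the outputs of the one before it.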

For example, in image classification (e.g., distinguishing cats from dogs), a convolutional neural network (CNN) like AlexNet would be used. Unlike earlier ML techniques, deep learning does not require manual feature extraction—instead, it automatically learns patterns from data.

A typical AI workflow—from data to deployment

AI solution development isn’t a single-step process. It follows a structured workflow—also known as a machine learning or data science workflow—which ensures that AI projects are systematic, well-documented, and optimized for real-world applications.

NVIDIA laid out four fundamental steps in an AI workflow:

- Data preparation–every AI project starts with data. Raw data must be collected, cleaned, and pre-processed to make it suitable for training AI models. The datasets used in AI training can range from small structured tables to massive collections with billions of records. But size alone isn’t everything. NVIDIA emphasizes that data quality, diversity, and relevance are just as critical as dataset size.

- Model training–once data is prepared, it is fed into a machine learning or deep learning model to recognize patterns and relationships. Training an AI model requires mathematical algorithms to process data over multiple iterations, a step that is extremely computationally intensive.

- Model optimization–after training, the model needs to be fine-tuned and optimized for accuracy and efficiency. This is an iterative process, with adjustments made until the model meets performance benchmarks.

- Model deployment and inference–a trained model is deployed for inference, meaning it is used to make predictions, decisions, or generate outputs when exposed to new data. Inference is the core of AI applications, where a model’s ability to deliver real-time, meaningful insights defines its practical success.

This end-to-end workflow ensures the AI solution delivers accurate, real-time insights while being efficiently managed within an enterprise infrastructure.
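As a rough illustration of the four steps, the sketch below runs a toy version of the workflow end to end using only the Python standard library. Everything in it is an assumption made for illustration: the function names, the tiny dataset, the choice of a one-variable linear model, and the use of a simple learning-rate search as the "optimization" step. Production workflows use far larger datasets and dedicated frameworks.

```python
def prepare(raw):
    """Data preparation: drop incomplete rows and scale x into [0, 1]."""
    rows = [(x, y) for x, y in raw if x is not None and y is not None]
    x_max = max(x for x, _ in rows)
    return [(x / x_max, y) for x, y in rows], x_max

def train(rows, lr, epochs=2000):
    """Model training: fit y = w*x + b by gradient descent on mean squared error."""
    w = b = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in rows) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in rows) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def mse(model, rows):
    """Mean squared error of a (w, b) model on the prepared rows."""
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in rows) / len(rows)

def optimize(rows, learning_rates=(0.01, 0.1, 0.5)):
    """Model optimization: retrain with several settings and keep the best fit."""
    return min((train(rows, lr) for lr in learning_rates), key=lambda m: mse(m, rows))

def infer(model, x_max, x_new):
    """Deployment and inference: apply the training-time scaling, then predict."""
    w, b = model
    return w * (x_new / x_max) + b

raw = [(1, 3), (2, 5), (None, 4), (3, 7), (4, 9)]  # y = 2x + 1, plus one bad row
rows, x_max = prepare(raw)
model = optimize(rows)
prediction = infer(model, x_max, 5)  # close to 11 for this toy data
```

Note that inference reuses the scaling factor computed during data preparation; keeping pre-processing consistent between training and deployment is one reason the workflow is treated as a single pipeline.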

The transformational AI opportunity


“We are leveraging the capabilities of AI to perform intuitive tasks on a scale that is quite hard to imagine. And no industry can afford or wants to miss out on the huge advantage that predictive analytics offers.” That’s the message from NVIDIA co-founder and CEO Jensen Huang.

And based on the amount of money being poured into AI infrastructure, Huang is right. While it’s still early days, commercial AI solutions are already delivering tangible benefits, largely through automation of enterprise processes and workflows. NVIDIA calls out AI’s ability to enhance efficiency, decision-making and an organization’s capacity for innovation by extracting actionable insights from vast datasets.

The company gives high-level examples of AI use cases for specific functions and verticals:

- In call centers, AI can power virtual agents, extract data insights and conduct sentiment analysis.

- For retail businesses, AI vision systems can analyze traffic trends, customer counts and aisle occupancy.

- Manufacturers can use AI for product design, production optimization, enhanced quality control and reducing waste.

Here’s what that looks like in the real world:

- Mercedes-Benz is using AI-powered digital twins to simulate manufacturing facilities, reducing setup time by 50%.

- Deutsche Bank is embedding financial AI models (fin-formers) to detect early risk signals in transactions.

- Pharmaceutical R&D leverages AI to accelerate drug discovery, cutting down a 10-year, $2B process to months of AI-powered simulations.

The point is that AI is reshaping industries by enhancing efficiency, automating tasks, and unlocking insights from vast datasets. From cloud computing to data centers, workstations, and edge devices, it is driving digital transformation at every level. Across sectors like automotive, financial services, and healthcare, AI is enabling automation, predictive analytics, and more intelligent decision-making.

As AI evolves from traditional methods to machine learning, deep learning, and now generative AI, its capabilities continue to expand. Generative AI is particularly transformative, producing everything from text and images to music and video, demonstrating that AI is not only analytical but also creative. Underpinning these advancements is GPU acceleration, which has made deep learning breakthroughs possible and enabled real-time AI applications.

No longer just an experimental technology, AI is now a foundational tool shaping the way businesses operate and innovate. Many estimates attempt to capture the economic impact of AI, and almost all are in the trillions of dollars. McKinsey & Company analyzed 63 gen AI use cases and pegged the annual economic contribution at $2.6 trillion to $4.4 trillion.

Final thought: AI is no longer just a tool—it is becoming the backbone of digital transformation, driving industry-wide disruption and economic growth. But AI’s impact isn’t just about smarter models or faster chips—it’s about the interconnected infrastructure that enables scalable, efficient, and intelligent systems.

2 | The AI infrastructure boom and coming economic revolution

Key takeaways:

- AI infrastructure investment is skyrocketing—the push to AGI, as well as more near-term adoption, is leading to hundreds of billions of capital outlay from big tech.

- AI scaling laws are shaping AI infrastructure investment—given that AI system performance is governed by empirical scaling laws, along with emerging scaling laws, all signs point to the need for more AI infrastructure to improve AI outcomes and scale systems.

- Power, not just semiconductors, is a bottleneck—although the acute semiconductor shortage has eased (but not disappeared), a potentially bigger bottleneck to AI infrastructure operationalization is access to power.

- The AI revolution could mirror past economic transformations—historical precedents like the agricultural and industrial revolutions suggest that AI-driven economic productivity gains could lead to significant global economic growth, but only if infrastructure constraints can be managed effectively.

In the sweep of all things AI and attendant AI infrastructure investments, the goal, generally speaking, is two-fold: incrementally leverage AI to deliver monetizable, near-term capabilities that deliver clear value to consumers and enterprises, while simultaneously chipping away at achieving artificial general intelligence (AGI), an ill-defined set of capabilities that could arguably bring consequences that the world, collectively, may not be ready for. The two complementary paths demand massive capital investment in AI infrastructure—both digital and physical.

OpenAI CEO Sam Altman recently published a blog post that reiterated the company’s unequivocal commitment to “ensure that AGI…benefits all of humanity.” In exploring the path to AGI, Altman delineated “three observations about the economics of AI”:

- “The intelligence of an AI model roughly equals the log of the resources used to train and run it.”

- “The cost to use a given level of AI falls about 10x every 12 months, and lower prices lead to much more use.” This dynamic is often described as Jevons’ paradox.

- And “The socioeconomic value of linearly increasing intelligence is super-exponential in nature. A consequence of this is that we see no reason for exponentially increasing investment to stop in the near future.”

Here Altman is essentially highlighting the AI scaling laws—bigger datasets create bigger models, necessitating greater investment in AI infrastructure. This makes the case for companies and countries to continue spending hundreds of billions—if not trillions—on AI capabilities that, in turn, deliver outsized returns.
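Altman's first two observations can be turned into simple formulas. The sketch below is one possible reading, under stated assumptions: "intelligence" is treated as a unitless score equal to the base-10 logarithm of resources, and the cost decline is treated as a smooth exponential. The function names and the choice of logarithm base are hypothetical and are not taken from Altman's post.

```python
import math

def relative_cost(months, drop_per_year=10.0):
    """Cost of a fixed capability level after `months`, relative to today.

    Models "falls about 10x every 12 months" as a smooth exponential decline.
    """
    return drop_per_year ** (-months / 12.0)

def capability(resources):
    """Toy version of observation one: capability grows with log10 of resources."""
    return math.log10(resources)

def resources_for(capability_level):
    """Inverse of `capability`: each extra unit of capability needs 10x the resources."""
    return 10.0 ** capability_level

cost_after_two_years = relative_cost(24)              # 1/100 of today's cost
step_up = resources_for(4.0) / resources_for(3.0)     # 10x resources for +1 capability
```

Read together, the two formulas show the tension behind the investment case: each linear step up in capability needs a multiplicative increase in resources, while the cost of any fixed capability level keeps falling.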

The race toward AGI and the continued expansion of AI capabilities have led to a fundamental truth: bigger models require more data and more compute. This reality is governed by AI scaling laws—which explain why AI infrastructure spending is rising exponentially.

A closer look at AI scaling laws

Figure from “Scaling Laws for Neural Language Models”.

In January 2020, a team of OpenAI researchers led by Jared Kaplan, who later co-founded Anthropic, published a paper titled “Scaling Laws for Neural Language Models.” The researchers observed “precise power-law scalings for performance as a function of training time, context length, dataset size, model size and compute budget.” Essentially, the performance of an AI model improves as a function of increasing scale in model size, dataset size and compute power. While the commercial trajectory of AI has materially changed since 2020, the scaling laws continue to hold, and this has material implications for the AI infrastructure that underlies the model training and inference that users increasingly depend on.

Let’s break that down:

- Model size scaling shows that increasing the number of parameters in a model typically improves its ability to learn and generalize, assuming it’s trained on a sufficient amount of data. Improvements can plateau if dataset size and compute resources aren’t proportionately scaled.

- Dataset size scaling relates model performance to the quantity and quality of data used for training. The importance of dataset size can diminish if model size and compute resources aren’t proportionately scaled.

- Compute scaling basically means more compute (GPUs, servers, networking, memory, power, etc.) equates to improved model performance because training can go on for longer, speaking directly to the needed AI infrastructure.
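To give a feel for what a power law implies, the sketch below evaluates the model-size form of the relationship, L(N) = (N_c / N)^α. The constants are approximately those reported by Kaplan and colleagues for non-embedding parameters, but they are quoted here as assumptions and should be checked against the paper before being relied on; the function names are hypothetical.

```python
# Assumed constants for L(N) = (N_C / N) ** ALPHA_N; illustrative only.
ALPHA_N = 0.076
N_C = 8.8e13  # non-embedding parameter count at which the fitted loss equals 1

def loss_from_model_size(n_params):
    """Predicted loss when model size is the only limiting factor."""
    return (N_C / n_params) ** ALPHA_N

def loss_ratio(scale_factor):
    """Factor by which predicted loss changes when model size grows by `scale_factor`."""
    return scale_factor ** -ALPHA_N

doubling = loss_ratio(2)     # about 0.95: roughly 5% lower loss per doubling
tenfold = loss_ratio(10)     # about 0.84
```

With an exponent this small, each doubling of model size buys only a modest reduction in loss, which is one way to see why steady gains translate into very large infrastructure bills.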

In sum, a large model needs a large dataset to work effectively. Training on a large dataset requires significant investment in compute resources. Scaling one of these variables without the others can lead to process and outcome inefficiencies. It is important to note here the Chinchilla Scaling Hypothesis, developed by researchers at DeepMind and set out in the 2022 paper “Training Compute-Optimal Large Language Models,” which holds that, for a given compute budget, scaling model size and training data together can be more effective than simply building a bigger model.
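The trade-off described in the Chinchilla paper can be sketched with two widely used rules of thumb. Both are assumptions here rather than figures from this report: training compute is approximated as 6 FLOPs per parameter per token, and the compute-optimal ratio is taken as roughly 20 training tokens per parameter. The function name is hypothetical.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6.0  # assumed approximation: C = 6 * N * D
TOKENS_PER_PARAM = 20.0      # assumed rule of thumb for compute-optimal training

def compute_optimal(c_flops):
    """Split a training compute budget into model size N and token count D.

    Solves C = 6 * N * D together with D = 20 * N.
    """
    n_params = math.sqrt(c_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens

# A budget of about 5.9e23 FLOPs gives roughly 70 billion parameters
# and 1.4 trillion tokens under these assumptions.
n_params, n_tokens = compute_optimal(5.88e23)
```

Under these assumptions, quadrupling the compute budget doubles both the recommended model size and the recommended dataset size, rather than putting all of the extra budget into parameters.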

“I’m a big believer in scaling laws,” Microsoft CEO Satya Nadella said in a recent interview with Brad Gerstner and Bill Gurley. He said the company realized in 2017 “don’t bet against scaling laws but be grounded on exponentials of scaling laws becoming harder. As the [AI compute] clusters become harder, the distributed computing problem of doing large scale training becomes harder.” Looking at long-term capex associated with AI infrastructure deployment, Nadella said, “This is where being a hyperscaler I think is structurally super helpful. In some sense, we’ve been practicing this for a long time.” He said build out costs will normalize, “then it will be you just keep growing like the cloud has grown.”

Nadella explained in the interview that his current scaling constraints were no longer around access to the GPUs used to train AI models but, rather, the power needed to run the AI infrastructure used for training.

And two more AI scaling laws

Image courtesy of NVIDIA.

Beyond the three AI scaling laws outlined above, Huang is tracking two more that have “now emerged.” Those are the post-training scaling law and test-time scaling. Post-training scaling refers to a series of techniques used to improve AI model outcomes and make the systems more efficient. Some of the relevant techniques include:

- Fine-tuning adapts a model with domain-specific data, reducing the compute and data required compared to building a new model.

- Quantization reduces the numerical precision of model weights, making the model smaller and faster and cutting memory and compute needs while maintaining acceptable performance.

- Pruning removes unnecessary parameters from a trained model, making it more efficient with little or no loss in performance.

- Distillation essentially compresses knowledge from a large model to a small model while retaining most capabilities.

- Transfer learning re-uses a pre-trained model for related tasks meaning the new tasks require less data and compute.
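Two of the techniques above, quantization and pruning, are simple enough to sketch on a plain list of weights. This is a minimal illustration under assumptions: symmetric per-tensor int8 quantization and unstructured magnitude pruning are just one common variant of each technique, and the function names and weight values are made up for the example.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    scale = (max(abs(w) for w in weights) / 127.0) or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in q_weights]

def prune_by_magnitude(weights, fraction):
    """Zero out the smallest-magnitude `fraction` of weights (ties may prune more)."""
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    cutoff = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= cutoff else w for w in weights]

weights = [0.82, -0.03, 0.40, -1.27, 0.01, 0.66]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))  # at most scale / 2
pruned = prune_by_magnitude(weights, 0.5)  # keeps only the three largest weights
```

Each weight now fits in one byte instead of four, at the cost of a rounding error bounded by half the scale. Whether that error is acceptable depends on the model and the task, which is why these techniques are usually evaluated against an accuracy target.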

Huang likened post-training scaling to “having a mentor or having a coach give you feedback after you’re done going to school. And so you get tests, you get feedback, you improve yourself.” That said, “Post-training requires an enormous amount of computation, but the end result produces incredible models.”

The other emerging AI scaling law is test-time scaling, which refers to techniques applied after training and during inference to enhance performance and drive efficiency without retraining the model. Some of the core concepts here are:

- Dynamic model adjustment based on the input or system constraints to balance accuracy and efficiency on the fly.

- Ensembling at inference combines predictions from multiple models or model versions to improve accuracy.

- Input-specific scaling adjusts model behavior based on inputs at test-time to reduce unnecessary computation while retaining adaptability when more computation is needed.

- Quantization at inference reduces precision to speed up processing.

- Active test-time adaptation allows for model tuning in response to data inputs.

- Efficient batch processing groups inputs to maximize throughput and minimize computation overhead.

As Huang put it, test-time scaling is, “When you’re using the AI, the AI has the ability to now apply a different resource allocation. Instead of improving its parameters, now it’s focused on deciding how much computation to use to produce the answers it wants to produce.”
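One simple way to picture "deciding how much computation to use" is majority voting over repeated samples with an adaptive stopping rule: easy questions stop early, hard ones consume more of the budget. The sketch below is an illustration of that idea only and is not a description of how NVIDIA or any particular model implements test-time scaling. The function name, thresholds and canned answer streams are hypothetical stand-ins for a real model's sampled outputs.

```python
from collections import Counter

def answer_with_budget(sample_fn, min_samples=3, max_samples=15, agreement=0.7):
    """Draw candidate answers until one dominates the vote or the budget runs out.

    Returns the winning answer and the number of samples (compute) spent.
    """
    votes = Counter()
    best = None
    for used in range(1, max_samples + 1):
        votes[sample_fn()] += 1
        best, count = votes.most_common(1)[0]
        if used >= min_samples and count / used >= agreement:
            return best, used
    return best, max_samples

# Hypothetical stand-ins for a model's sampled answers.
easy_stream = iter(["42"] * 15)
hard_stream = iter(["7", "9", "7", "8", "7", "7", "7", "7",
                    "7", "7", "7", "7", "7", "7", "7"])

easy_answer, easy_cost = answer_with_budget(lambda: next(easy_stream))
hard_answer, hard_cost = answer_with_budget(lambda: next(hard_stream))
```

In this toy run the easy question settles after three samples, while the hard one needs seven before its leading answer clears the agreement threshold. The model's parameters never change; only the amount of inference compute does.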

Regardless, he said, whether it’s post-training or test-time scaling, “The amount of computation that we need, of course, is incredible…Intelligence, of course, is the most valuable asset that we have, and it can be applied to solve a lot of very challenging problems. And so, [the] scaling laws…[are] driving enormous demand for NVIDIA computing.”

The evolution of AI scaling laws—from the foundational trio identified by OpenAI to the more nuanced concepts of post-training and test-time scaling championed by NVIDIA—underscores the complexity and dynamism of modern AI. These laws not only guide researchers and practitioners in building better models but also drive the design of the AI infrastructure needed to sustain AI’s growth.

The implications are clear: as AI systems scale, so too must the supporting AI infrastructure. From the availability of compute resources and power to advancements in optimization techniques, the future of AI will depend on balancing innovation with sustainability. As Huang aptly noted, “Intelligence is the most valuable asset,” and scaling laws will remain the roadmap to harnessing it efficiently. The question isn’t just how large we can build models, but how intelligently we can deploy and adapt them to solve the world’s most pressing challenges.

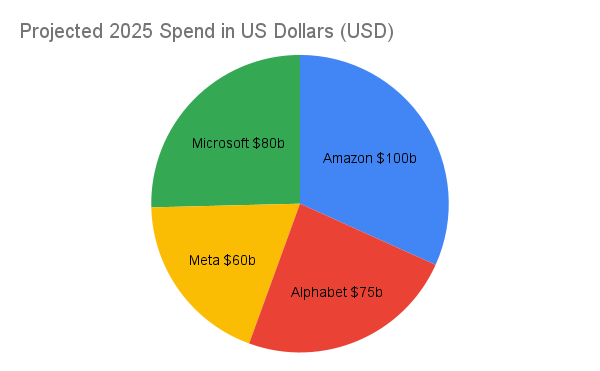

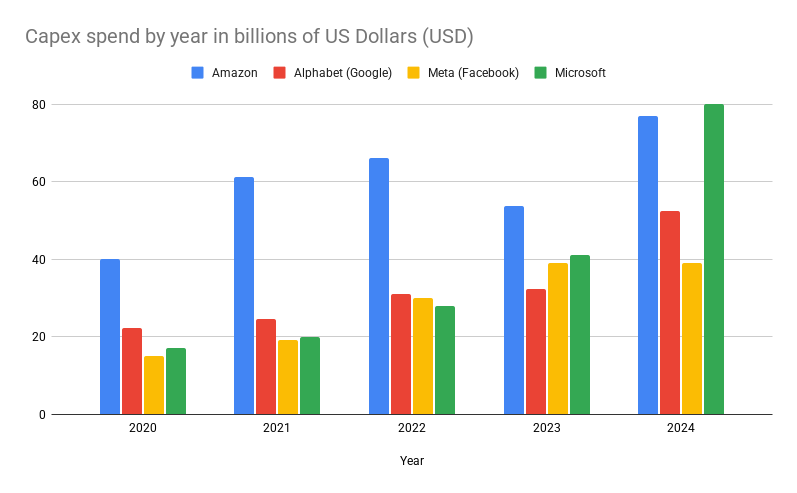

Alphabet, Amazon, Meta, Microsoft earmark $315 billion for AI infrastructure in 2025

Given that four of the biggest companies in the game reported 2025 capex guidance in the past few weeks, we can put a number to planned AI infrastructure investment. For the purposes of this analysis, we’ll limit capex guidance to Alphabet (Google), Amazon, Meta and Microsoft. In the coming year, Amazon plans to spend $100 billion, Alphabet guided for $75 billion, Meta plans to invest between $60 billion and $65 billion, and Microsoft expects to lay out $80 billion. Between just four U.S. tech giants, that’s $315 billion to $320 billion earmarked for AI infrastructure in 2025, depending on where Meta lands in its range. And this doesn’t include massive investments coming out of China or the OpenAI-led Project Stargate, which is committing to $500 billion over four years.
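The headline total can be checked with a few lines of arithmetic. The figures below are the guidance numbers quoted above, in billions of U.S. dollars; the variable names are illustrative.

```python
# 2025 capex guidance in billions of USD, as (low, high) ranges.
capex_2025_billion = {
    "Amazon": (100, 100),
    "Alphabet": (75, 75),
    "Meta": (60, 65),
    "Microsoft": (80, 80),
}

low_total = sum(low for low, _ in capex_2025_billion.values())
high_total = sum(high for _, high in capex_2025_billion.values())
```

The $315 billion figure corresponds to the low end of Meta's guided range; the high end brings the total to $320 billion.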

The animating concept here is that the rate of growth in economic productivity that the AI revolution could deliver has historical parallels. While these numbers are hard to peg, if the changes in the rate of growth of economic productivity after the Agricultural Revolution and after the Industrial Revolution are instructive, a successful AI revolution could lead to incredibly rapid economic growth. The level of AI investment today suggests that industry leaders expect a historic leap in economic productivity. But how much growth is possible? Here, history provides clues.

In his 2014 book Superintelligence, philosopher Nick Bostrom draws on a range of data to sketch out these revolutionary step changes in the rate of growth of economic productivity. In pre-history, he wrote, “growth was so slow that it took on the order of one million years for human productive capacity to increase sufficiently to sustain an additional 1 million individuals living at subsistence level.” After the Agricultural Revolution, around 5000 BCE, “the rate of growth had increased to the point where the same amount of growth took just two centuries. Today, following the Industrial Revolution, the world economy grows on average by that amount every 90 minutes.”

Drawing on modeling from economist Robin Hanson, Bostrom wrote that economic and population data suggest the “world economy doubling time” was 224,000 years for early hunter-gatherers. “For farming society, 909 years; and for industrial society, 6.3 years…If another such transition to a different growth mode were to occur, and it were of similar magnitude to the previous two, it would result in a new growth regime in which the world economy would double in size about every two weeks.” While such a scenario seems fantastical, and is constrained by AI infrastructure and power supply, even a fraction of that growth rate would be transformative beyond comprehension.
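The "two weeks" figure follows from the quoted doubling times with simple arithmetic. The sketch below computes how much each past transition shortened the doubling time and applies a speed-up of the same size to today's 6.3 years. It is a back-of-the-envelope check using only the numbers quoted above, not a reproduction of Hanson's model, and the variable names are illustrative.

```python
DAYS_PER_YEAR = 365.25

doubling_years = {"hunter-gatherer": 224_000, "farming": 909, "industrial": 6.3}

def annual_growth(doubling_time_years):
    """Constant annual growth rate implied by a given doubling time."""
    return 2 ** (1 / doubling_time_years) - 1

# How much each past transition shortened the doubling time.
speedup_farming = doubling_years["hunter-gatherer"] / doubling_years["farming"]  # about 246x
speedup_industrial = doubling_years["farming"] / doubling_years["industrial"]    # about 144x

# Apply a transition of similar magnitude to today's doubling time.
next_doubling_days = sorted(
    doubling_years["industrial"] * DAYS_PER_YEAR / s
    for s in (speedup_farming, speedup_industrial)
)
industrial_growth = annual_growth(doubling_years["industrial"])  # about 11.6% per year
```

A speed-up in the range of the previous two transitions puts the next doubling time at roughly 9 to 16 days, which is consistent with the "about every two weeks" in the quote.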

Given the planned investments from hyperscalers, it’s clear that they buy the narrative and see the return on invested capital (ROIC). This runs contrary to the emerging discourse that we’re in an AI infrastructure investment bubble that will inevitably burst, leaving someone holding the bag. To wit: unlike past speculative bubbles, AI infrastructure spending is directly tied to scaling laws and immediate enterprise demand and adoption, much like cloud computing investments in the 2010s that ultimately proved prescient.

Reporting its fourth quarter and full-year 2024 financials on Feb. 4, Google-parent Alphabet CEO Sundar Pichai highlighted the company’s “differentiated full-stack approach to AI innovation” of which its AI infrastructure is a crucial piece.

“Our sophisticated global network of cloud regions and datacenters provides a powerful foundation for us and our customers, directly driving revenue,” he said, adding that in 2024 Google “broke ground” on 11 new cloud regions and datacenter campuses. “Our leading infrastructure is also among the world’s most efficient. Google datacenters deliver nearly four times more computing power per unit of electricity compared to just five years ago. These efficiencies, coupled with the scalability, cost and performance we offer are why organizations increasingly choose Google Cloud’s platform.”

He continued: “I think a lot of it is our strength of the full stack development, end-to-end optimization, our obsession with cost per query…If you look at the trajectory over the past three years, the proportion of spend toward inference compared to training has been increasing which is good because, obviously, inferences to support businesses with good ROIC…I think that trend is good.”

In sum, Pichai said, “I think part of the reason we are so excited about the AI opportunity is, we know we can drive extraordinary use cases because the cost of actually using it is going to keep coming down, which will make more use cases feasible. And that’s the opportunity space. It’s as big as it comes, and that’s why you’re seeing us invest to meet that moment.”

Amazon is working to further capitalize on what CEO Andy Jassy called a “really unusually large, maybe once-in-a-lifetime type of opportunity,” during an earnings call on Feb. 6. In a separate call with investors, Jassy pegged Q4 capex at $26.3 billion and said, “I think that is reasonably representative of what you expect [of] an annualized capex rate in 2025…The vast majority of that capex spend is on AI for AWS,” according to reporting from CNBC.

Discussing how Amazon Web Services (AWS) is delivering AI-enabled services, along with the underlying AI infrastructure down to homegrown custom silicon for model training and inference, Jassy said, “AWS’s AI business is a multi-billion dollar revenue run rate business that continues to grow at a triple-digit year-over-year percentage, and is growing more than three times faster at this stage of its evolution as AWS itself grew. And we felt like AWS grew pretty quickly.”

Asked about demand for AI services as compared to AWS’s capacity to deliver AI services, Jassy said he believes AWS has “more demand that we could fulfill if we had even more capacity today. I think pretty much everyone today has less capacity than they have demand for, and it’s really primarily chips that are the area where companies could use more supply.”

“I actually believe that the rate of growth there has a chance to improve over time as we have bigger and bigger capacity,” Jassy said.

Reporting Q4 2024 results on Jan. 29, Meta CEO Mark Zuckerberg highlighted adoption of the Meta AI personal assistant, continued development of its Llama 4 LLM, and ongoing investments in AI infrastructure. “These are all big investments,” he said. “Especially the hundreds of billions of dollars that we will invest in AI infrastructure over the long term. I announced last week that we expect to bring online almost 1 [Gigawatt] of capacity this year, and we’re building a 2 [Gigawatt] and potentially bigger AI datacenter that is so big that it’ll cover a significant part of Manhattan if it were placed there. We’re planning to fund all this by at the same time investing aggressively in initiatives that use these AI advances to increase revenue growth.”

CFO Susan Li said Q4 capex came in at $14.8 billion “driven by investments in servers, datacenters and network infrastructure. We’re working to meet the growing capacity needs for these services by both scaling our infrastructure footprint and increasing the efficiency of our workloads. Another way we’re pursuing efficiencies is by extending the useful lives of our servers and associated networking equipment. Our expectation going forward is that we’ll be able to use both our non-AI and AI servers for a longer period of time before replacing them, which we estimate will be approximately five and a half years.” She said 2025 capex will be between $60 billion and $65 billion “driven by increased investment to support both our generative AI efforts and our core business.”

And for Microsoft, Nadella said, also on a Jan. 29 earnings call, that enterprises “are beginning to move from proof-of-concepts to enterprise-wide deployments to unlock the full ROI of AI.” The company’s AI business passed an annual revenue run-rate of $13 billion, up 175% year-over-year, in Q2 of fiscal year 2025.

Nadella talked through the “core thesis behind our approach to how we manage our fleet, and how we allocate our capital to compute. AI scaling laws are continuing to compound across both pre-training and inference-time compute. We ourselves have been seeing significant efficiency gains in both training and inference for years now. On inference, we have typically seen more than 2X price-performance gain for every hardware generation, and more than 10X for every model generation due to software optimizations.”

He continued: “And, as AI becomes more efficient and accessible, we will see exponentially more demand. Therefore, much as we have done with the commercial cloud, we are focused on continuously scaling our fleet globally and maintaining the right balance across training and inference, as well as geo distribution. From now on, it is a more continuous cycle governed by both revenue growth and capability growth, thanks to the compounding effects of software-driven AI scaling laws and Moore’s law.”

On datacenter investment, Nadella said Microsoft’s Azure cloud is the “infrastructure layer for AI. We continue to expand our datacenter capacity in line with both near-term and long-term demand signals. We have more than doubled our overall datacenter capacity in the last three years. And we have added more capacity last year than any other year in our history. Our datacenters, networks, racks and silicon are all coming together as a complete system to drive new efficiencies to power both the cloud workloads of today and the next-gen AI workloads.”

Back to Altman: “Ensuring that the benefits of AGI are broadly distributed is critical. The historical impact of technological progress suggests that most of the metrics we care about (health outcomes, economic prosperity, etc.) get better on average and over the long-term, but increasing equality does not seem technologically determined and getting this right may require new ideas.”

Final thought: The scale of investment signals that major players aren’t just chasing a speculative future—they’re betting on AI as the next fundamental driver of economic transformation. The next decade will reveal whether AI’s infrastructure backbone can match the ambitions fueling its expansion.

3 | Can data center deployment rise to meet the AI opportunity?

Key takeaways:

- AI is transforming data center investment and strategy—for AI to deliver on its promise of economic revolution, massive, multi-trillion-dollar investments are needed to modernize existing data centers for AI and to build new data centers specifically for AI.

- Every data center is becoming an AI data center—AFCOM found that 80% of data center operators are planning major capacity increases to keep pace with current and future demand for AI computing.

- AI-based power demand is reshaping energy strategies—data center electricity consumption is expected to double in the coming years, driving a shift toward efficient hardware, optimized power usage, AI-driven energy management and other techniques aimed at ensuring the expansion of AI infrastructure doesn’t come with a proportional increase in energy consumption.

- There’s a new hyperscaler coming to town—Project Stargate is a $500 billion bet on AI infrastructure leadership, positioning SoftBank, MGX, OpenAI, and Oracle as a new hyperscaler, with 100,000-GPU clusters and 10+ data centers planned in Texas to secure U.S. dominance in AI.

Before looking ahead to what AI means for how data centers are designed, deployed, operated and powered, let’s look back to less than a year ago when ChatGPT had made its impact, but before the most valuable companies in the world allocated hundreds of billions in capital to build out the data center and complementary infrastructure needed for the AI revolution.

It was Dell Technologies World 2024, held annually in May. Company founder and CEO Michael Dell took the stage and reflected on factories of the past, where the process was based on turning a mill using wind or water, then using electricity to turn the wheel and then, eventually, bringing electricity deeper into the process to automate tasks with purpose-built machines: essentially, the evolution from a point solution to a system. “That’s kind of where we are now with AI,” Dell said. But he cautioned against the temptation to use AI to simply turn the figurative wheel; rather, the goal should be to reinvent the organization with AI at its center. “It’s a generational opportunity for productivity and growth.”

Joined onstage by NVIDIA’s Huang, the pair discussed the approximately $1 trillion in data center modernization, and orders of magnitude more than that—the number $100 trillion was thrown out—in net new data center capacity that needs to be built to support the demands of AI. Last words from Dell: “The real question isn’t how big AI is going to be, but how much good is AI going to do? How much good can AI do for you?…Reinventi[ng] and reimagining your organization is hard. It feels risky, even frightening. But the bigger risk, and what’s even more frightening, is what happens if you don’t do it.”

In the less than a year since Dell and NVIDIA made the case for immediate, significant investments into AI infrastructure, the message (which has been repeated over and over and over in the interim) was heard loud and clear by data center owners and operators. In January this year, AFCOM, a professional association for data center and IT infrastructure professionals, released its annual “State of the Data Center” report. Jumping straight to the conclusion, AFCOM found that, “Every data center is becoming an AI data center.”

Based on a survey of data center operators, AFCOM found that 80% of respondents expect “significant increases in capacity requirements due to AI workloads.” Nearly 65% said they are actively deploying AI-capable solutions. Rack density has more than doubled from 2021 to present, with nearly 80% of respondents expecting rack density will be pushed further due to AI and high-performance workloads. This means that data center managers are looking to improve air flow, adopt liquid cooling and also use new sensors for monitoring.

On the power front, AFCOM found that more than 100 megawatts of new data center construction has been added every single month since late 2021; in fact, the US colocation data center market has more than doubled in size in the past four years with record low vacancy rates and rents steadily increasing. Solar energy is seen as the key renewable energy source for data centers, according to 55% of respondents, while interest in nuclear power is also on the rise.

“The data center has evolved from a supporting player to the cornerstone of digital transformation. It’s the engine behind the most innovative solutions shaping our world today,” said Bill Kleyman, who is CEO and co-founder of Apolo.us and program chair for AFCOM’s Data Center World event. “This is a time to embrace bold experimentation and collective innovation, push boundaries, adopt transformative technologies and focus on sustainability to prepare for what lies ahead.”

Kleyman also said: “In the past year, our relationship with data has shifted profoundly. This isn’t just another tech trend; it’s a foundational change in how humans engage with information.”

The energy demands of AI infrastructure

The rapid growth of AI and the investments fueling its expansion have significantly intensified the power demands of data centers. Globally, data centers consumed an estimated 240–340 TWh of electricity in 2022—approximately 1% to 1.3% of global electricity use, according to the International Energy Agency (IEA). In the early 2010s, data center energy footprints grew at a relatively moderate pace, thanks to efficiency gains and the shift toward hyperscale facilities, which are more efficient than smaller server rooms.

That stable growth pattern has given way to explosive demand. The IEA projects that global data center electricity consumption could double between 2022 and 2026. Similarly, IDC forecasts that surging AI workloads will drive a massive increase in data center capacity and power usage, with global electricity consumption from data centers projected to double to 857 TWh between 2023 and 2028. AI-specific infrastructure is at the core of this growth, with IDC estimating that AI data center capacity will expand at a 40.5% CAGR through 2027.
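To put the two doubling forecasts above on a common footing, the short Python sketch below converts each into an implied compound annual growth rate. The helper name `implied_cagr` is illustrative, and the calculation assumes smooth year-over-year compounding, which neither the IEA nor IDC necessarily claims.

```python
def implied_cagr(growth_multiple: float, years: int) -> float:
    """Annual growth rate that compounds to `growth_multiple` over `years`."""
    if years <= 0 or growth_multiple <= 0:
        raise ValueError("years and growth_multiple must be positive")
    return growth_multiple ** (1.0 / years) - 1.0


# IEA: consumption could double between 2022 and 2026 (four years).
iea_rate = implied_cagr(2.0, 2026 - 2022)

# IDC: consumption projected to double to 857 TWh between 2023 and 2028 (five years).
idc_rate = implied_cagr(2.0, 2028 - 2023)

# If 857 TWh is exactly double the starting point, the implied 2023 baseline is:
idc_baseline_twh = 857 / 2.0

print(f"IEA implied annual growth: {iea_rate:.1%}")
print(f"IDC implied annual growth: {idc_rate:.1%}")
print(f"IDC implied 2023 baseline: {idc_baseline_twh:.1f} TWh")
```

Under these assumptions the IEA scenario works out to roughly 19% growth per year and the IDC scenario to roughly 15% per year.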

Given the massive energy requirements of today’s data centers and the even greater demands of AI-focused infrastructure, stakeholders must implement strategies to improve energy efficiency. This is not only essential for sustaining AI’s growth but also aligns with the net-zero targets set by many companies within the AI infrastructure ecosystem. Key strategic pillars include:

- Upgrading to more efficient hardware and architectures—generational improvements in semiconductors, servers, and power management components deliver incremental gains in energy efficiency. Optimizing workload scheduling can also maximize server utilization, ensuring fewer machines handle more work and minimizing idle power consumption.

- Optimizing Power Usage Effectiveness (PUE)—PUE, the ratio of a facility’s total energy use to the energy delivered to its IT equipment, is a key metric for assessing data center efficiency; it captures the overhead from cooling, power distribution, airflow, and other factors. Hyperscalers like Google have reduced PUE by implementing hot/cold aisle containment, raising temperature setpoints, and investing in energy-efficient uninterruptible power supply (UPS) systems.

- AI-driven management and analytics—AI itself can help mitigate the energy challenges it creates. AI-driven real-time monitoring and automation can dynamically adjust cooling, power distribution, and resource allocation to optimize efficiency.
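As a rough illustration of the PUE pillar above, the sketch below computes PUE as total facility energy divided by IT equipment energy and estimates the overhead saved when PUE improves. The function names and the energy figures are hypothetical and chosen only to make the arithmetic easy to follow; they do not describe any particular operator.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_saved_kwh(it_equipment_kwh: float,
                       pue_before: float,
                       pue_after: float) -> float:
    """Non-IT energy (cooling, power distribution, etc.) saved for the same IT load."""
    return it_equipment_kwh * (pue_before - pue_after)


# Hypothetical facility: 1,000 kWh of IT load, 1,500 kWh drawn in total.
current_pue = pue(total_facility_kwh=1500.0, it_equipment_kwh=1000.0)

# Hypothetical improvement from a PUE of 1.5 to 1.2 at the same IT load.
saved = overhead_saved_kwh(1000.0, pue_before=1.5, pue_after=1.2)

print(f"Current PUE: {current_pue:.2f}")
print(f"Overhead saved: {saved:.0f} kWh")
```

A PUE of 1.0 would mean every kilowatt-hour goes to IT equipment, so the closer the value gets to 1.0, the less energy is spent on overhead.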

One of the primary goals is to scale AI infrastructure globally without a proportional increase in energy consumption. Achieving this requires a combination of investment in modern hardware, advancements in facility design, and AI-enhanced operational efficiency.

Liquid cooling and data center design

As rack densities increase to support power-hungry GPUs and other AI accelerators, cooling is becoming a critical challenge. The need for more advanced cooling solutions is not only shaping vendor competition but also influencing data center site selection, as operators consider climate conditions when designing new facilities.

While liquid cooling is not new, the rise of AI has intensified interest in direct-to-chip cold plates and immersion cooling—technologies that remove heat far more efficiently than air cooling. In direct-to-chip cooling, liquid circulates through cold plates attached to AI semiconductors, while immersion cooling submerges entire servers in a cooling fluid. Geography also plays a role, with some operators leveraging free cooling, which uses ambient air or evaporative cooling towers to dissipate heat with minimal energy consumption.

While liquid and free cooling require higher upfront capital investments than traditional air cooling, the long-term benefits are substantial—including lower operational costs, improved energy efficiency, and the ability to support next-generation AI workloads.

New AI-focused data centers are being designed from the ground up to accommodate higher-density computing and advanced cooling solutions. Key design adaptations include:

- Lower rack density per room and increased spacing to optimize airflow and liquid cooling efficiency.

- Enhanced floor loading capacity to support liquid cooling equipment and accommodate heavier infrastructure.

- Higher-voltage DC power systems to improve energy efficiency and integrate with advanced battery storage solutions.

Taken together, liquid cooling and data center design innovations will be crucial for scaling AI infrastructure without compromising energy efficiency or sustainability.

The Stargate Project—meet the newest hyperscaler

Image courtesy of OpenAI.

As detailed in the previous section, major global tech companies are poised to invest hundreds of billions into AI infrastructure in 2025, but the $500 billion Stargate Project represents an unprecedented mobilization of capital. Framed as a strategic, long-term investment in U.S. technological leadership, Project Stargate aims to position the country at the forefront of the race toward AGI.

The lead investors in Stargate Project are Japan’s SoftBank and UAE-based investment firm MGX. OpenAI, which will be the anchor tenant of the planned data centers, and Oracle are also throwing in equity, although likely at a lower level than SoftBank and MGX. SoftBank is tasked with running things on the financial side while OpenAI will be the lead operational partner. The $500 billion in capital is planned to be deployed over a four-year period with $100 billion coming this year.

The project’s proponents frame the spending as a strategic long-term bet. The return on investment is envisioned more in geopolitical and economic terms than immediate profit. SoftBank’s Chairman and CEO Masayoshi Son called it “the beginning of our Golden Age,” indicating confidence that this will unlock new industries and enormous economic value. OpenAI said in an announcement that the Stargate Project “will secure American leadership in AI, create hundreds of thousands of American jobs, and generate massive economic benefits for the entire world.”

OpenAI specifically called out its ambitions to leverage the new AI infrastructure investment to develop AGI “for the benefit of all of humanity. We believe that this new step is critical on the path, and will enable creative people to figure out how to use AI to elevate humanity.”

In addition to the equity partners, Stargate Project has named several technology partners, including Arm (which is owned by SoftBank), Microsoft (an OpenAI investor and currently its primary provider of data center capacity), NVIDIA and Oracle. Beyond its role as an equity partner, Oracle has been building data centers in Texas that are being folded into the first phase. Alongside its cloud deal with Microsoft, OpenAI also has existing arrangements with Oracle for GPU capacity.

Oracle’s pre-existing data center expansion in Texas has now become a core initial site for Project Stargate. Before the project’s announcement, Oracle had already begun deploying a 100,000-GPU cluster at its Abilene, Texas campus. Oracle co-founder and CTO Larry Ellison has stated that the company is building 10 data centers in Texas, each 500,000 square feet, with plans to scale to 20 more locations in future phases of Project Stargate.

As Stargate’s first phase accelerates, with more details on future phases expected, one thing is already evident—this initiative effectively introduces a new hyperscaler into the AI infrastructure landscape. With unprecedented capital deployment, strategic partnerships, and a strong national security narrative, Stargate Project is poised to reshape the competitive dynamics of AI infrastructure and reinforce U.S. dominance in the race toward AGI.

Final thought: AI is prompting a historic overhaul of data center infrastructure, but it’s still early innings. The question isn’t whether AI data centers will dominate, but who will control this next-generation infrastructure and how they will balance innovation with sustainability.

4 | The convergence of test-time inference scaling and edge AI

Key takeaways:

- Test-time inference scaling is crucial for AI efficiency—as AI execution shifts from centralized clouds to distributed edges, adjusting compute resources during inference can improve efficiency, latency and performance.

- Edge AI is transforming AI deployment—running inference locally, either on-device or at the edge, delivers benefits to the end user while improving system-level economics.

- The “memory wall” is a potential bottleneck—AI chips can process data faster than memory systems can deliver it, and addressing this limitation to make edge AI practical is a priority.

- Agentic AI will unlock real-time decision-making at the edge—AI agents running locally will autonomously turn data into decisions while continuously learning. Agentic AI at the edge will help enterprise AI systems dynamically adjust to new inputs without relying on expensive, time-consuming model re-training cycles.

As AI shifts from centralized clouds to distributed edge environments, the challenge is no longer just model training—it’s scaling inference efficiently. Test-time inference scaling is emerging as a critical enabler of real-time AI execution, allowing AI models to dynamically adjust compute resources at inference time based on task complexity, latency needs, and available hardware. This shift is fueling the rapid rise of edge AI, where models run locally on devices instead of relying on cloud data centers—improving speed, privacy, and cost-efficiency.

Recent discussions have highlighted the importance of test-time scaling wherein AI systems can allocate resources dynamically, breaking down problems into multiple steps and evaluating various responses to enhance performance. This approach is proving to be incredibly effective.
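One simple way to picture test-time scaling is best-of-N sampling: generate several candidate answers, score them, and keep the best, with N chosen per request. The Python sketch below is a toy under stated assumptions, not a description of any vendor’s method. The names `pick_sample_budget`, `best_of_n`, `toy_generate` and `toy_score` are hypothetical, and the “model” is a seeded random number generator standing in for a real one.

```python
import random
from typing import Callable


def pick_sample_budget(difficulty: float, latency_budget_ms: float,
                       ms_per_sample: float, max_samples: int = 16) -> int:
    """Choose how many candidates to generate for one request.

    Harder tasks ask for more samples; the latency budget caps what is affordable.
    """
    difficulty = min(max(difficulty, 0.0), 1.0)  # clamp to [0, 1]
    wanted = 1 + int(difficulty * (max_samples - 1))
    affordable = max(1, int(latency_budget_ms // ms_per_sample))
    return min(wanted, affordable)


def best_of_n(generate: Callable[[str, int], float],
              score: Callable[[float], float],
              prompt: str, n: int) -> float:
    """Generate n candidates and return the one with the highest score."""
    candidates = [generate(prompt, i) for i in range(n)]
    return max(candidates, key=score)


# Toy stand-ins (assumptions): the "model" guesses a number, the "verifier"
# rewards guesses close to a hidden target.
TARGET = 42.0


def toy_generate(prompt: str, sample_index: int) -> float:
    rng = random.Random(sample_index)  # deterministic per sample; prompt is ignored
    return rng.uniform(0.0, 100.0)


def toy_score(answer: float) -> float:
    return -abs(answer - TARGET)


easy_n = pick_sample_budget(difficulty=0.0, latency_budget_ms=1000.0, ms_per_sample=50.0)
hard_n = pick_sample_budget(difficulty=1.0, latency_budget_ms=1000.0, ms_per_sample=50.0)
tight_n = pick_sample_budget(difficulty=1.0, latency_budget_ms=200.0, ms_per_sample=50.0)

easy_answer = best_of_n(toy_generate, toy_score, "toy prompt", easy_n)
hard_answer = best_of_n(toy_generate, toy_score, "toy prompt", hard_n)
print(easy_n, hard_n, tight_n)
print(toy_score(easy_answer), toy_score(hard_answer))
```

Because the larger sample set here always contains the smaller one, spending more compute can only match or improve the best score. The same hard task gets fewer samples when the latency budget is tight, which is the trade-off that matters on constrained edge hardware.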

The emergence of test-time scaling techniques has significant implications for AI infrastructure, especially concerning edge AI. Edge AI involves processing data and running AI models locally on devices or near the data source, rather than relying solely on centralized clouds. This approach offers several advantages:

- Reduced latency: Processing data closer to its source minimizes the time required for data transmission, enabling faster decision-making.

- Improved privacy: Local data processing reduces the need to transmit sensitive information to centralized servers, enhancing data privacy and security.

- Bandwidth efficiency: By handling data locally, edge AI reduces the demand on network bandwidth, which is particularly beneficial in environments with limited connectivity. And, of course, reducing network utilization for data transport has a direct line to cost.

And industry focus on edge AI is gaining traction. Qualcomm, for instance, has been discussing the topic for more than two years, and sees edge AI as a significant growth area. In a recent earnings call, Qualcomm CEO Cristiano Amon highlighted the increasing demand for AI inference at the edge, viewing it as a “tailwind” for their business.

“The era of AI inference”

Amon recently told CNBC’s Jon Fortt, “We started talking about AI on the edge, or on devices, before it was popular.” Digging in further on an earnings call, he said, “Our advanced connectivity, computing and edge AI technologies and product portfolio continue to be highly differentiated and increasingly relevant to a broad range of industries…We also remain very optimistic about the growing edge AI opportunity across our business, particularly as we see the next cycle of AI innovation and scale.”

Amon dubbed that next cycle “the era of AI inference.” Beyond test-time inference scaling, this also aligns with other trends around model size reduction allowing them to run locally on devices like handsets and PCs. “We expect that while training will continue in the cloud, inference will run increasingly on devices, making AI more accessible, customizable and efficient,” Amon said. “This will encourage the development of more targeted, purpose-oriented models and applications, which we anticipate will drive increased adoption, and in turn, demand for Qualcomm platforms across a range of devices.”

Bottom line, he said, “We’re well-positioned to drive this transition and benefit from this upcoming inflection point.” Expanding on that point in conversation with Fortt, Amon said the shifting AI landscape is “a great tailwind for business and kind of materializes what we’ve been preparing for, which is designing chips that can run those models at the edge.”

Intel, which is struggling to differentiate its AI value proposition and is turning focus to development of “rack-scale” solutions for AI datacenters, also sees edge AI as an emerging opportunity. Co-CEO Michelle Johnston Holthaus talked it out during a quarterly earnings call. AI “is an attractive market for us over time, but I am not happy with where we are today,” she said. “On the one hand, we have a leading position as the host CPU for AI servers, and we continue to see a significant opportunity for CPU-based inference on-prem and at the edge as AI-infused applications proliferate. On the other hand, we’re not yet participating in the cloud-based AI datacenter market in a meaningful way.”

She continued: “AI is not a market in the traditional sense. It’s an enabling application that needs to span across the compute continuum from the datacenter to the edge. As such, a one-size-fits-all approach will not work, and I can see clear opportunities to leverage our core assets in new ways to drive the most compelling total cost of ownership across the continuum.”

Holthaus’s and Intel’s view of edge AI inference as a growth area predates her tenure as co-CEO. Former CEO Pat Gelsinger, speaking at a CES keynote in 2024, made the case in the context of AI PCs and laid out the three laws of edge computing. “First is the laws of economics,” he said at the time. “It’s cheaper to do it on your device…I’m not renting cloud servers…Second is the laws of physics. If I have to round-trip the data to the cloud and back, it’s not going to be as responsive as I can do locally…And third is the laws of the land. Am I going to take my data to the cloud or am I going to keep it on my local device?”
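Gelsinger’s three laws can be read as a placement policy for each inference request. The Python sketch below is one hypothetical way to encode them, with data residency (“laws of the land”) treated as a hard constraint, latency (“laws of physics”) checked next, and cost (“laws of economics”) used as the tie-breaker. That ordering, the class names and every field are assumptions made for illustration; they are not drawn from Intel.

```python
from dataclasses import dataclass


@dataclass
class InferenceRequest:
    latency_budget_ms: float
    data_must_stay_local: bool  # "laws of the land"


@dataclass
class Environment:
    model_fits_on_device: bool
    device_latency_ms: float
    cloud_round_trip_ms: float  # network round trip plus cloud inference
    device_cost_per_call: float
    cloud_cost_per_call: float


def choose_placement(req: InferenceRequest, env: Environment) -> str:
    """Return "device" or "cloud" for a single inference request."""
    # Laws of the land: residency rules are a hard constraint.
    if req.data_must_stay_local:
        if not env.model_fits_on_device:
            raise ValueError("data must stay local but the model does not fit on the device")
        return "device"
    if not env.model_fits_on_device:
        return "cloud"

    # Laws of physics: prefer whichever side meets the latency budget.
    device_ok = env.device_latency_ms <= req.latency_budget_ms
    cloud_ok = env.cloud_round_trip_ms <= req.latency_budget_ms
    if device_ok != cloud_ok:
        return "device" if device_ok else "cloud"

    # Laws of economics: otherwise pick the cheaper option.
    if env.device_cost_per_call <= env.cloud_cost_per_call:
        return "device"
    return "cloud"


env = Environment(model_fits_on_device=True, device_latency_ms=40.0,
                  cloud_round_trip_ms=180.0, device_cost_per_call=0.0,
                  cloud_cost_per_call=0.002)
interactive = choose_placement(InferenceRequest(100.0, False), env)
print(interactive)
```

In a real hybrid deployment these inputs would be measured rather than hard-coded, and the policy would likely weigh model quality as well.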

Post-Intel, Gelsinger has continued this area of focus with an investment in U.K.-based startup Fractile, which builds AI hardware that performs inference in memory rather than moving model weights from memory to a processor. Writing on LinkedIn about the investment, Gelsinger said, “Inference of frontier AI models is bottlenecked by hardware. Even before test-time compute scaling, cost and latency were huge challenges for large-scale LLM deployments. With the advent of reasoning models, which require memory-bound generation of thousands of output tokens, the limitations of existing hardware roadmaps [have] compounded. To achieve our aspirations for AI, we need radically faster, cheaper and much lower power inference.”

Verizon, in a move indicative of the larger opportunity for operators to leverage existing distributed assets in service of new revenue from AI enablement, recently launched the AI Connect product suite. The company described the offering as “designed to enable businesses to deploy…AI workloads at scale.” Verizon highlighted McKinsey estimates that by 2030 60% to 70% of AI workloads will be “real-time inference…creating an urgent need for low-latency connectivity, compute and security at the edge beyond current demand.” Throughout its network, Verizon has fiber, compute, space, power and cooling that can support edge AI; Google Cloud and Meta are already using some of Verizon’s capacity, the company said.

Agentic AI at the edge

Qualcomm’s Durga Malladi discussed edge AI at CES 2025.

Looking further out, Qualcomm’s Durga Malladi, speaking during CES 2025, tied together agentic and edge AI. The idea is that on-device AI agents will access your apps on your behalf, connecting various dots in service of your request and delivering an outcome not tied to one particular application. In this paradigm, the user interface of a smartphone changes; as he put it, “AI is the new UI.”

He tracked computing from command line interfaces to graphical interfaces accessible with a mouse. “Today we live in an app-centric world…It’s a very tactile thing…The truth is that for the longest period of time, as humans, we’ve been learning the language of computers.” AI changes that; when the input mechanism is something natural like your voice, the UI can now transform using AI to become more custom and personal. “The front-end is dominated by an AI agent…that’s the transformation that we’re talking of from a UI perspective.”

He also discussed how a local AI agent will co-evolve with its user. “Over time there is a personal knowledge graph that evolves. It defines you as you, not as someone else.” Localized context, made possible by on-device AI, or edge AI more broadly, will improve agentic outcomes over time. “And that’s a space where I think, from the tech industry standpoint, we have a lot of work to do.”

Dell Technologies is also looking at this intersection of agentic and edge AI. In an interview, Pierluca Chiodelli, vice president of engineering technology, edge portfolio product management and customer operations, underscored this fundamental shift happening in AI: businesses are moving away from a cloud-first mindset and embracing a hybrid AI model that connects a continuum across devices, the edge and the cloud.

Image courtesy of 123.RF.

As it relates to agentic AI systems running in edge environments, Chiodelli used computer vision in manufacturing as an example. Today, quality control AI models run inference at the edge—detecting defects, deviations, or inefficiencies in a production line. But if something unexpected happens, such as a subtle shift in materials or lighting conditions, the model can fail. The process to retrain the model takes forever, Chiodelli explained. You have to manually collect data, send it to the cloud or a data center for retraining, then redeploy an updated model back to the edge. That’s a slow, inefficient process.

With agentic AI, instead of relying on centralized retraining cycles, AI agents at the edge could autonomously detect when a model is failing, collaborate with other agents, and correct the issue in real time. “Agentic AI, it actually allows you to have a group of agents that work together to correct things.”

For industries that rely on precision, efficiency, and real-time adaptability, such as manufacturing, healthcare, and energy, agentic AI could lead to huge gains in productivity and ROI. But, Chiodelli noted, the challenge lies in standardizing communication protocols between agents—without that, autonomous AI systems will remain fragmented. He predicted an inter-agent “standard communication kind of API will emerge at some point.” Today, “You can already do a lot if you are able to harness all this information and connect to the AI agents.”

“It’s clear [that] more and more data is being generated at the edge,” he said. “And it’s also clear that moving that data is the most costly thing you can do.” Edge AI, Chiodelli said, is “the next wave of AI, allowing us to scale AI across millions of devices without centralizing data…Instead of transferring raw data, AI models at the edge can process it, extract insights, and send back only what’s necessary. That reduces costs, improves response times, and ensures compliance with data privacy regulations.”
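The idea of sending back only what’s necessary can be sketched in a few lines of Python. In the toy example below, a batch of camera frames is scored locally and only the flagged events leave the device as a small JSON payload. The `Frame` class, the `defect_score` field (a stand-in for the output of a local vision model), the threshold and the frame sizes are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    frame_id: int
    pixels: bytes        # raw image data that would otherwise be uploaded
    defect_score: float  # stand-in for a local model's output, in [0, 1]


def summarize_at_edge(frames: List[Frame], threshold: float = 0.8) -> bytes:
    """Keep only frames the local model flags, and send metadata, not pixels."""
    events = [
        {"frame_id": f.frame_id, "score": round(f.defect_score, 3)}
        for f in frames
        if f.defect_score >= threshold
    ]
    return json.dumps(events).encode("utf-8")


# Hypothetical batch: 100 frames of 10 KB each, with two likely defects.
frames = [
    Frame(frame_id=i, pixels=bytes(10_000),
          defect_score=0.95 if i % 50 == 0 else 0.10)
    for i in range(100)
]

raw_bytes = sum(len(f.pixels) for f in frames)
payload = summarize_at_edge(frames)

print(f"Raw upload: {raw_bytes} bytes")
print(f"Edge summary: {len(payload)} bytes")
```

The raw pixels never leave the device in this sketch, which is also why the approach helps with the privacy and compliance points Chiodelli raises.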

A true AI ecosystem, he argued, hearkening back to the idea of an AI infrastructure continuum that reaches from the cloud out to the edge, requires:

- Seamless integration between devices, edge AI infrastructure, datacenters and the cloud.

- Interoperability between AI agents, models, and enterprise applications.

- AI infrastructure that minimizes costs, optimizes performance and scales efficiently.

Chiodelli summarized in a Forbes article: “We should expect the adoption of hybrid edge-cloud inferencing to continue its upward trajectory, driven by the need for efficient, scalable data processing and data mobility across cloud, edge, and data centers. The flexibility, scalability, and insights generated can reduce costs, enhance operational efficiency, and improve responsiveness. IT and OT teams will need to navigate the challenges of seamless interaction between cloud, edge, and core environments, striking a balance between factors such as latency, application and data management, and security.”

Tearing down the memory wall

Image courtesy of Micron.

Further to this idea of test-time inference scaling converging with edge AI where real people really experience AI, an important focus area is around memory and storage. To put it reductively, modern leading AI chips can process data faster than memory systems can deliver that data, limiting inference performance. Chris Moore, vice president of marketing for Micron’s mobile business unit, called it the “memory wall.” But to start more generally, Moore was bullish about the idea of AI as the new UI and on personal AI agents delivering useful benefits to our personal devices; but he was also practical and realistic about the technical challenges that need to be addressed.

“Two years ago…everybody would say, ‘Why do we need AI at the edge?’” he recalled in an interview. “I’m really happy that that’s not even a question anymore. AI will be, in the future, how you are interfacing with your phone at a very natural level and, moreover, it’s going to be proactive.”

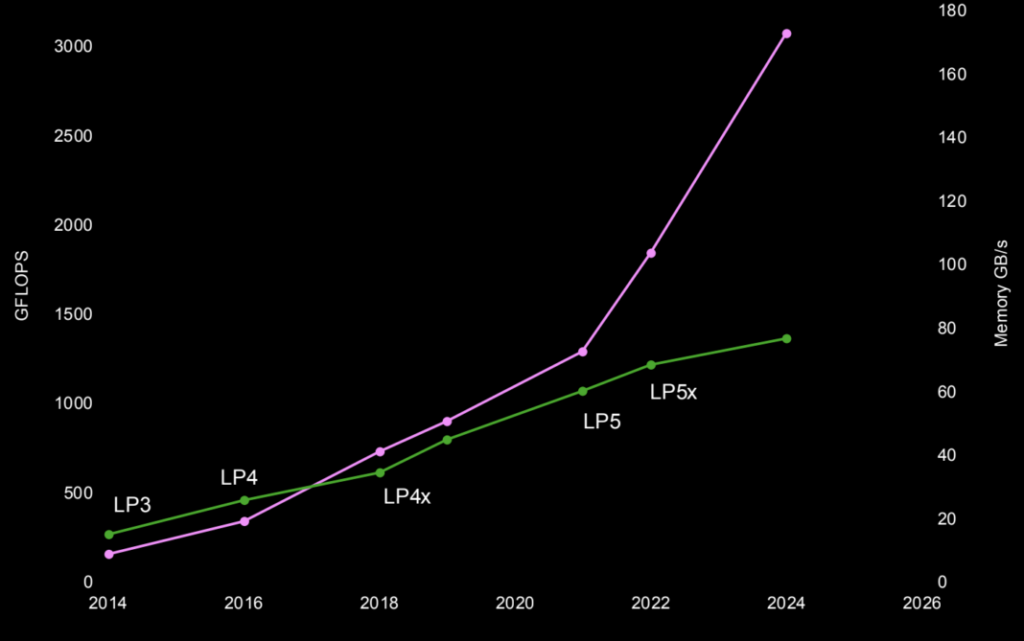

He pulled up a chart comparing Giga Floating Point Operations per second (GFLOPS), a metric used to evaluate GPU performance, and memory speed in GB/second, specifically Low-Power Double Data Rate (LPDDR) Dynamic Random Access Memory (DRAM). The trend was that GPU performance and memory speed followed relatively similar trajectories between 2014 and around 2021. Then with LP5 and LP5x, which respectively deliver 6400 Mbps and 8533 Mbps data rates in power envelopes and at price points appropriate for flagship smartphones with AI features, we hit the memory wall.

“There is a huge level of innovation required in memory and storage in mobile,” Moore said. “If we could get a Terabyte per second, the industry would just eat it up.” But, he rightly pointed out that the monetization strategies for cloud AI and edge AI are very different. “We’ve got to kind of keep these phones at the right price. We have a really great innovation problem that we’re going after.”
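To make the gap between today’s mobile memory and that terabyte-per-second wish concrete, the Python sketch below converts the per-pin data rates cited above into peak bandwidth and then into a rough ceiling on token generation. Two inputs are assumptions and not from Micron: a 64-bit memory bus, and a hypothetical on-device model whose weights occupy 1.5 GB (for example, about 3 billion parameters at 4 bits each). The tokens-per-second figure uses the common simplification that every generated token requires reading all model weights once, so it is an upper bound and not a benchmark.

```python
def peak_bandwidth_gb_s(data_rate_mbps_per_pin: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s from per-pin data rate and bus width."""
    return data_rate_mbps_per_pin * bus_width_bits / 8 / 1000


def max_tokens_per_second(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Memory-bound ceiling: each token reads all weights once (simplification)."""
    return bandwidth_gb_s / model_size_gb


BUS_WIDTH_BITS = 64   # assumption: typical flagship smartphone memory bus
MODEL_SIZE_GB = 1.5   # assumption: ~3B parameters at 4 bits per weight

lp5_bw = peak_bandwidth_gb_s(6400, BUS_WIDTH_BITS)
lp5x_bw = peak_bandwidth_gb_s(8533, BUS_WIDTH_BITS)
wish_bw = 1000.0      # the 1 TB/s Moore says the industry would "eat up"

for label, bw in [("LP5", lp5_bw), ("LP5x", lp5x_bw), ("1 TB/s", wish_bw)]:
    ceiling = max_tokens_per_second(bw, MODEL_SIZE_GB)
    print(f"{label}: {bw:.1f} GB/s, at most {ceiling:.0f} tokens/s")
```

Under these assumptions LP5 and LP5x land at roughly 51 GB/s and 68 GB/s, more than an order of magnitude short of 1 TB/s.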

To that point, and in keeping with the historical paradigm that what’s old is often new again, Moore pointed to Processing-in-Memory (PIM). The idea here is to tear down the memory wall by enabling parallel data processing within memory. That is easy to say, but it requires fundamental adjustments to memory chips at the architectural level and, for a complete edge device, a range of hardware and software adaptations. In theory, those changes would allow test-time inference of smaller AI models to occur in memory, freeing up GPU (or NPU) cycles for other AI workloads and reducing the power associated with moving data from memory to a processor.

Comparing the dynamics for cloud-based AI workload versus edge AI workload processing, Moore pointed out that it’s not just a matter of throwing money at the problem, rather (and again) it’s a matter of fundamental innovation. “There’s a lot of research happening in the memory space,” he said. “We think we have the right solution for future edge devices by using PIM.”

Incorporating test-time inference scaling strategies within edge AI frameworks allows for dynamic resource allocation during the inference process. This means that AI models can adjust their computational requirements based on the complexity of the task at hand, leading to more efficient and effective performance. The AI infrastructure landscape is entering a new era of distributed intelligence, where test-time inference scaling, edge AI, and agentic AI will define who leads and who lags. Cloud-centric AI execution is no longer the default; embracing hybrid AI architectures, optimizing inference efficiency, and scaling AI deployment across devices, edge, and cloud will shape the future of AI infrastructure.
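One way to picture dynamic resource allocation at inference time is a budget function that maps an estimated task complexity to a number of sampling passes, then takes a majority vote across them (a self-consistency style strategy). This is a minimal sketch and not any vendor's implementation: `samples_for`, `answer_with_budget`, the complexity score, and the 1-to-16 budget range are all hypothetical, and `model` stands in for any callable that returns an answer string.

```python
import random
from collections import Counter
from typing import Callable, Tuple


def samples_for(complexity: float, min_samples: int = 1,
                max_samples: int = 16) -> int:
    """Map a complexity score in [0, 1] to a number of inference passes."""
    clamped = min(max(complexity, 0.0), 1.0)
    return min_samples + int(clamped * (max_samples - min_samples))


def answer_with_budget(model: Callable[[str], str], prompt: str,
                       complexity: float) -> Tuple[str, int]:
    """Sample the model n times and return (majority answer, n)."""
    n = samples_for(complexity)
    votes = Counter(model(prompt) for _ in range(n))
    return votes.most_common(1)[0][0], n


if __name__ == "__main__":
    rng = random.Random(0)

    def toy_model(prompt: str) -> str:
        # Hypothetical noisy model: right about 70% of the time.
        return "4" if rng.random() < 0.7 else "5"

    # Easy prompt: a single pass. Hard prompt: many passes plus a vote.
    print(answer_with_budget(toy_model, "2 + 2 = ?", complexity=0.0))
    print(answer_with_budget(toy_model, "2 + 2 = ?", complexity=1.0))
```

The design choice is that easy requests spend one pass while hard ones spend more, so an edge device only pays the extra compute and power when the task appears to need it.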

Final thought: AI is no longer confined to the cloud—it is evolving into a distributed intelligence spanning devices, edge nodes, and data centers. The convergence of test-time inference scaling, edge AI, and agentic AI will determine who leads and who lags in this new paradigm. But the industry’s next challenge isn’t just scaling AI—it’s optimizing it.

5 | DeepSeek foregrounds algorithm innovation and compute efficiency

Key takeaways:

- Efficiency does not mean lower demand—Jevons Paradox applies to AI. DeepSeek’s lower-cost training approach signals an era of algorithmic efficiency, but rather than reducing demand for compute, these breakthroughs are driving even greater infrastructure investment.

- The AI supply chain is evolving—open models and China’s rise are reshaping competition. Open-weight models are rapidly commoditizing the foundation-model layer, leading to lower costs and increased accessibility.

- History repeats itself—AI infrastructure mirrors past industrial revolutions. Just as past technological breakthroughs made electricity and computing mass-market products, AI infrastructure investment follows a similar trajectory. AI’s growth is not just about infrastructure investment, but about economic scalability.

Amid soaring AI infrastructure costs and the relentless pursuit of larger, more compute-intensive models, Chinese AI startup DeepSeek has taken a radically different approach. In mid-January, it launched its open-source R1 LLM, reportedly trained for just $6 million, a fraction of what Western firms spend. Financial markets responded dramatically to the news, with shares in ASML, Microsoft, NVIDIA and other AI specialists, and tech more broadly, all taking a hit.

Groq CEO Jonathan Ross, sitting on a panel at the World Economic Forum annual meeting in Davos, Switzerland, was asked how consequential DeepSeek’s announcement was. Ross said it was incredibly consequential but reminded the audience that R1 was trained on around 14 trillion tokens and used around 2,000 GPUs for its training run, both similar to training Meta’s open-source, 70-billion-parameter Llama LLM. He also said DeepSeek is pretty good at marketing itself and “making it seem like they’ve done something amazing.” Ross also said DeepSeek is a major OpenAI customer in terms of buying quality datasets rather than going through the arduous, and expensive, process of scraping the entirety of the internet then separating useful from useless data.

The bigger point, Ross said, is that “open models will win. Where I think everyone is getting confused though is when you have a model, you can amortize the cost of developing that, then distribute it.” But models don’t stay new for long, meaning there’s a durable appetite for AI infrastructure and compute cycles. And this gets into what he sees as a race between the U.S. and China. “We cannot do closed models anymore and be competitive…Without the compute, it doesn’t matter how good the model is. What countries are going to be tussling over is how much compute they have access to.”

Back to that $6 million. The tech stock sell-off feels reactionary given DeepSeek hasn’t exactly provided an itemized receipt of its costs, and those costs feel incredibly misaligned with everything we know about LLM training and the underlying AI infrastructure needed to support it. Based on information DeepSeek itself has provided, it used a compute cluster built with 2,048 NVIDIA H800 GPUs. While it’s never clear exactly how much vendors charge for things like this, if you assume a sort of mid-point price of $12,500 per GPU, the hardware alone comes to roughly $25.6 million, well past $6 million. That price apparently doesn’t include GPUs or any of the other necessary infrastructure, whether rented or owned, used in training.
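The arithmetic behind that comparison is simple enough to check directly. The sketch below uses only the GPU count and the assumed mid-point price from the paragraph above; the per-GPU price is the article's own assumption, not a vendor quote, and the calculation ignores networking, power, facilities and staff.

```python
# Figures taken from the text; the per-GPU price is an assumed mid-point.
GPU_COUNT = 2_048
ASSUMED_PRICE_PER_GPU_USD = 12_500
REPORTED_TRAINING_COST_USD = 6_000_000

# Purchase cost of the GPUs alone under the assumed price.
hardware_cost_usd = GPU_COUNT * ASSUMED_PRICE_PER_GPU_USD

# How many times larger the GPU bill is than the reported training cost.
multiple_of_reported = hardware_cost_usd / REPORTED_TRAINING_COST_USD

if __name__ == "__main__":
    print(f"GPU hardware alone: ${hardware_cost_usd:,}")
    print(f"Multiple of reported cost: {multiple_of_reported:.1f}x")
```

At these inputs the GPUs alone cost more than four times the reported figure, which supports reading the $6 million as a training-run cost rather than a total infrastructure cost.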

Image courtesy of DeepSeek.

Anyway, the real cost of training and investors’ huge reactions to a kind of arbitrary number aside, DeepSeek does appear to have built a performant tool in a very efficient way. And this is a major focus of AI industry discourse—post-training optimizations and reinforcement learning, test-time training and lowering model size are all teed up to help chip away at the astronomical costs associated with propping up the established laws of AI scaling.

The folks at IDC had a take on this which, as published, was about the Stargate Project announcement that, again, encapsulates the capital outlay needed to train ever-larger LLMs. “There are indications that the AI industry will soon be pivoting away from training massive [LLMs] for generalist use cases. Instead, smaller models that are much more fine-tuned and customized for highly specific use cases will be taking over. These small language models…do not require such huge infrastructure environments. Sparse models, narrow models, low precision models–much research is currently being done to dramatically reduce the infrastructure needs of AI model development while retaining their accuracy rates.”

Dan Ives, managing director and senior equity research analyst with Wedbush Securities, wrote on X, “DeepSeek is a competitive LLM model for consumer use cases…Launching broader AI infrastructure [is] a whole other ballgame and nothing with DeepSeek makes us believe anything different. It’s about [artificial general intelligence] for big tech and DeepSeek’s noise.” As for the price drop in firms like NVIDIA, Ives characterized it as a rare buying opportunity.

From tech sell-off to Jevons Paradox

DeepSeek’s efficiency breakthroughs—and the AI industry’s broader shift toward smaller, fine-tuned models—echo a well-documented historical trend: Jevons Paradox. Just as steam engine efficiency led to greater coal consumption, AI model efficiency is driving increased demand for compute, not less. This occurs because increased efficiency lowers costs, which in turn drives greater demand for the resource. William Stanley Jevons put this idea out in the world in an 1865 book that looked at the relationship between coal consumption and efficiency of steam engine technology. Additional modern examples are energy efficiency and electricity use, fuel efficiency and driving, and AI.
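The mechanism can be sketched with a constant-elasticity demand curve, which is a common textbook simplification and an assumption here rather than something the sources above specify. If an efficiency gain of factor f cuts the cost of a unit of AI work by f, and demand for that work has price elasticity e, then work demanded grows by f^e while compute per unit of work falls by f, so total compute scales by f^(e-1). The elasticity values below are illustrative, not measured.

```python
def compute_after_efficiency_gain(efficiency_gain: float,
                                  elasticity: float,
                                  baseline_compute: float = 1.0) -> float:
    """Total compute consumed after an efficiency gain,
    assuming constant-elasticity demand for AI work."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    # Cheaper work means more work is requested.
    work_demanded = efficiency_gain ** elasticity
    # Each unit of work now needs less compute.
    compute_per_unit = 1.0 / efficiency_gain
    return baseline_compute * work_demanded * compute_per_unit


if __name__ == "__main__":
    # Hypothetical 10x efficiency gain under three demand regimes.
    for e in (0.5, 1.0, 1.5):
        total = compute_after_efficiency_gain(10.0, e)
        print(f"elasticity {e}: total compute x{total:.2f}")
```

Only when elasticity exceeds 1 does total compute rise after an efficiency gain. The Jevons argument for AI amounts to a bet that demand for intelligence is at least that elastic.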

Mustafa Suleyman, co-founder of DeepMind (later acquired by Google) and now CEO of Microsoft AI, wrote on X on Jan. 27: “We’re learning the same lesson that the history of technology has taught us over and over. Everything of value gets cheaper and easier to use, so it spreads far and wide. It’s one thing to say this, and another to see it unfold at warp speed and epic scale, week after week.”

AI luminary Andrew Ng, fresh off an interesting AGI panel at the World Economic Forum’s annual meeting in Davos (more on that later), posited on LinkedIn that the DeepSeek of it all “crystallized, for many people, a few important trends that have been happening in plain sight: (i) China is catching up to the U.S. in generative AI, with implications for the AI supply chain. (ii) Open weight models are commoditizing the foundation-model layer, which creates opportunities for application builders. (iii) Scaling up isn’t the only path to AI progress. Despite the massive focus on and hype around processing power, algorithmic innovations are rapidly pushing down training costs.”

Does open (continue to) beat closed?

More on open weight, as opposed to closed or proprietary, models. Ng commented that, “A number of US companies have pushed for regulation to stifle open source by hyping up hypothetical AI dangers such as human extinction.” This very, very much came up on that WEF panel. “It is now clear that open source/open weight models are a key part of the AI supply chain: many companies will use them.” If the US doesn’t come around, “China will come to dominate this part of the supply chain and many businesses will end up using models that reflect China’s values much more than America’s.”

Ng wrote that OpenAI’s o1 costs $60 per million output tokens, whereas DeepSeek’s R1 costs $2.19 per million output tokens. “Open weight models are commoditizing the foundation-model layer…LLM token prices have been falling rapidly, and open weights have contributed to this trend and given developers more choice.”
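To put the gap in application-builder terms, the two list prices Ng cites can be applied to a workload. The prices come from the paragraph above; the 50 million output tokens per month is a hypothetical workload chosen for illustration, and input-token charges are ignored.

```python
# Output-token prices as quoted by Ng, in USD per million tokens.
PRICE_PER_MILLION_USD = {
    "OpenAI o1": 60.00,
    "DeepSeek R1": 2.19,
}


def output_cost_usd(output_tokens: int, price_per_million_usd: float) -> float:
    """Cost of generating `output_tokens` at a per-million-token price."""
    return output_tokens / 1_000_000 * price_per_million_usd


if __name__ == "__main__":
    monthly_tokens = 50_000_000  # hypothetical workload
    for model, price in PRICE_PER_MILLION_USD.items():
        cost = output_cost_usd(monthly_tokens, price)
        print(f"{model}: ${cost:,.2f} per month")
    ratio = PRICE_PER_MILLION_USD["OpenAI o1"] / PRICE_PER_MILLION_USD["DeepSeek R1"]
    print(f"Price ratio: about {ratio:.0f}x")
```

At the quoted prices the ratio is roughly 27 to 1, regardless of the workload size chosen.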

Pat Gelsinger, the former CEO of Intel and VMware, posted to LinkedIn on Jan. 27. The DeepSeek discussion “misses three important lessons that we learned in the last five decades of computing,” he wrote.

- “Computing obeys the gas law…It fills the available space as defined by available resources (capital, power, thermal budgets, [etc…])…Making compute available at radically lower price points will drive an explosive expansion, not contraction, of the market.”

- “Engineering is about constraints.”

- “Open wins…we really want, nay need, AI research to increase its openness…AI is much too important for our future to allow a closed ecosystem to ever emerge as the one and only in this space.”

In keeping with our attempt at historical perspective (what’s old is new again), we’ll end with the biography Insull, a birth-to-death exploration of Thomas Edison’s right-hand man, Samuel Insull, by historian Forrest McDonald. Summarizing Insull’s approach to making electricity a mass-market product, McDonald described the largely forgotten titan’s philosophy: “Sell products as cheaply as possible—not because price competition dictated it; far from it. Rather, it stemmed from Insull’s radical belief, which Edison usually shared, that lower prices would bring greater volume, which would lower unit costs of production and thus yield greater profits.”

Final thought: The AI industry is learning an old lesson: greater efficiency doesn’t reduce demand—it accelerates it. Whether AI’s future is driven by open innovation or controlled ecosystems remains an open question, but one thing is certain: AI’s expansion is following the same economic laws that have shaped every major technological revolution.

6 | The push to AGI

Image courtesy of World Economic Forum.

Key takeaways:

- AGI remains an elusive and divisive concept—rather than a singular breakthrough, AI may continue to advance along a spectrum of capabilities, incrementally redefining what intelligence means.

- The debate over AGI’s risks is unresolved—some view AI as a controllable tool, while others warn that emergent AI behaviors suggest a loss of control is possible. The fundamental question remains: can AI be effectively constrained as it becomes more capable?

- AI development is now a geopolitical arms race—the U.S. and China are locked in a competition not just for AI innovation but for compute power dominance. While some advocate for international accords, others see the race as too fast-moving and high-stakes to pause.

In January at the World Economic Forum in Davos, a panel of leading AI researchers and industry figures tackled the question of AGI: what it is, when it might emerge, and whether it should be pursued at all. The discussion underscored deep divisions within the AI community—not just over the timeline for AGI, but over whether its development poses an existential risk to humanity.

On one side, Andrew Ng, co-founder of Google Brain and now executive chairman of LandingAI, dismissed concerns that AGI will spiral out of control, arguing instead that AI should be seen as a tool—one that, as it becomes cheaper and more widely available, will be an immense force for good. Yoshua Bengio, Turing Award-winning professor at the University of Montreal, strongly disagreed, warning that AI is already displaying emergent behaviors that suggest it could develop its own agency, making its control far from guaranteed.

Adding another layer to the discussion, Ross, the CEO of Groq, focused on the escalating AI arms race between the U.S. and China. While some on the panel called for slowing AI’s progress to allow time for better safety measures, Ross made it clear: the race is on, and it cannot be stopped. Recall comments from Altman of OpenAI that the company views AGI as something that can benefit all of humanity while also serving as a strategic (national) advantage to the country that achieves it.

Before debating AGI’s risks, the panel first grappled with defining it (in a pre-panel conversation in the greenroom apparently)—without success. Unlike today’s AI models, which excel at specific tasks, AGI is often described as a system that can reason, learn, and act across a wide range of human-like cognitive functions. But when asked if AGI is even a meaningful concept, Thomas Wolf, co-founder of Hugging Face, pushed back saying the panel felt a “bit like I’m at a Harry Potter conference but I’m not allowed to say magic exists…I don’t think there will be AGI.” Instead, he described AI’s trajectory as a growing spectrum of models with varying levels of intelligence, rather than a singular, definitive leap to AGI.

Ross echoed that sentiment, pointing out that for decades, researchers have moved the goalposts for what qualifies as intelligence. When humans invented calculators, he said, people thought intelligence was around the corner. The same happened when AI beat human champions at Go. The reality, he suggested, is that AI continues to improve incrementally, rather than in sudden leaps toward human-like cognition.

While some panelists questioned whether AGI is even a useful term, Ng and Bengio debated a more pressing question: if AGI does emerge, will it be dangerous? Ng sees AI as simply another tool—one that, like any technology, can be used for good or ill but remains under human control. “Every year, our ability to control AI is improving,” he said. “I think the safest way to make sure AI doesn’t do bad things” is the same way we build airplanes. “Sometimes something bad happens, and we fix it.”