The Olympic Games are set to tax the capabilities of service providers, though there are steps that can be taken ahead of such large events

“We’re going on network freeze,” began the conversation with a major carrier. “We can’t proof-of-concept, trial or touch our network for anything except for essential maintenance,” the carrier continued. This was the internal discussion in direct response to the UEFA Euro 2016 championship recently held in France. The carrier responded: “Everything is delayed – everything!”

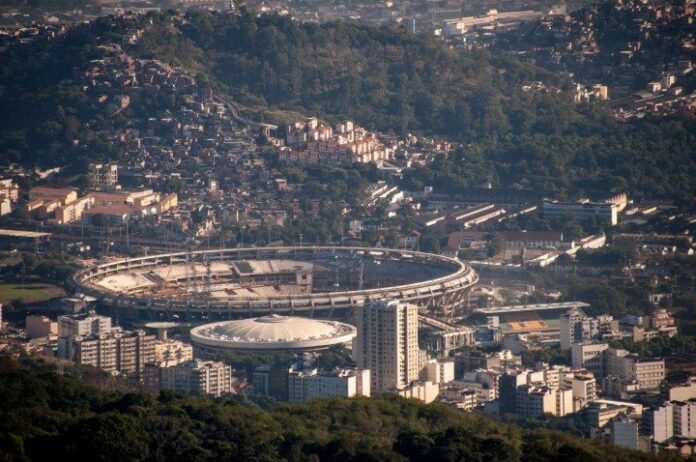

With the Olympics kicking off, there is a high risk of similar challenges, as local carriers fear losing network control and suffering performance degradation during major events. In Rio de Janeiro, what impacts operations is not so much the many thousands of participants or the hundreds of thousands of extra visitors all using mobile services, but rather the operator’s inability to try out new technologies or to respond should something go wrong, such as an outage or major service disruption.

At the crux of the issue is that many operators are moving from an average revenue per user model to an average profit per user model. Driven by the shift to software technologies, such as software-defined networking and network function virtualization, these transformation projects aim to squeeze every last cost out of the network. However, the aforementioned inflexibility – and the worry that deploying a new network element or trying a new service could bring the roof down – adds an extra layer to this challenge. A clear example is the lack of network visibility built into new virtualization technologies such as NFV, where there’s very little in the way of monitoring or packet-level insight for emergency fault-finding when errors occur.

To look more closely at what this means in the real world, consider a specific scenario: a carrier deploys a new virtualized network function (VNF) at a quiet time, when there is no immediate stress on the network, and runs it through the acceptance test process. It then begins to tie the VNF into its operating support and business support systems and leaves the service assurance portion for later. Then something happens on the network, whether the VNF experiences load, behavioral or even interoperability issues with another device, and big problems ensue. The next stages play out as follows:

● The lack of prior knowledge of the VNF or network element in this situation leads the troubleshooting team to investigate as deeply as it can, looking for the needle in the haystack.

● To isolate the issue, boundaries are drawn around groups of devices, some of which are taken out of service to learn more about the problem.

● When the VNF is identified as the contributing culprit, deep-dive investigation begins. However, things come to a halt because there is no deep packet visibility into the traffic inside or between the virtualized network elements.

In short, there is no way to debug the issue, and the carrier is clearly not ready for an event.

Winning with visibility

The whole idea of a network going into a freeze for a large event, although prudent, may be an over-reaction. A better way to overcome these issues is to deploy a visibility fabric, which helps the operator not only to get a grip on the network when things do go astray, but also to run quicker, more controlled proofs of concept and trials. Benefits of implementing a visibility fabric include:

● Understanding how the network changes when a new service or network element is added.

● Gaining visibility into virtualized environments, such as SDN or NFV.

● Speeding up trials, as the operator can now see and directly understand how the new piece of equipment passes or fails its acceptance test (no more finger-pointing).

● Locating where interoperability issues may be creeping in, both within service-chained functions and in the traffic passing between them.

Networks that have pervasive visibility built in and a method to quickly switch out new network elements no longer need to disrupt operations. There’s a positive and very real-world argument that perhaps you want to see how these new technologies actually perform when put to an extreme test. As long as there’s a way to quickly remediate and fail-safe back to known good network elements – where the network is well instrumented – the carrier can see the differences between the old and the new and make decisions in real time.
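To make the "compare old and new, then fail back" idea concrete, here is a minimal sketch in Python. It is an illustration only: the `Metrics` fields, the `should_roll_back` function and both thresholds are hypothetical, and a real deployment would take its measurements and limits from whatever monitoring tools the carrier already runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    """Measurements for one network element (hypothetical fields)."""
    packet_loss_pct: float
    p95_latency_ms: float


def should_roll_back(baseline: Metrics, candidate: Metrics,
                     max_loss_delta_pct: float = 0.5,
                     max_latency_ratio: float = 1.2) -> bool:
    """Return True when the new element is measurably worse than the known good one.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    loss_regressed = (
        candidate.packet_loss_pct - baseline.packet_loss_pct > max_loss_delta_pct
    )
    latency_regressed = (
        candidate.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio
    )
    return loss_regressed or latency_regressed


# Example: the new VNF loses noticeably more packets than the element it replaced.
known_good = Metrics(packet_loss_pct=0.1, p95_latency_ms=20.0)
new_vnf = Metrics(packet_loss_pct=0.9, p95_latency_ms=21.0)

if should_roll_back(known_good, new_vnf):
    print("Fail back to the known good element")
else:
    print("Keep the new element in service")
```

The point of the sketch is that the decision depends entirely on having comparable measurements for both elements, which is what a well-instrumented network provides.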

The tap-as-a-service project

Another emerging approach to solving packet-level visibility issues is the tap-as-a-service (TaaS) project, which is designed to help tenants or cloud administrators debug complex virtual networks and gain visibility into virtual machines. TaaS uses a vendor-independent method for accessing data inside the virtualized server environment through remote port mirroring. In addition to honoring tenant boundaries, its mirror sessions can span multiple compute and network nodes, so traffic is accessible in the traditional manner, as if a Test Access Point (TAP) or Switched Port Analyzer (SPAN)/mirror port were sourcing the traffic from a legacy or physical network.

By supplying data to a variety of network analytics and security applications, TaaS gives visibility back to operators, who now have the opportunity to derisk the rollout of new technology through the use of existing debugging, analytics and performance tools.
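The idea of a mirror session that honors tenant boundaries can be sketched as a toy model in Python. This is not the TaaS API: `Port`, `MirrorSession` and `create_mirror_session` are hypothetical names invented for illustration, and the only rule modeled is that a source port and a monitoring port must belong to the same tenant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    port_id: str
    tenant: str


@dataclass(frozen=True)
class MirrorSession:
    source: Port       # port whose traffic is copied
    destination: Port  # port where the monitoring tool listens
    direction: str     # "in", "out" or "both"


def create_mirror_session(source: Port, destination: Port,
                          direction: str = "both") -> MirrorSession:
    """Create a mirror session, refusing any that would cross tenants."""
    if direction not in {"in", "out", "both"}:
        raise ValueError(f"unsupported direction: {direction!r}")
    if source.tenant != destination.tenant:
        raise PermissionError("mirror session would cross a tenant boundary")
    return MirrorSession(source, destination, direction)


# Example: a tenant mirrors its own VM port to its own analyzer port.
vm_port = Port("vm-port-1", tenant="tenant-a")
analyzer_port = Port("analyzer-port-1", tenant="tenant-a")
session = create_mirror_session(vm_port, analyzer_port)
print(session.direction)

# A port owned by another tenant is rejected.
foreign_port = Port("vm-port-9", tenant="tenant-b")
try:
    create_mirror_session(foreign_port, analyzer_port)
except PermissionError as err:
    print(f"Rejected: {err}")
```

In this model the analyzer only ever sees copies of traffic from ports its own tenant controls, which is the property that lets existing analysis tools be reused safely in a shared environment.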

Rising outage costs

The benefits of virtualization capabilities – like those of NFV – are widely accepted and sought after. By cutting capital expense through reduced hardware spend and network function dependency, and by scaling services quickly to address changing demands, operators and service providers are becoming more agile and resilient, while also enhancing customer satisfaction.

Despite NFV cost savings, one area is often neglected: outage costs. Whether hard or reputation-based, outage costs are rising due to the extra time spent reviving a failed system. A one-minute outage, for example, could potentially become a five-minute outage without direct packet-level visibility.

The reality is that the carrier mentioned earlier can never get back the six weeks it lost to the UEFA Euro 2016 championship, and the same could happen around the Olympic Games. That time is gone. The opportunity to change to a new technology, or to learn about or trial a new offering, has been significantly delayed. Although it’s easy for an outside commentator to lay out these issues, the challenge and lost opportunities are very real. But by adopting a strategy that allows for greater network visibility, the answers become more transparent.

Andy Huckridge is the director of service provider solutions at Gigamon with 20 years of Silicon Valley telecommunications industry experience with cutting-edge technologies, including the global interoperability testing of many new telecom technologies. Prior to Gigamon, as a seasoned telecom industry executive, he served as an independent consultant to operators and carriers as well as to network and test equipment manufacturers. Huckridge holds a Master of Science and Bachelor of Engineering in Advanced Telecom and Spacecraft Engineering. He also holds a patent in VoIP, co-authored an IETF RFC and was an inaugural member of the “Top 100 Voices of IP Communications” list.

Editor’s Note: In an attempt to broaden our interaction with our readers we have created this Reader Forum for those with something meaningful to say to the wireless industry. We want to keep this as open as possible, but we maintain some editorial control to keep it free of commercials or attacks. Please send along submissions for this section to our editors at: dmeyer@rcrwireless.com.