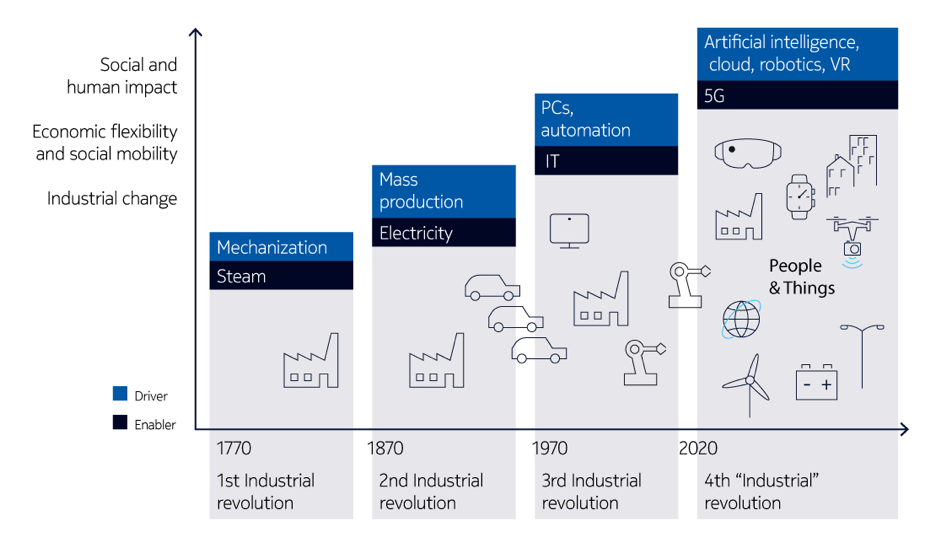

We are at the beginning of a revolution that is changing how we live, work and relate to each other. According to Professor Klaus Schwab, founder and executive chairman of the World Economic Forum, we are entering the 4th Industrial Revolution (4IR).

Connecting everyone and everything requires a transformation to an agile, cognitive, sensing, self-learning and programmable network that pushes the boundaries of technology to create the desired immersive, life-changing experiences.

Moving to the 4th Industrial Revolution

With this in mind, there has been a lot of industry focus on cloud technology. Communications service providers and enterprises are embracing virtualization and software-defined networking, integrating cloud and its associated technologies into their networks. The industry is recognizing that supporting the expansion and delivery of 4IR services will require moving beyond virtualization of the core to the adoption of cloud-native architectures and technologies.

However, as we have begun implementing these technologies in our networks, lessons about deploying and operating the cloud model have started to emerge, particularly around energy consumption and data center space.

The case for integrating cloud and webscale technologies is compelling. The variability and unpredictability that new broadband and IoT/MTC services and applications are introducing are driving us to adopt more flexible, agile and, above all, scalable architectures.

Much of the variability in traffic volume is coming from cloud-based applications such as video streaming. IoT/MTC services introduce their own special requirements because their utilization of the control and user planes can differ so widely. They will also bring billions of new devices onto the network in the coming decades.

Some IoT/MTC traffic will be low-bandwidth sensor data, but a significant subset will involve high-bandwidth video streaming. There are also applications, mostly associated with automation such as driverless cars, whose very stringent latency requirements will be challenging to meet with highly centralized cloud architectures.

Preparing the mobile network

In the past, networks such as the telephone, mobile, and data networks have been built for more predictable services and applications. The telephone and mobile networks have had to deal with the unpredictability of call length and volume, but the characteristics of the network services have nonetheless been predictable.

Moving forward, our networks will need to be different from these previous networks insofar as they will be designed to handle the full range of applications, from billions of IoT sensors to real-time HD video to industrial automation. This next-generation architecture will converge networks and access technologies, including fixed, mobile, and purpose-built networks for applications such as traffic signaling, autonomous vehicles, and smart electrical grids.

Take 5G for example, which is often misunderstood as yet one more iteration in the evolution of the mobile network. While it will need to build upon its mobile network roots, it will be much more inclusive of fixed access technologies. In comparison to historical fixed networks, the mobile network has some critical aspects, such as granular device and subscriber management, session control and policy management that were not as applicable in a fixed network. But some of the applications on the fixed network side will impose their own service characteristics and requirements that were never an issue for the mobile network — specifically, low latency IoT and very high bandwidth services for enterprises and consumers.

If we look at high bandwidth residential and enterprise applications under 5G for example, they will be run over dedicated network slices that will provide them with seamless security and access globally. Whatever resources these dedicated network slices require will, in theory, be hosted in the appropriate cloud data center. But here we may encounter a challenge — how do we efficiently and flexibly manage the ongoing data growth, not just the video/multimedia driven consumer mobile internet, but growth driven by new IoT/MTC, enterprise, and residential services and applications?

Is it impossible to spin up enough virtual cloud resources to handle hundreds of gigabits or even terabits in the future? Not impossible, but potentially highly inefficient from the point of view of energy and data center footprint.

Virtualization, diversification and affordability

In most discussions of virtualization and the cloud, there has been an assumption that as these infrastructures scale, the cost of compute will remain manageable. But in the real world, this is not the case. In practice, the costs of energy and of building bigger data centers are posing challenges for CSPs and enterprises. Data center architects have already begun diversifying beyond general-purpose x86 processors to combat some of these challenges. In big data installations, it is common practice to use graphics accelerators for math-intensive operations. And, on the low end, architects have begun to introduce Atom processors into some of their designs to lower power consumption.

Just as data centers are already diversifying on the hardware level because of energy and space requirements, further hardware diversification may be required to meet the demanding service requirements coming from the high bandwidth consumer, enterprise and residential services and applications. Specifically, purpose-built networking silicon may have a role to play in this future cloud network.

Certainly, the control plane is compute heavy and will likely always be suited to standard general-purpose x86 processors, but some user plane functions may be better supported by networking processors. A small-footprint element could handle hundreds of gigabits of forwarding, trending upwards to a terabit, which would result in significant energy and space savings.

Shifting our way of thinking

For low latency applications in the field, current thinking is that certain network functions of the mobile core will be placed locally. Even further latency gains will be possible by utilizing networking processors in these distributed applications. Much the same argument can be made for high bandwidth, wireless local access, where the last 100 meters may see multiple gigabits of traffic and networking processors may well be better suited to the load.

The beauty of a pure cloud model is that the infrastructure is ultimately flexible and network resource utilization is maximized. However, because of real-world lessons and challenges around cloud performance, efficiency and operations, such as power and space constraints, we may have to find a middle ground between purpose-built networking infrastructure and the IT-oriented compute environments of our data centers.

We see that large data centers are already moving in this direction. There are already several types of functions for which specialized processors are more appropriate, and the utility of those functions means it is worth investing in that kind of specialization. We would argue that this will continue to be true for our cloud-architected, converged networks moving forward in the Fourth Industrial Revolution.

About the author

With over 20 years of experience in the telecommunications industry, Marketing Director Nick Cadwgan has held senior architectural, product marketing & management, and business strategy roles focusing on Broadband Access, Carrier Ethernet, Carrier/IP Routing, Optical Transport and Mobile Networks with Motorola, Nortel Networks, Newbridge Networks and other privately funded companies. Nick brings a proven combination of marketing, technology and business management expertise to his current role at Nokia.