Architectural changes made in 5G to optimize and scale networks with cloud radio access networks (C-RAN) have created stricter synchronization and latency requirements, particularly for fronthaul networks that provide connectivity between radios and centralized baseband controllers.

Standardization bodies have developed new standards for meeting these requirements. One such standard in the Time-Sensitive Networking (TSN) toolkit is IEEE Std 802.1Qbu, which specifies frame preemption. This amendment to IEEE Std 802.1Q, along with IEEE Std 802.3br, specifies a method for transmitting time-sensitive frames in a manner that significantly reduces delay and delay variation.

While the greatest benefit of delay reduction is realized at lower port rates, the intent of frame preemption is to minimize delay and frame delay variation and to protect time-sensitive flows from other flows in a deterministic manner. This allows latency-sensitive fronthaul traffic to be transported without being impeded by other flows, making it easier to conform to the rigorous requirements imposed by standards such as IEEE 802.1CM, which defines Time-Sensitive Networking profiles for fronthaul.

Operators have a strong desire to move to packet-based fronthaul to drive down cost and increase scale. In the past, operators addressed fronthaul primarily through direct fiber and point-to-point wavelength-division multiplexing (WDM) links, which did not cause asymmetry or delay variation. With the move to transport fronthaul streams over bridged Ethernet networks, strict latency and jitter requirements raise the need for new standards-based mechanisms that will make Ethernet deterministic. The need is greatest in cases where time-sensitive frames must be prioritized over non-time-sensitive frames.

Traditional Ethernet queue management and scheduling techniques, such as strict priority queuing (SPQ) or variants of round-robin (RR) queuing, schedule full frames by priority or by relative weight. However, these techniques do not provide the determinism needed to transport high-priority, time-sensitive traffic. A high-priority frame must wait in its queue if a lower-priority frame is already being transmitted. The scheduler then serves high-priority frames as quickly as possible, but the added delay can reach tens of microseconds if the lower-priority frames are large. Excessive and variable delay for time-sensitive frames can degrade voice and video services and cause inaccurate data collection in real-time monitoring systems.
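To put rough numbers on this head-of-line blocking, the sketch below computes how long a high-priority frame waits if it arrives just after a lower-priority frame has started transmitting. It assumes 20 bytes of per-frame wire overhead (preamble, SFD, and minimum inter-packet gap) and, for comparison, models the preemption case as waiting for a hypothetical 64-byte residual fragment. The exact worst case depends on the IEEE 802.3br fragment rules, so the preemption figure is a simplification rather than a specified bound.

```python
# Rough head-of-line blocking estimate for a high-priority frame that arrives
# just after a lower-priority frame has started transmitting.
# Assumptions (illustrative, not an exact worst case from any standard):
#   - each frame carries 20 bytes of wire overhead (8 preamble/SFD + 12 IPG)
#   - with preemption, the high-priority frame waits for at most a
#     hypothetical 64-byte residual fragment of the lower-priority frame

WIRE_OVERHEAD_BYTES = 20
RESIDUAL_FRAGMENT_BYTES = 64  # hypothetical simplification


def serialization_us(frame_bytes: int, rate_gbps: float) -> float:
    """Microseconds needed to put one frame (plus overhead) on the wire."""
    bits = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return bits / (rate_gbps * 1e3)  # 1 Gb/s == 1e3 bits per microsecond


def blocking_delay_us(blocking_frame_bytes: int, rate_gbps: float,
                      preemption: bool) -> float:
    """Wait caused by one lower-priority frame that is already on the wire."""
    if preemption:
        # Only the residual fragment must finish before the express frame.
        blocking_frame_bytes = min(blocking_frame_bytes, RESIDUAL_FRAGMENT_BYTES)
    return serialization_us(blocking_frame_bytes, rate_gbps)


if __name__ == "__main__":
    for rate in (1.0, 10.0, 25.0):
        for size in (1522, 9000):  # max VLAN-tagged frame, jumbo frame
            plain = blocking_delay_us(size, rate, preemption=False)
            fp = blocking_delay_us(size, rate, preemption=True)
            print(f"{rate:>5.0f} Gb/s, {size:>5} B: {plain:7.3f} us -> {fp:6.3f} us")
```

Under these assumptions, a 9000-byte jumbo frame blocks a 1 Gb/s port for about 72 µs and a 1522-byte frame for about 12.3 µs, while the same frames block a 10 Gb/s port for roughly a tenth as long, which is why the benefit of preemption shrinks as port rates rise.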

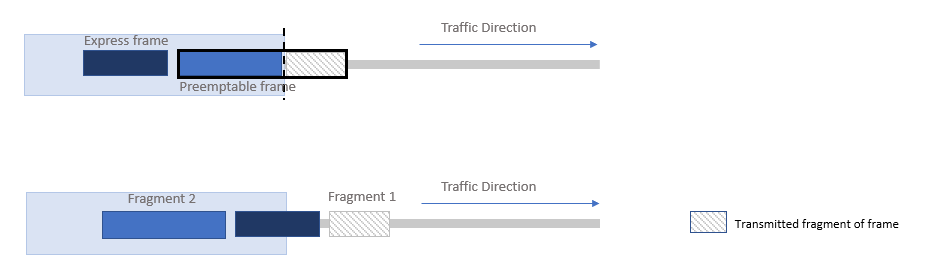

A system that complies with IEEE 802.1Qbu interrupts the transmission of a non-time-sensitive frame when a time-sensitive frame arrives for transmission. The system treats the time-sensitive frame as an express frame and transmits it either immediately or after transmitting a minimum fragment of the preemptable frame. It then resumes transmitting the remainder of the preemptable frame. The receiving side (at the other end of the link) reassembles the fragments into the original frame.
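The transmit-side behavior described above can be sketched as a function that returns the order in which pieces of frames appear on the wire. This is a simplified model, not the IEEE 802.3br algorithm: it assumes a hypothetical `MIN_FRAGMENT` of 64 bytes and allows a cut only when both the fragment already sent and the remainder are at least that long. The `Segment` type and `preempt` function are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_FRAGMENT = 64  # hypothetical minimum fragment length in bytes


@dataclass
class Segment:
    kind: str   # "preemptable" or "express"
    start: int  # first byte offset within its original frame
    end: int    # one past the last byte offset


def preempt(pframe_len: int, sent: int, express_len: int) -> list[Segment]:
    """Wire order when an express frame arrives after `sent` bytes of a
    preemptable frame of length `pframe_len` have been transmitted."""
    if not 0 <= sent <= pframe_len:
        raise ValueError("sent must lie within the preemptable frame")
    # Keep transmitting until at least a minimum fragment has gone out.
    cut = max(sent, MIN_FRAGMENT)
    # If the remainder would be shorter than a minimum fragment, the frame
    # cannot be split: finish it, then send the express frame.
    if pframe_len - cut < MIN_FRAGMENT:
        return [Segment("preemptable", 0, pframe_len),
                Segment("express", 0, express_len)]
    return [Segment("preemptable", 0, cut),            # first fragment
            Segment("express", 0, express_len),        # express frame goes next
            Segment("preemptable", cut, pframe_len)]   # resumed remainder
```

For example, `preempt(1522, 300, 128)` yields a first fragment covering bytes 0 to 300, then the express frame, then the remainder from byte 300 to 1522, whereas `preempt(1522, 1500, 128)` simply finishes the preemptable frame because only 22 bytes remain.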

With this approach in place, operators can ensure that time-sensitive frames experience much smaller delays with tight frame delay variation. In a fronthaul application, time-critical fronthaul traffic is configured to use high-priority express queues, while other, non-time-critical traffic is configured to use preemptable queues. Figure 1 illustrates this concept at a high level.
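The configuration step can be pictured as a per-priority table, loosely modeled on the per-priority express/preemptable status that IEEE 802.1Qbu defines. The table, the `classify` helper, and the choice of priorities 6 and 7 for fronthaul are hypothetical examples, not a vendor's configuration syntax or a recommended mapping.

```python
from enum import Enum


class PreemptionStatus(Enum):
    EXPRESS = "express"
    PREEMPTABLE = "preemptable"


# Hypothetical per-port table: 802.1Q priority (PCP 0-7) -> preemption status.
# Assumption for illustration only: fronthaul flows are marked with PCP 6 or 7.
FRONTHAUL_PRIORITIES = {6, 7}
PREEMPTION_TABLE = {
    pcp: (PreemptionStatus.EXPRESS if pcp in FRONTHAUL_PRIORITIES
          else PreemptionStatus.PREEMPTABLE)
    for pcp in range(8)
}


def classify(pcp: int) -> PreemptionStatus:
    """Return whether frames with this priority use express or preemptable queues."""
    if pcp not in PREEMPTION_TABLE:
        raise ValueError(f"PCP must be 0-7, got {pcp}")
    return PREEMPTION_TABLE[pcp]
```

Keeping the decision in a single per-priority table mirrors the idea that preemption is configured by traffic priority rather than per flow: everything marked with a fronthaul priority bypasses preemptable traffic, and everything else can be interrupted.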

Figure 1. The basics of frame preemption

IEEE 802.3br defines the MAC merge sublayer (MMS), which handles the Ethernet side of preemption: it keeps preemptable and express frames apart and fragments and reassembles preempted frames at every hop. With IEEE 802.3br, the MAC layer uses the MMS to support two MAC service interfaces: an express MAC (eMAC) and a preemptable MAC (pMAC). Because preemptable frames are fragmented and express frames are interspersed between the fragments, reassembly happens at the receiving end of each link, so frame preemption must be enabled on both ends of every hop to ensure that frames are reassembled correctly.
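On the receive side, MMS behavior can be sketched as a small state machine that hands express frames straight to the eMAC and stitches preemptable fragments back together before passing them to the pMAC. This is a simplified illustration: the `MPacket` type, its `kind` values, and the `MacMergeReceiver` class are hypothetical, and the start and continuation delimiters, fragment counters, and CRC handling that IEEE 802.3br defines are omitted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MPacket:
    kind: str          # hypothetical tags: "express", "start", or "continue"
    data: bytes
    last: bool = True  # for preemptable fragments: is this the final fragment?


class MacMergeReceiver:
    """Toy receive-side MAC merge: demultiplexes to eMAC and pMAC."""

    def __init__(self) -> None:
        self.emac: List[bytes] = []  # frames delivered to the express MAC
        self.pmac: List[bytes] = []  # reassembled frames for the preemptable MAC
        self._partial: Optional[bytearray] = None

    def receive(self, pkt: MPacket) -> None:
        if pkt.kind == "express":
            # Express frames are never fragmented; deliver immediately,
            # even while a preemptable frame is only partially received.
            self.emac.append(pkt.data)
        elif pkt.kind == "start":
            if self._partial is not None:
                raise ValueError("new preemptable frame before previous one finished")
            self._partial = bytearray(pkt.data)
            self._maybe_finish(pkt)
        elif pkt.kind == "continue":
            if self._partial is None:
                raise ValueError("continuation fragment without a start fragment")
            self._partial.extend(pkt.data)
            self._maybe_finish(pkt)
        else:
            raise ValueError(f"unknown mPacket kind: {pkt.kind}")

    def _maybe_finish(self, pkt: MPacket) -> None:
        # Hand the frame to the pMAC once its final fragment has arrived.
        if pkt.last and self._partial is not None:
            self.pmac.append(bytes(self._partial))
            self._partial = None
```

Feeding this receiver a first fragment (with `last=False`), an express frame, and then the final fragment delivers the express frame to the eMAC first and the reassembled preemptable frame to the pMAC second, which is the interleaving that makes per-link reassembly necessary.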

Skeptics have questioned whether frame preemption is necessary, noting that its greatest impact on latency is seen at lower port rates and that this impact decreases as port rates increase. However, testing has revealed that frame preemption saves multiple microseconds when a high fan-in of client ports competes for the same line port. Schedulers may transmit in microbursts instead of frame by frame, allowing for features such as look-ahead scheduling. Frames between the scheduler and the MAC are considered in-flight frames and can negatively affect frame delay variation (FDV). Without frame preemption, a frame cannot be interrupted once it has left the scheduler, so a time-sensitive frame must wait behind every in-flight frame ahead of it.
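The effect of in-flight frames can be estimated with a short sketch. It assumes a time-sensitive frame arrives just as the first frame of a microburst, already handed from the scheduler to the MAC, starts transmitting. Without preemption the time-sensitive frame waits for the whole burst; with preemption it waits for at most a hypothetical 64-byte residual fragment of the frame on the wire, because the remaining in-flight frames are on the preemptable path. The 20-byte overhead, the residual size, and the burst shape are illustrative assumptions, not measured values.

```python
from typing import Sequence

WIRE_OVERHEAD_BYTES = 20      # preamble/SFD + minimum inter-packet gap
RESIDUAL_FRAGMENT_BYTES = 64  # hypothetical simplification of the fragment rules


def wire_time_us(frame_bytes: int, rate_gbps: float) -> float:
    """Microseconds to serialize one frame plus overhead."""
    return (frame_bytes + WIRE_OVERHEAD_BYTES) * 8 / (rate_gbps * 1e3)


def express_wait_us(in_flight: Sequence[int], rate_gbps: float,
                    preemption: bool) -> float:
    """Wait seen by a time-sensitive frame that arrives just as the first of
    `in_flight` (frame sizes in bytes, already past the scheduler) starts."""
    if not in_flight:
        return 0.0
    if preemption:
        # Only the current frame's residual fragment must finish; the other
        # in-flight frames are preemptable and go out after the express frame.
        return wire_time_us(min(in_flight[0], RESIDUAL_FRAGMENT_BYTES), rate_gbps)
    # No preemption: every frame already past the scheduler goes out first.
    return sum(wire_time_us(size, rate_gbps) for size in in_flight)


if __name__ == "__main__":
    burst = [1522] * 4  # hypothetical microburst of four full-size frames
    for rate in (10.0, 25.0):
        print(f"{rate:.0f} Gb/s: "
              f"{express_wait_us(burst, rate, False):.3f} us without preemption, "
              f"{express_wait_us(burst, rate, True):.4f} us with preemption")
```

In this model, a burst of four 1522-byte frames at 10 Gb/s adds about 4.9 µs of waiting without preemption versus well under 0.1 µs with it. The gap grows with burst length, which is why high fan-in onto a single line port is where preemption still pays off at higher rates.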

Frame preemption can lower latency. But its real value comes from its ability to decrease the effects of non-fronthaul traffic on fronthaul traffic and to make network design for fronthaul traffic deterministic by minimizing latency and latency variation in a queued bridged network. This becomes crucial when operators mix fronthaul and non-fronthaul services within a C-RAN fronthaul network that offers converged multiservice access.