Over the past two years, I have gathered a great deal of information about AI enhancements to the RAN and the possibility of running RAN workloads on GPUs. Many readers have seen my 40-page report in this area, and they know I am skeptical of the short-sighted idea of running RAN software on Grace Hopper servers.

One mobile operator has decided to defy the logic in my analysis and give it a try. SoftBank will build a cluster of 5G sites in a centralized RAN configuration, where a single Grace Hopper server can handle the load of around 20 sites.

As he has in the past, SoftBank's visionary leader, Masayoshi Son, is pushing to stay three steps ahead. Masa is taking a risk: the intention is to create an AI marketplace with local and secure AI computing. This is a speculative idea. Will the transition to AI justify shifting business from AWS, Microsoft, and Google to a mobile operator? Is there an appetite for local AI services on shared infrastructure?

I am still skeptical. I haven’t seen serious revenue from low-latency IoT yet, let alone from low-latency AI applications. And make no mistake: it is revenue from AI services that is driving this experiment. There are no savings in RAN operations, because the server's cost and power consumption are extremely high.

Meanwhile, Nvidia has also announced partnerships with Nokia and Ericsson. This looks more promising to me. Ericsson will soon release a new baseband ASIC with some cores devoted to AI inferencing. Instead of buying a $50,000 Grace Hopper server, why not place two or three GPU cores on an existing ASIC to enhance performance? This seems like the right tradeoff in cost and power consumption. In this case, AI serves only the telecom application, not commercial AI services. The problem therefore has well-defined boundary conditions, and the inference models can be kept very small. It fits the RAN budget.

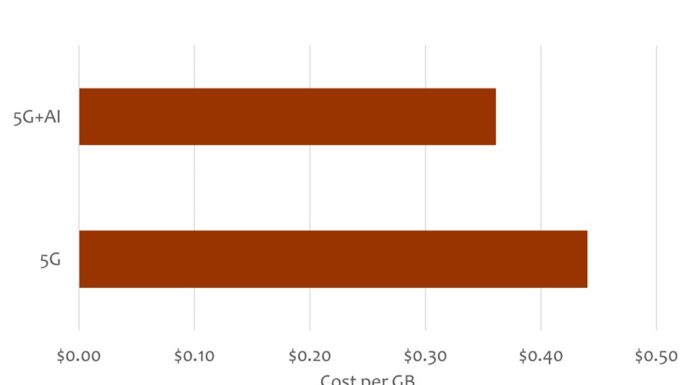

Using AI and machine learning to optimize the RAN has a very specific ROI: it gives operators more capacity without spending much money. Our calculations indicate that the weighted cost of delivering each GB of data drops from $0.44 to $0.36 on the same hardware when AI optimization features are added to traffic steering, beamforming, and other radio settings.
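To put those two cost figures in capacity terms, here is a back-of-the-envelope check. It assumes, for illustration only, that the operator's total spend stays fixed and that the weighted cost per GB scales linearly with that spend:

$$
\frac{0.44 - 0.36}{0.44} = \frac{0.08}{0.44} \approx 18.2\% \ \text{lower cost per GB}
$$

$$
\frac{0.44}{0.36} \approx 1.22 \;\Rightarrow\; \text{roughly } 22\% \ \text{more GB delivered for the same spend}
$$

Under those assumptions, the cost reduction and the capacity gain are two views of the same improvement, which is why the AI-for-RAN case can be evaluated directly against the RAN budget.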

Making the leap from enhancing RAN capacity (AI-for-RAN) to offering AI services (AI-on-RAN) may happen someday, but I believe it will require at least 10 years of development. This experiment may work technically, but the business model and application development will take much longer. It’s important for the industry to understand the difference between optimizing the radio (worthwhile, with a clear ROI) and investing in AI services (speculative and risky).