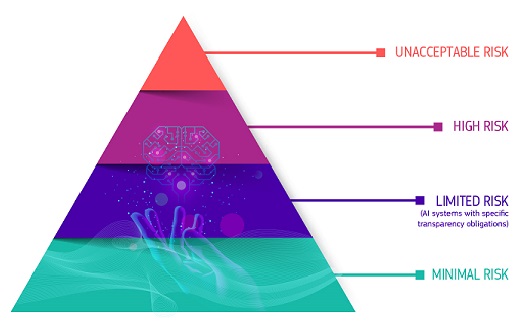

The AI Act defines four levels of risk for AI systems: Unacceptable risk, high risk, limited risk and minimal risk

The European Union’s (EU) Artificial Intelligence Act (AI Act) sets a common regulatory and legal framework for the development and application of AI. It was proposed by the European Commission (EC) in April 2021, passed by the European Parliament in March 2024 and approved by the Council of the EU in May 2024. This week, the EC established new guidelines that prohibit the use of AI practices whose risk is deemed “unacceptable,” while those deemed “high” risk remain permitted but monitored.

The EC’s website explains that the AI Act has defined four levels of risk for AI systems: Unacceptable risk, high risk, limited risk and minimal risk.

Unacceptable-risk AI systems, or those considered a “clear threat to the safety, livelihoods and rights of people,” are now banned in the EU. These include social scoring, scraping of internet or CCTV material to build facial recognition databases, and harmful AI-based manipulation, deception and exploitation of vulnerabilities.

While not prohibited, AI systems categorized as “high risk” will also be monitored by the EC. These include applications that appear to have been created in good faith but could lead to devastating consequences if something were to go wrong. Examples include: AI safety components in critical infrastructure whose failure could put the life and health of citizens at risk, such as transportation; AI solutions used in educational institutions that may determine access to education and the course of someone’s professional life, such as exam scoring; AI-based safety components of products, such as robot-assisted surgery; and AI use cases in law enforcement that may interfere with people’s fundamental rights, such as evidence evaluation.

Before a high-risk AI system can be placed on the market, it must undergo a “conformity” assessment and comply with all AI Act requirements. The system must then be registered in an EU database, if it is a standalone AI system, and must bear the Conformité Européenne (CE) mark, which has been used to regulate goods sold within the European Economic Area (EEA) since 1985.

Enforcement of the act will be overseen by national regulators, and companies found to be non-compliant will be slapped with hefty financial penalties that, for the most serious violations, can reach €35 million or 7% of global annual revenue, whichever is higher. Those engaging in prohibited AI practices may also be banned from operating in the EU at all.

For most AI developers in the region, though, none of this will be necessary. That’s because, according to the EC, the majority of AI systems currently used in the EU present minimal or no risk.

“The AI Act ensures that Europeans can trust what AI has to offer,” the EC stated. “While most AI systems pose limited to no risk and can contribute to solving many societal challenges, certain AI systems create risks that we must address to avoid undesirable outcomes.”

For an AI Act deep dive, check out “Five things to know about the European AI Act.”